When people think about decentralized storage, they usually picture a swarm of nodes and a token fee. That picture is incomplete. Walrus is quietly building something that looks more like the real internet: a base network plus a permissionless layer of service providers that makes it usable by ordinary applications.

What matters most right now is that Walrus does not require every user to talk to dozens of storage nodes, handle encoding, or manage certificates. It relies on a market of operators (publishers, aggregators, caches) so that apps get Web2-level convenience with Web3-level verification. That is a mature way to design infrastructure.

The internet is not node-to-node. It is “service‑to‑user.”

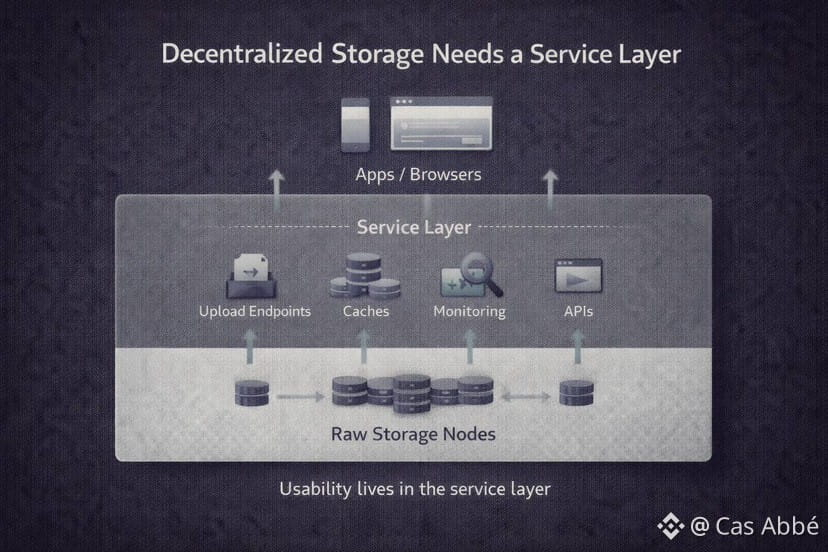

Much of the internet you use is not a literal point-to-point connection between your phone and a raw server. It is a chain of services: upload endpoints, CDNs, caches, gateways, monitoring, retries. Web2 feels fast because layers of operators smooth everything over.

Walrus builds this reality into its architecture instead of treating it as an afterthought. Its own design documentation explicitly defines aggregators, caches, and publishers as optional roles that anyone can run permissionlessly.

That is the philosophical shift. Walrus does not only decentralize storage. It also decentralizes the cloud services that surround storage.

Publishers: uploads without hidden trust.

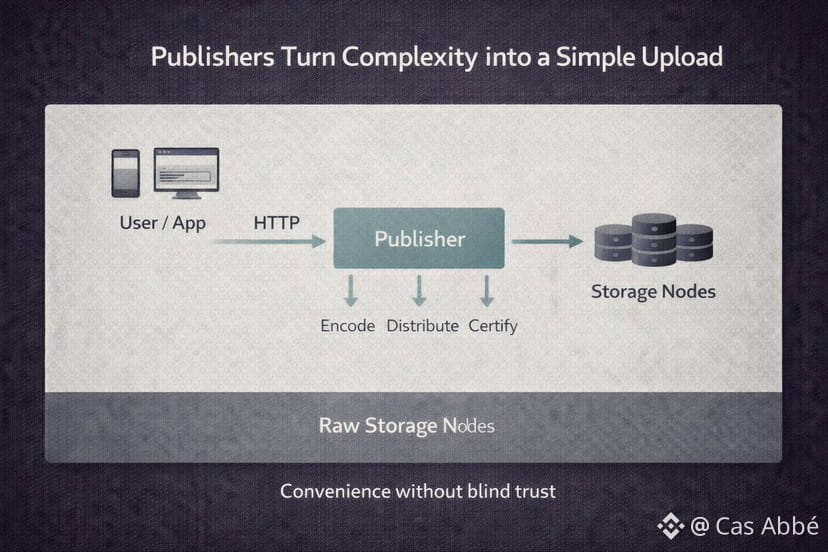

A publisher in Walrus is essentially a professional uploader. Instead of making your app do everything, you send a blob over standard Web2 technology such as HTTP, and the publisher encodes it, sends the fragments to storage nodes, collects their signatures, aggregates them into a certificate, and performs whatever on-chain steps are needed.
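The publisher's job can be sketched as a small Python model. Everything here is a hypothetical stand-in, not the Walrus implementation: `StorageNode`, `encode`, and `publish` are illustrative names, the "encoding" is a plain split rather than Walrus's erasure coding, HMAC tags replace real node signatures, the blob ID is a plain SHA-256 of the content, and the quorum is assumed to be 2f+1 of n = 3f+1 nodes. The point is the shape of the flow: encode, fan out, collect acknowledgements, aggregate them into a certificate.

```python
from __future__ import annotations

import hashlib
import hmac
from dataclasses import dataclass, field


@dataclass
class StorageNode:
    """Hypothetical storage node: keeps fragments and returns a signed ack."""
    node_id: int
    key: bytes  # per-node secret; HMAC stands in for a real signature scheme
    online: bool = True
    stored: dict = field(default_factory=dict)

    def store(self, blob_id: str, fragment: bytes) -> bytes | None:
        if not self.online:
            return None  # simulate an unreachable node
        self.stored[blob_id] = fragment
        return hmac.new(self.key, blob_id.encode(), hashlib.sha256).digest()


def encode(blob: bytes, n: int) -> list[bytes]:
    """Toy 'encoding': split into n chunks. No redundancy, unlike real erasure coding."""
    size = -(-len(blob) // n)  # ceiling division
    return [blob[i * size:(i + 1) * size] for i in range(n)]


def publish(blob: bytes, nodes: list[StorageNode]) -> dict:
    """Encode, fan out to nodes, and aggregate acks into a toy certificate."""
    n = len(nodes)
    quorum = 2 * ((n - 1) // 3) + 1  # assumed 2f+1 out of n = 3f+1
    blob_id = hashlib.sha256(blob).hexdigest()  # simplified content-derived ID
    acks = {}
    for node, fragment in zip(nodes, encode(blob, n)):
        sig = node.store(blob_id, fragment)
        if sig is not None:
            acks[node.node_id] = sig
    if len(acks) < quorum:
        raise RuntimeError(f"only {len(acks)} acks, need {quorum}")
    # A real publisher would now submit the certificate on-chain (not modeled).
    return {"blob_id": blob_id, "signatures": acks}
```

With four nodes (f = 1) the sketch tolerates one unreachable node; a second failure drops the acks below the quorum of three, and the upload fails instead of producing a weak certificate.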

This matters for two reasons.

First, it makes Walrus usable for real products. Most teams do not want users managing complicated storage flows inside a browser. They want "upload, done."

Second, Walrus does not ask you to trust the publisher blindly. By checking the on-chain evidence, a user can confirm that the publisher did its job, and can verify reads later. Convenience is allowed in, but the system still anchors to verifiable truth.
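Checking the publisher's work can be sketched the same way. This toy `verify_certificate` (a hypothetical name) assumes the verifier knows every node's key, because HMAC tags stand in for public-key signatures; the real on-chain checks are not modeled here. What carries over is the logic: the certificate must name the right data, and a quorum (assumed 2f+1 of n = 3f+1) of distinct, known committee members must have vouched for it.

```python
from __future__ import annotations

import hashlib
import hmac


def verify_certificate(blob: bytes, cert: dict, node_keys: dict[int, bytes]) -> bool:
    """Check a toy certificate: matching blob ID and a quorum of valid acks."""
    n = len(node_keys)
    quorum = 2 * ((n - 1) // 3) + 1  # assumed 2f+1 out of n = 3f+1
    blob_id = hashlib.sha256(blob).hexdigest()  # simplified content-derived ID
    if cert.get("blob_id") != blob_id:
        return False  # the certificate is about different data
    valid = 0
    # Dict keys are unique, so each node can be counted at most once.
    for node_id, sig in cert.get("signatures", {}).items():
        key = node_keys.get(node_id)
        if key is None:
            continue  # ignore signers outside the known committee
        expected = hmac.new(key, blob_id.encode(), hashlib.sha256).digest()
        if hmac.compare_digest(expected, sig):
            valid += 1
    return valid >= quorum
```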

That is the kind of trade-off that lets a network scale: let specialists do the heavy lifting, but keep the evidence transparent.

Aggregators and caches: The Walrus CDN layer, with receipts.

Even when it is cheap in principle, reading from decentralized storage can take real effort. Someone has to fetch enough pieces, reconstruct the blob, and deliver it to applications in a normal way.

Walrus's answer is the aggregator: a client that reconstructs blobs and serves them over standard Web2 infrastructure such as HTTP. Walrus goes further with caches: aggregators with added caching that act like a CDN, reducing latency and load on storage nodes and spreading the cost of reconstruction across many requests.
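What an aggregator and a cache do can be sketched with a toy erasure code. Walrus uses its own, more robust encoding; this sketch substitutes a single XOR parity piece, so any k of the k + 1 pieces rebuild the blob, and it assumes the original length is known from metadata. `encode`, `reconstruct`, and `CachingAggregator` are hypothetical names, and the cache is a plain LRU.

```python
from __future__ import annotations

from collections import OrderedDict


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(blob: bytes, k: int) -> list[bytes]:
    """Toy code: k equal-length data pieces (zero-padded) plus one XOR parity piece."""
    size = max(1, -(-len(blob) // k))
    padded = blob.ljust(size * k, b"\0")
    pieces = [padded[i * size:(i + 1) * size] for i in range(k)]
    parity = bytes(size)
    for p in pieces:
        parity = xor_bytes(parity, p)
    return pieces + [parity]


def reconstruct(pieces: list[bytes | None], length: int) -> bytes:
    """Rebuild the blob when at most one of the k+1 pieces is missing (None)."""
    missing = [i for i, p in enumerate(pieces) if p is None]
    if len(missing) > 1:
        raise ValueError("too many missing pieces for this toy code")
    if missing:
        size = len(next(p for p in pieces if p is not None))
        acc = bytes(size)
        for p in pieces:
            if p is not None:
                acc = xor_bytes(acc, p)  # XOR of the survivors equals the lost piece
        pieces = list(pieces)
        pieces[missing[0]] = acc
    return b"".join(pieces[:-1])[:length]  # drop parity and padding


class CachingAggregator:
    """Hypothetical aggregator with an LRU cache in front of reconstruction."""

    def __init__(self, fetch_pieces, capacity: int = 2):
        self.fetch_pieces = fetch_pieces  # blob_id -> (pieces, original length)
        self.cache = OrderedDict()
        self.capacity = capacity
        self.reconstructions = 0

    def get(self, blob_id: str) -> bytes:
        if blob_id in self.cache:
            self.cache.move_to_end(blob_id)  # mark as recently used
            return self.cache[blob_id]
        pieces, length = self.fetch_pieces(blob_id)
        blob = reconstruct(pieces, length)
        self.reconstructions += 1
        self.cache[blob_id] = blob
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used blob
        return blob
```

A second `get` for the same blob is served from memory, which is the CDN effect described above: one reconstruction, many cheap reads.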

The key point that makes this Walrus, and not just Web2 again, is that a client can always verify that reads served through cache infrastructure are correct.

So the cache can be fast and still be checkable. That is how Walrus bridges normal user experience and cryptographic correctness.
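Verifying a read from untrusted cache infrastructure comes down to recomputing the identifier from the bytes received. This sketch simplifies heavily: real Walrus blob IDs are computed from the encoded blob rather than as a plain SHA-256 of its contents, and `blob_id_of` and `verified_read` are hypothetical helpers. It shows the pattern: accept the fast answer only if it checks out, and otherwise move on to the next source.

```python
from __future__ import annotations

import hashlib
from typing import Callable, Iterable


def blob_id_of(data: bytes) -> str:
    # Stand-in only: real Walrus blob IDs are not a plain SHA-256 of the content.
    return hashlib.sha256(data).hexdigest()


def verified_read(blob_id: str, sources: Iterable[Callable[[str], bytes]]) -> bytes:
    """Try untrusted caches in order; return the first response that matches blob_id."""
    for fetch in sources:
        try:
            data = fetch(blob_id)
        except Exception:
            continue  # unreachable or failing cache: try the next one
        if blob_id_of(data) == blob_id:
            return data
        # Mismatch: the cache served wrong bytes, so never hand them to the app.
    raise LookupError(f"no source returned verifiable data for {blob_id}")
```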

Why this is a real operator economy, not just a protocol.

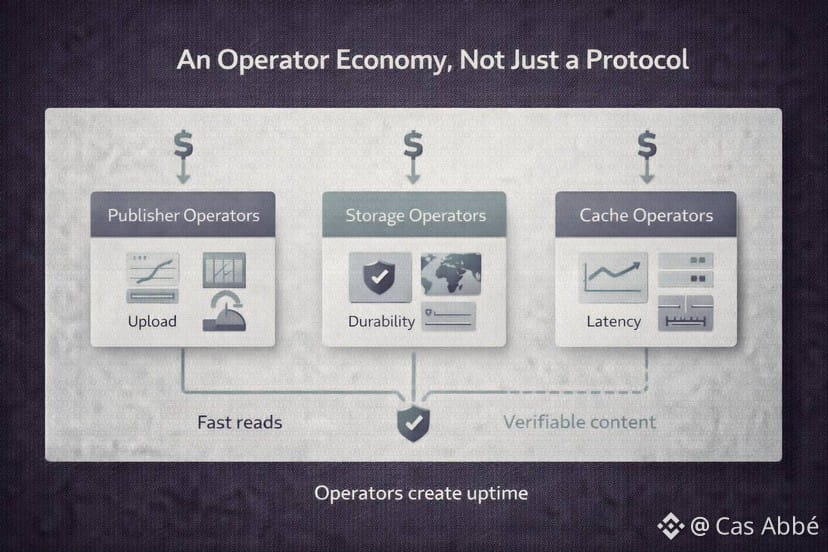

Zoom out, and Walrus supports not only nodes that store data but also operators who sell performance and reliability as a service.

Publishers can specialize in high-throughput ingest for a region. Cache operators can specialize in low-latency media reads. Aggregator operators can offer simple APIs to developers who do not want to reconstruct blobs themselves. Walrus even documents how to run these services, which shows that the service layer is a deliberate part of the strategy.

This is what turns a network into infrastructure. Infrastructure has roles. Roles have incentives. Incentives create businesses. Businesses create uptime.

Once uptime becomes someone's job, adoption stops being abstract.

Walrus makes integration feel like normal web development.

Another reason this service layer matters is that Walrus openly supports Web2 interfaces.

The Walrus documentation describes an HTTP API offered by publicly accessible services, covering store and read operations as well as Quilt management through web endpoints. That is huge if you are building an app: you do not have to force Walrus into an unfamiliar workflow on day one.
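Here is roughly what that HTTP surface looks like from Python's standard library. The paths are an assumption based on the Walrus HTTP API docs at the time of writing (`PUT /v1/blobs` on a publisher, `GET /v1/blobs/<blob-id>` on an aggregator, with an optional `epochs` query parameter); check the current docs before relying on them. The hostnames and the helper names `store_request` and `read_request` are hypothetical, and the sketch only builds requests without sending them.

```python
from __future__ import annotations

from urllib.parse import quote, urlencode
from urllib.request import Request

PUBLISHER = "https://publisher.example.com"    # hypothetical publisher host
AGGREGATOR = "https://aggregator.example.com"  # hypothetical aggregator host


def store_request(data: bytes, epochs: int | None = None) -> Request:
    """Build a PUT that uploads raw bytes to a publisher (path assumed from the docs)."""
    url = f"{PUBLISHER}/v1/blobs"
    if epochs is not None:
        url += "?" + urlencode({"epochs": epochs})
    return Request(url, data=data, method="PUT")


def read_request(blob_id: str) -> Request:
    """Build a GET that reads a blob back through an aggregator (path assumed)."""
    return Request(f"{AGGREGATOR}/v1/blobs/{quote(blob_id, safe='')}", method="GET")
```

Against a real deployment you would pass these to `urllib.request.urlopen`; the response format is described in the Walrus docs and is not modeled here.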

It is also a psychological unlock for developers: they trust what they can test quickly. An HTTP endpoint that can be hit with cURL, instrumented, and monitored removes a huge barrier to entry.

The broader trend is that Walrus treats a normal developer experience as part of decentralization, not something achieved in spite of it.

Trust is not only about storage nodes.

Clients can get encoding wrong, too.

Most people assume the main threat is faulty storage nodes. The subtler point the Walrus docs raise is that because encoding is done by the client, which may be an end user, a publisher, an aggregator, or a cache, the encoding itself may be wrong, by mistake or on purpose.
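Why client-side encoding errors matter, and one way a reader can catch them, fits in a short sketch. It uses a toy XOR-parity code and a toy commitment (a hash over fragment hashes) as hypothetical stand-ins for Walrus's real encoding and blob ID; `encode`, `commitment`, and `consistent_read` are illustrative names. The reader decodes, re-encodes, and compares against the committed ID, in the spirit of the consistency checks the Walrus docs describe. If the client wrote fragments that disagree with each other, the check fails and the reader returns nothing rather than data another reader might see differently.

```python
from __future__ import annotations

import hashlib


def xor_all(chunks: list[bytes]) -> bytes:
    out = bytes(len(chunks[0]))
    for c in chunks:
        out = bytes(a ^ b for a, b in zip(out, c))
    return out


def encode(blob: bytes, k: int) -> list[bytes]:
    """Toy code: k zero-padded data fragments plus one XOR parity fragment."""
    size = max(1, -(-len(blob) // k))
    padded = blob.ljust(size * k, b"\0")
    data = [padded[i * size:(i + 1) * size] for i in range(k)]
    return data + [xor_all(data)]


def commitment(fragments: list[bytes]) -> str:
    """Toy blob ID: a hash over the hashes of all fragments the client wrote."""
    h = hashlib.sha256()
    for f in fragments:
        h.update(hashlib.sha256(f).digest())
    return h.hexdigest()


def consistent_read(fragments: list[bytes], blob_id: str, length: int) -> bytes | None:
    """Decode from the data fragments, re-encode, and check against blob_id."""
    k = len(fragments) - 1
    blob = b"".join(fragments[:k])[:length]
    if commitment(encode(blob, k)) != blob_id:
        return None  # inconsistent encoding: refuse to return any data
    return blob
```

A faulty parity fragment goes unnoticed by a reader that only touches the data fragments and skips re-encoding; with the check, every reader reaches the same verdict.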

This matters for the service layer. Once publishers and aggregators are in the picture, the network has to cope with a world where not every client is perfect. By being explicit about this, the team shows it thinks like systems engineers: things can go wrong in many places, and correctness has to survive messy reality.

That is the difference between a protocol that demos well and a protocol that survives in production.

Observability signals seriousness: Walrus is building a monitoring culture.

One thing is undeniable: real infrastructure thrives or dies by its monitoring. If operators cannot see it, they cannot operate it.

The ecosystem already treats monitoring and visibility as essential. The awesome-walrus resource list, for example, includes a 3D globe visualizing the network and live monitoring of nodes, aggregators, and publishers.
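Monitoring starts with something as simple as rolling up health checks by role. This is a hypothetical sketch with made-up probe data, not the code behind any tool in the awesome-walrus list: `Probe` and `summarize` are illustrative names, availability is the fraction of successful probes, and latency is the upper median of successful probes.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Probe:
    """One health-check result for an operator endpoint (hypothetical data)."""
    role: str  # e.g. "node", "aggregator", "publisher"
    endpoint: str
    ok: bool
    latency_ms: float


def summarize(probes: list[Probe]) -> dict[str, dict[str, float]]:
    """Per-role availability and upper-median latency of successful probes."""
    by_role = defaultdict(list)
    for p in probes:
        by_role[p.role].append(p)
    report = {}
    for role, ps in by_role.items():
        ok_latencies = sorted(p.latency_ms for p in ps if p.ok)
        median = ok_latencies[len(ok_latencies) // 2] if ok_latencies else float("nan")
        report[role] = {"availability": len(ok_latencies) / len(ps), "median_ms": median}
    return report
```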

This is not hype tooling; it is what makes a decentralized system actually work.

When monitoring becomes a community service, you are watching a network turn from a piece of technology into a system that people actually run.

The quiet thesis: Walrus decentralizes not just disk space but the whole cloud pattern.


If I had to sum up this article in one sentence: Walrus is decentralizing not only storage nodes but the whole cloud-service pattern around storage (uploads, reads, caches, and operator tooling) while keeping verifiability as the anchor.

To me, that is rare. Many projects stay pure but unusable; many others become usable and lose verifiability. Walrus tries to keep both.

This is why I am optimistic. Not because it is a big store, but because Walrus plans for how the internet actually works (services, operators, monitoring, performance) without losing the ability to verify the truth.

This is not generic; it is real infrastructure thinking.