AI has become very good at responding. It can summarize, predict, and generate at impressive speed. However, most AI systems still suffer from a fundamental limitation: they do not truly remember. Each interaction is largely isolated. Context is passed temporarily, stored offchain, or reconstructed again and again. This works for short conversations, but it breaks down when AI is expected to act continuously, manage systems, or evolve over time.

This is where myNeutron changes the conversation, and why it matters that it is built on @Vanarchain rather than treated as an external AI tool.

Memory is not a feature. It is a system property. For humans, memory shapes identity, decision-making, and accountability. For AI agents, memory determines whether they can learn from past actions, coordinate complex workflows, and operate autonomously without constantly starting from zero. myNeutron demonstrates what happens when memory is not bolted on through databases or offchain services, but embedded directly into the execution environment.

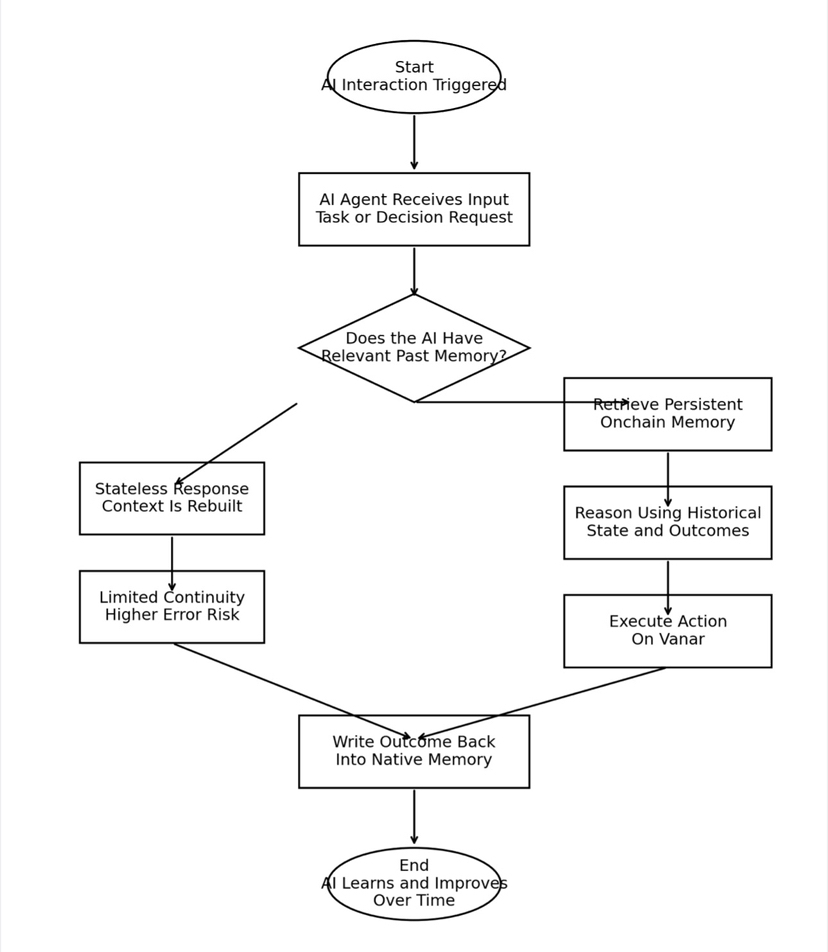

Most AI applications today rely on external storage. Data is written to centralized servers, vector databases, or proprietary clouds. The AI model queries this data when needed, but the memory itself lives outside the system that executes decisions. This creates fragility. Memory can be altered, lost, or selectively presented. There is no shared source of truth. For autonomous agents, this becomes a serious constraint because their understanding of the world is only as reliable as the infrastructure holding their past.

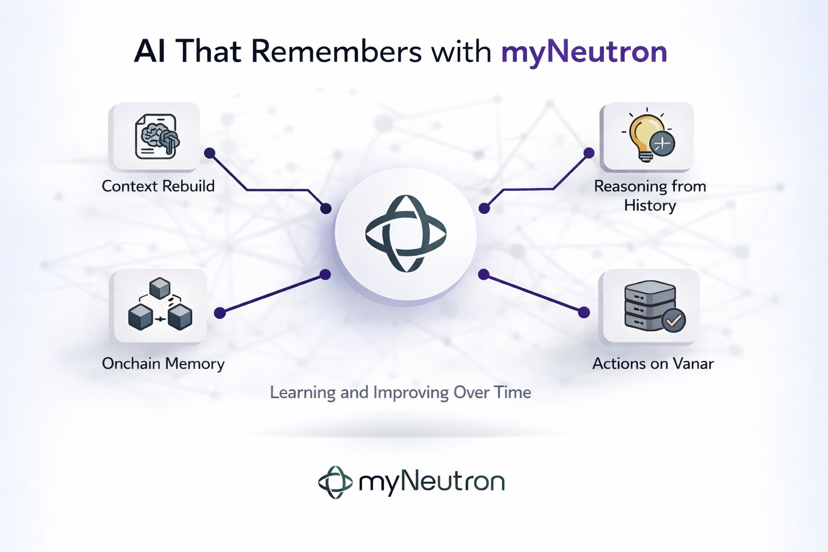

myNeutron approaches memory differently. Instead of treating memory as an external dependency, it treats it as part of the protocol layer. Interactions, state changes, and learned outcomes are written into a persistent environment that the AI agent can reliably reference. This means the agent does not just respond based on prompts. It responds based on history.

The role of Vanar here is critical. Vanar is designed as an environment where intelligence, execution, and enforcement coexist. When myNeutron stores memory, that memory is not just data. It is verifiable state. It cannot be quietly rewritten. It cannot be selectively hidden. This gives AI agents something they rarely have today: continuity they can trust.
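To make "it cannot be quietly rewritten" concrete, here is a minimal sketch of one generic way to get tamper-evident memory: an append-only log where every entry commits to the hash of the entry before it. This is not myNeutron's or Vanar's actual storage format, which this post doesn't describe. `MemoryLog`, `MemoryEntry` and the "anchored head" are hypothetical names. The anchored head stands in for whatever value a chain's consensus would commit.

```python
import hashlib
import json
from dataclasses import dataclass, field
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the very first entry


@dataclass
class MemoryEntry:
    # One recorded interaction or outcome, linked to its predecessor by hash.
    index: int
    payload: Dict[str, Any]
    prev_hash: str
    entry_hash: str


@dataclass
class MemoryLog:
    """Hypothetical append-only memory log; illustrative, not a real protocol format."""
    entries: List[MemoryEntry] = field(default_factory=list)

    @staticmethod
    def _digest(index: int, payload: Dict[str, Any], prev_hash: str) -> str:
        # Canonical JSON (sorted keys) so identical content always hashes identically.
        body = json.dumps({"i": index, "p": payload, "prev": prev_hash}, sort_keys=True)
        return hashlib.sha256(body.encode("utf-8")).hexdigest()

    def head(self) -> str:
        # Hash of the newest entry; this is the value that would be anchored externally.
        return self.entries[-1].entry_hash if self.entries else GENESIS

    def append(self, payload: Dict[str, Any]) -> MemoryEntry:
        prev_hash = self.head()
        index = len(self.entries)
        entry = MemoryEntry(index, payload, prev_hash, self._digest(index, payload, prev_hash))
        self.entries.append(entry)
        return entry

    def verify(self, anchored_head: str) -> bool:
        # Recompute every link; an edited, dropped, or reordered entry breaks the chain.
        prev = GENESIS
        for i, e in enumerate(self.entries):
            if e.index != i or e.prev_hash != prev:
                return False
            if e.entry_hash != self._digest(e.index, e.payload, e.prev_hash):
                return False
            prev = e.entry_hash
        # Internal consistency alone is not enough, since a forger could recompute
        # every hash. The final hash must match a head committed where it can't be edited.
        return prev == anchored_head


if __name__ == "__main__":
    log = MemoryLog()
    log.append({"action": "rebalance", "result": "failed"})
    log.append({"action": "rebalance", "result": "ok"})
    anchored = log.head()                    # stands in for a value committed on-chain
    print(log.verify(anchored))              # True
    log.entries[0].payload["result"] = "ok"  # quietly rewrite history
    print(log.verify(anchored))              # False
```

The design point is the last check in `verify`. A hash chain on its own only proves internal consistency. The tamper evidence comes from comparing the final hash against a value stored somewhere that cannot be edited, which is the role a blockchain plays.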

This continuity enables behavior that stateless AI simply cannot achieve. An agent with memory can recognize patterns over time. It can remember previous failures and adjust strategy. It can maintain long-running objectives instead of reacting moment to moment. myNeutron shows this in practice by allowing AI agents to build on their own past actions without external orchestration.
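Here is a toy illustration of "remember previous failures and adjust strategy." The agent keeps a running success record for each strategy and picks the one with the best score. `StrategyMemory` and the strategy names are hypothetical. The Laplace-smoothed success rate is just one simple scoring rule chosen for illustration, not something myNeutron is known to use.

```python
from collections import defaultdict
from typing import Dict, List


class StrategyMemory:
    """Hypothetical per-strategy outcome record; illustrative only."""

    def __init__(self, strategies: List[str]) -> None:
        self.strategies = list(strategies)
        self.successes: Dict[str, int] = defaultdict(int)
        self.attempts: Dict[str, int] = defaultdict(int)

    def record(self, strategy: str, succeeded: bool) -> None:
        # Persist the outcome so later decisions can take it into account.
        self.attempts[strategy] += 1
        if succeeded:
            self.successes[strategy] += 1

    def score(self, strategy: str) -> float:
        # Laplace-smoothed success rate: an untried strategy starts at 0.5.
        return (self.successes[strategy] + 1) / (self.attempts[strategy] + 2)

    def choose(self) -> str:
        # max() returns the first strategy with the top score, so ties keep list order.
        return max(self.strategies, key=self.score)


if __name__ == "__main__":
    memory = StrategyMemory(["aggressive", "conservative"])
    print(memory.choose())              # aggressive (tie, first in list)
    memory.record("aggressive", False)  # remembered failure
    memory.record("aggressive", False)
    print(memory.choose())              # conservative
```

A fresh `StrategyMemory` would pick `aggressive` again after those two failures, and a fresh record is effectively what a stateless agent gets on every call. The only difference is whether the outcome record persists.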

Another important distinction is that this memory is native, not simulated. Many systems attempt to approximate memory by replaying conversation logs or injecting summaries back into prompts. This is not real memory. It is reconstruction. Native memory means the agent’s past exists as part of the same system that governs its present actions. myNeutron demonstrates this by operating within Vanar’s execution layer rather than alongside it.

This has implications beyond AI design. It changes how we think about accountability. When an AI agent acts, its reasoning can be traced through its stored state. Decisions are not black boxes floating in inference space. They are outcomes of a persistent history. This matters for finance, governance, content moderation, and any domain where AI actions have real consequences.

myNeutron also highlights why AI agents belong onchain in the first place. If agents are going to manage assets, enforce rules, or coordinate systems, their memory must be as reliable as their execution. Offchain memory creates an asymmetry where actions are enforceable but reasoning is not. Vanar closes that gap by giving both the same foundation.

What makes this especially compelling is that myNeutron does not frame memory as a futuristic concept. It treats it as a practical requirement. AI agents that cannot remember are limited to assistance. AI agents that can remember become operators. They move from tools to participants. Vanar provides the conditions for that transition, and myNeutron proves it is not theoretical.

Over time, this approach reshapes how AI systems scale. Instead of growing smarter only through model updates, agents grow through lived experience. Each interaction adds context. Each outcome becomes reference material. The system evolves organically rather than through constant retraining. This is closer to how intelligence actually develops.

My take is that myNeutron quietly demonstrates something larger than a single product. It shows that native AI memory is not about storing more data. It is about giving AI a stable sense of past and present within the same system. Vanar makes that possible by treating memory as infrastructure, not metadata. As AI agents take on more responsibility, this distinction will matter more than raw model performance. Systems that can remember will outgrow systems that can only respond.