When most people hear decentralized storage, they picture a loose swarm of nodes and a token fee attached somewhere in the background. I used to think that way too. But that mental model misses what Walrus is quietly building. Walrus is shaping something that looks much closer to how the real internet works: a base network plus an open service layer that everyday applications can actually rely on.

What stands out to me is that Walrus does not expect every user or app to deal directly with encoding logic, node coordination, or certificate handling. Instead, it leans into an operator marketplace made up of publishers, aggregators, and caches. That setup lets applications feel familiar, almost Web2-like, while still giving me cryptographic proof under the hood. That is a grown-up way to design infrastructure.

How the Internet Actually Works and Why Walrus Copies That Reality

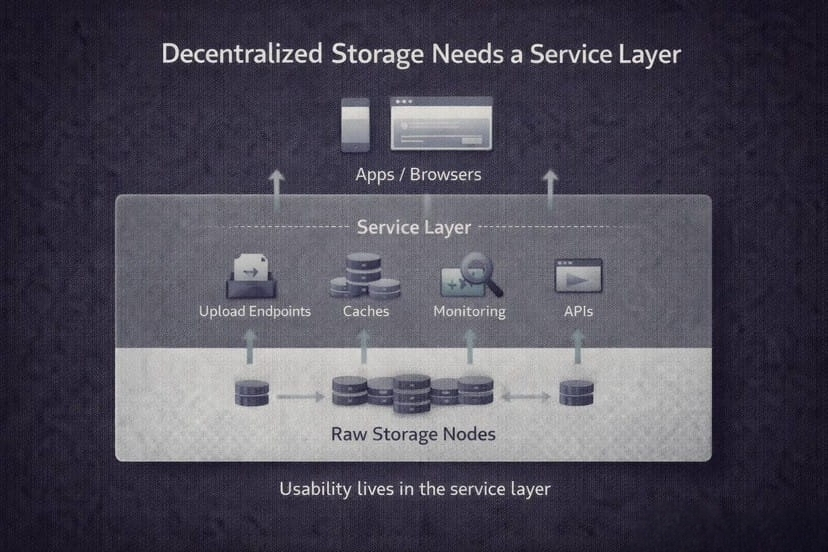

The internet I use every day is not a raw connection between my device and a single server. It is a chain of services. Upload endpoints handle ingestion. CDNs speed up reads. Caches absorb load. Monitoring systems retry when things fail. Performance comes from layers of operators smoothing out rough edges.

Walrus does not pretend this reality does not exist. Its own documentation explicitly defines publishers, aggregators, and caches as optional but first-class participants. These roles are permissionless and expected, not hacks added later.

That shift matters. Walrus is not just decentralizing storage nodes. It is decentralizing the cloud service patterns that sit around storage.

Publishers Make Uploads Simple Without Asking for Blind Trust

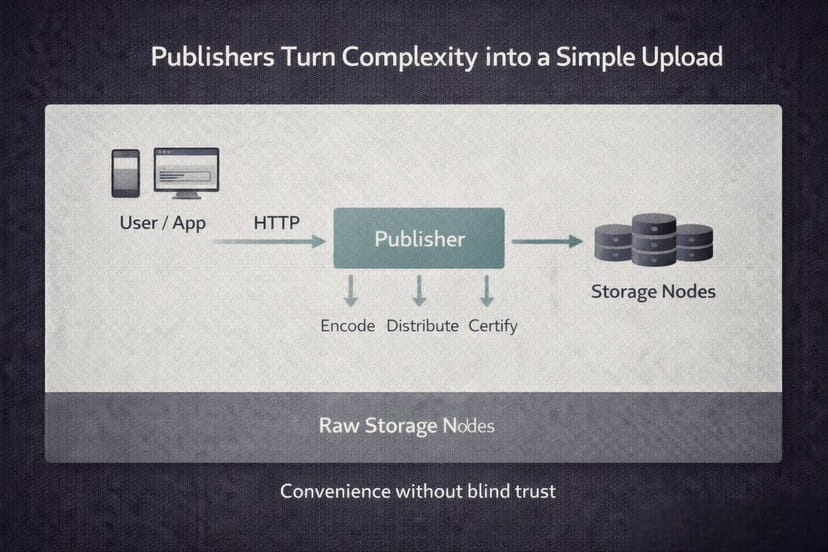

In Walrus, a publisher is basically a professional uploader. Instead of forcing every app to fragment data and talk to storage nodes directly, a publisher can accept data over standard web methods like HTTP. The publisher can then encode the data, split it into pieces, distribute those pieces to storage nodes, and produce an on-chain certificate.

I see two big benefits here. First, this makes Walrus usable for real products. Most teams want an experience that feels like upload and done, not a custom storage pipeline inside a browser. Second, Walrus does not blindly trust the publisher. I can still verify on-chain that the work was done correctly and confirm reads later.

That balance is important. Specialists handle complexity, but proof remains public. Convenience is allowed without giving up truth.
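To make the "upload and done" idea concrete, here is a minimal sketch of what handing a blob to a publisher over HTTP could look like. The host `publisher.example.com` is hypothetical, and the `PUT /v1/blobs` path with an `epochs` query parameter reflects my understanding of the Walrus HTTP API, so treat both as assumptions and check them against the current docs. The sketch only builds the request; it does not send it.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical publisher host, used only for illustration.
PUBLISHER = "https://publisher.example.com"


def build_store_request(data: bytes, epochs: int = 1,
                        publisher: str = PUBLISHER) -> Request:
    """Build (but do not send) an HTTP PUT that hands a blob to a publisher.

    Assumed endpoint shape: PUT {publisher}/v1/blobs?epochs=N
    """
    url = f"{publisher}/v1/blobs?{urlencode({'epochs': epochs})}"
    return Request(url, data=data, method="PUT")


req = build_store_request(b"hello walrus", epochs=5)
print(req.get_method(), req.full_url)
```

Passing the request to `urllib.request.urlopen` would perform the actual upload. Everything after that point (encoding, distribution, certification) would be the publisher's job, not the app's.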

Aggregators and Caches Act Like a CDN With Proofs

Reading from decentralized storage can be awkward even when it is cheap. Someone still has to collect enough pieces, rebuild the data, and serve it in a normal way to applications.

Walrus answers this with aggregators that reconstruct blobs and deliver them through standard interfaces like HTTP. On top of that, caches reduce latency and load, much like a CDN. They spread reconstruction costs across many users instead of repeating work every time.
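The cost-spreading effect is easy to see in a toy model. The sketch below is not Walrus code: `CachingAggregator` and `slow_reconstruct` are hypothetical names, and the reconstruction step is a stand-in for collecting pieces and decoding them. It only shows that a cache in front of reconstruction does the expensive work once per blob rather than once per read.

```python
from typing import Callable, Dict


class CachingAggregator:
    """Toy cache in front of an expensive reconstruction function."""

    def __init__(self, reconstruct: Callable[[str], bytes]) -> None:
        self._reconstruct = reconstruct
        self._store: Dict[str, bytes] = {}
        self.reconstructions = 0  # how many times the expensive path ran

    def get(self, blob_id: str) -> bytes:
        if blob_id not in self._store:
            self.reconstructions += 1
            self._store[blob_id] = self._reconstruct(blob_id)
        return self._store[blob_id]


def slow_reconstruct(blob_id: str) -> bytes:
    # Stand-in for fetching enough pieces from storage nodes and decoding them.
    return f"contents of {blob_id}".encode()


cache = CachingAggregator(slow_reconstruct)
for _ in range(1000):
    cache.get("blob-a")
print(cache.reconstructions)  # 1
```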

What makes this different from plain Web2 is verifiability. Even if I read through a cache, I can still check that the data is correct. Speed and correctness are not traded off against each other.
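Here is a simplified sketch of why reading through an untrusted cache can still be safe. It assumes the identifier is just a SHA-256 hash of the content, which is a stand-in I chose for illustration. Walrus derives blob IDs from its encoding, not from a plain content hash, so `toy_blob_id` and `verified_read` are hypothetical helpers. The principle is the same: the reader checks the bytes against the ID rather than trusting whoever served them.

```python
import hashlib
from typing import Callable


def toy_blob_id(data: bytes) -> str:
    # Stand-in identifier: a plain content hash (NOT the real Walrus blob ID).
    return hashlib.sha256(data).hexdigest()


def verified_read(fetch: Callable[[str], bytes], blob_id: str) -> bytes:
    """Fetch from any source, then refuse data that does not match the ID."""
    data = fetch(blob_id)
    if toy_blob_id(data) != blob_id:
        raise ValueError("cache returned data that does not match the blob ID")
    return data


original = b"cat picture bytes"
blob_id = toy_blob_id(original)


def honest_cache(_blob_id: str) -> bytes:
    return original


def tampered_cache(_blob_id: str) -> bytes:
    return b"something else"


print(verified_read(honest_cache, blob_id) == original)  # True
```

Calling `verified_read(tampered_cache, blob_id)` raises `ValueError`, so a fast but dishonest cache cannot pass off wrong data.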

Why This Looks Like an Operator Economy, Not Just a Protocol

Zooming out, Walrus is not only offering storage. It is enabling businesses to exist on top of storage. Publishers can specialize in fast regional uploads. Cache operators can focus on low-latency media delivery. Aggregators can offer clean APIs for developers who do not want to rebuild blobs themselves.

Walrus even documents how these services should work, which tells me the service layer is intentional, not accidental.

That is what infrastructure looks like. Roles exist. Roles have incentives. Incentives create uptime. And once uptime is someone’s job, adoption stops being theoretical.

Walrus Fits Naturally Into Normal Web Development

Another reason this service layer matters is that Walrus embraces familiar interfaces. Its documentation includes HTTP APIs for storing and reading data and for managing higher-level constructs.

From my perspective as a builder, that is huge. I do not have to twist my application into a strange workflow on day one. I can test things quickly. I can monitor endpoints. I can use tools I already trust. A simple curl-friendly endpoint lowers the barrier more than most people admit.
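As a rough illustration of how little glue code this style of API needs, the sketch below pulls a blob ID out of a publisher's JSON response and builds the matching read URL for an aggregator. The response shapes (`newlyCreated` and `alreadyCertified`), the `/v1/blobs/<blob-id>` read path, the host name, and the sample blob ID are all assumptions based on my understanding of the Walrus HTTP API, so verify them against the current documentation before relying on them.

```python
import json
from urllib.parse import quote

# Hypothetical aggregator host, used only for illustration.
AGGREGATOR = "https://aggregator.example.com"


def extract_blob_id(response_body: str) -> str:
    """Pull the blob ID out of an (assumed) publisher JSON response."""
    doc = json.loads(response_body)
    if "newlyCreated" in doc:
        return doc["newlyCreated"]["blobObject"]["blobId"]
    if "alreadyCertified" in doc:
        return doc["alreadyCertified"]["blobId"]
    raise ValueError("unrecognized publisher response")


def read_url(blob_id: str, aggregator: str = AGGREGATOR) -> str:
    # Assumed endpoint shape: GET {aggregator}/v1/blobs/{blob_id}
    return f"{aggregator}/v1/blobs/{quote(blob_id, safe='')}"


# Made-up response body and blob ID for demonstration only.
sample = '{"newlyCreated": {"blobObject": {"blobId": "abc123"}}}'
print(read_url(extract_blob_id(sample)))
```

The resulting URL is something I could paste straight into curl or a browser, which is the point of the paragraph above.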

The bigger pattern I see is that Walrus treats developer experience as part of decentralization, not something that fights against it.

Trust Is Not Only About Storage Nodes

One subtle point I appreciate in the Walrus docs is the focus on client-side errors. Encoding is done by clients, and those clients might be users, publishers, aggregators, or caches. Mistakes or malicious behavior can happen at any of those layers.

By acknowledging this, Walrus shows systems thinking. Real networks fail in messy ways. Correctness has to survive bad inputs and imperfect actors. That mindset separates demo protocols from systems that actually live in production.
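A toy example helps show how a reader can catch a client that encoded data incorrectly. The sketch below uses a deliberately tiny 2-of-3 XOR parity code and a hash-of-hashes commitment. Neither is the encoding or commitment scheme Walrus actually uses, and every function name here is hypothetical. The idea it illustrates is general: rebuild the data from whatever pieces are available, re-encode it, and compare the result with what was committed.

```python
import hashlib
from typing import Dict, List


def xor_bytes(x: bytes, y: bytes) -> bytes:
    return bytes(a ^ b for a, b in zip(x, y))


def encode(data: bytes) -> List[bytes]:
    """Toy 2-of-3 code: two data halves plus one XOR parity shard."""
    if len(data) % 2:
        data += b"\x00"  # toy padding so both halves have equal length
    half = len(data) // 2
    a, b = data[:half], data[half:]
    return [a, b, xor_bytes(a, b)]


def commit(shards: List[bytes]) -> str:
    """Toy commitment: a hash over the hashes of all shards."""
    h = hashlib.sha256()
    for shard in shards:
        h.update(hashlib.sha256(shard).digest())
    return h.hexdigest()


def read_and_check(available: Dict[int, bytes], commitment: str) -> bytes:
    """Rebuild from any two shards, re-encode, and compare to the commitment."""
    if 0 in available and 1 in available:
        a, b = available[0], available[1]
    elif 0 in available and 2 in available:
        a = available[0]
        b = xor_bytes(a, available[2])
    elif 1 in available and 2 in available:
        b = available[1]
        a = xor_bytes(b, available[2])
    else:
        raise ValueError("need at least two shards")
    data = a + b
    if commit(encode(data)) != commitment:
        raise ValueError("inconsistent encoding detected")
    return data


shards = encode(b"hello walrus")
good = commit(shards)
print(read_and_check({0: shards[0], 2: shards[2]}, good))  # b'hello walrus'
```

If a faulty or malicious client commits to a wrong parity shard, every reader's re-encoding disagrees with the commitment no matter which two shards they happen to fetch, so the bad blob is rejected rather than silently read in different ways by different readers.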

Monitoring Shows This Is Meant to Be Operated

There is a simple rule in infrastructure: if you cannot see it, you cannot run it. Walrus seems to understand this. The ecosystem already includes visualizations and monitoring tools that show nodes, publishers, and aggregators in real time.

This is not flashy marketing. It is operational necessity. When monitoring becomes a shared community tool, a network starts to feel like a system run by people, not just code sitting on paper.

The Quiet Idea That Makes Walrus Different

If I had to summarize this whole piece in one line, it would be this: Walrus is not just decentralizing disk space. It is decentralizing the entire cloud pattern around storage while keeping verifiability as the anchor.

To me, that is rare. Many projects stay pure and unusable. Others become usable and lose their guarantees. Walrus is trying to hold both at the same time.

That is why I am not skeptical. It is not that Walrus promises big storage. It is that the design reflects how the internet actually works, with services, operators, monitoring, and performance, without giving up the ability to prove what is true.

That is not generic thinking. That is real infrastructure design.