I have been tracking decentralized infrastructure projects on Sui for a while now, and @Walrus 🦭/acc caught my eye when I started looking into how AI could actually function in a truly on-chain environment. What drew me in wasn't flashy announcements but the practical problem it targets: handling large, unstructured data like massive datasets or model weights without the usual trade-offs in cost, availability, or verifiability that plague most blockchains. Over months of reading their docs, following integrations, and watching ecosystem updates, it became clear to me that Walrus is positioning itself as a core storage layer suited specifically to AI-native Web3 applications.

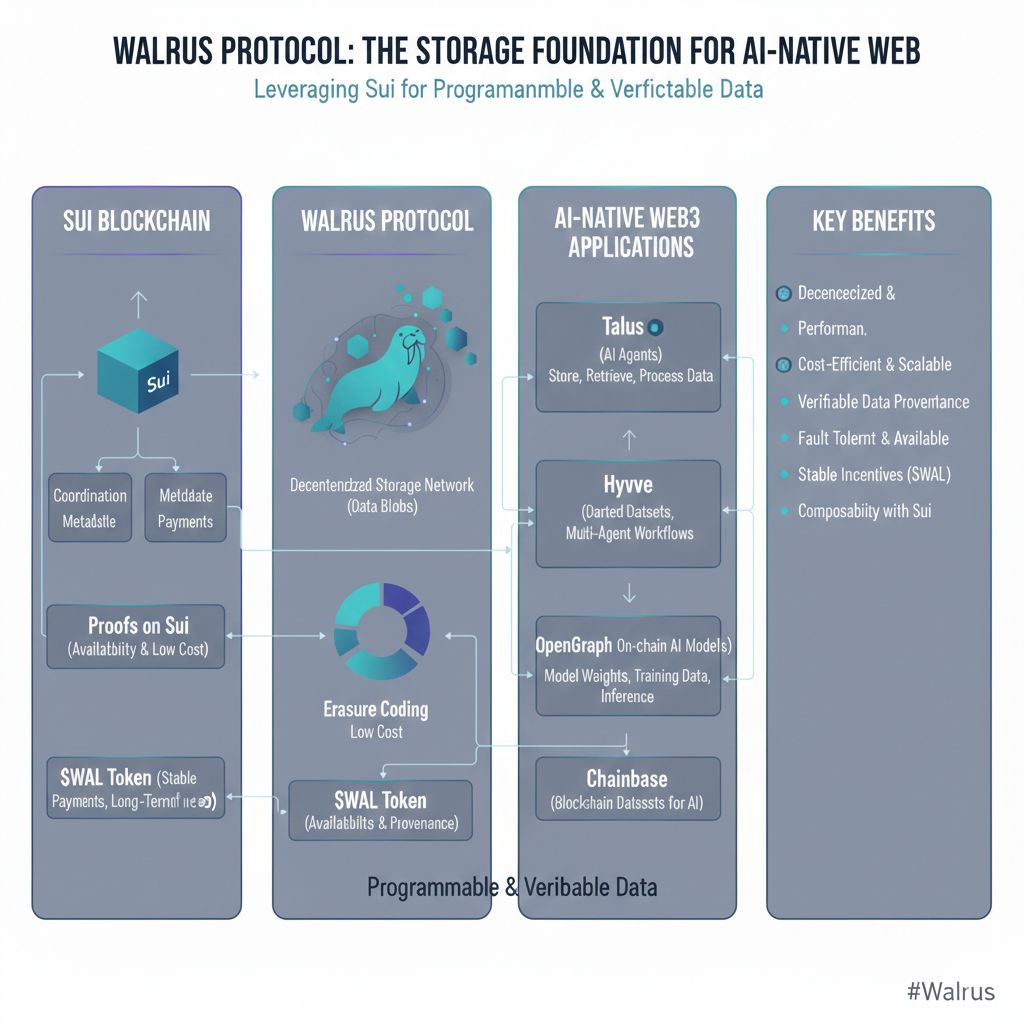

I noticed right away how Walrus leverages Sui's architecture. Built by Mysten Labs, Walrus uses Sui for coordination, metadata, and payments, while the actual data lives in a decentralized network of storage nodes. This separation makes sense for scale: Sui handles the programmable logic efficiently, and Walrus does the heavy lifting of storing blobs (binary large objects). What stood out to me was the erasure coding approach. Instead of fully replicating each file across many nodes, Walrus splits data into fragments and encodes them with redundancy, for a total storage overhead of roughly 4x to 5x the original size. That keeps costs significantly lower than protocols that replicate everything in full, while still tolerating node failures or malicious behavior. For AI use cases, where files routinely reach gigabytes or even terabytes, this efficiency matters a lot.
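To make the replication-versus-erasure-coding trade-off concrete, here is a toy sketch in Python. It uses a single XOR parity shard, the simplest possible erasure code: the data is split into k shards, one parity shard is added, and any one lost shard can be rebuilt from the others. This is not Walrus's encoding, and every function name here is my own, chosen for illustration.

```python
from __future__ import annotations

from functools import reduce


def split_into_shards(data: bytes, k: int) -> list[bytes]:
    """Split data into k equal-length shards, zero-padding the tail."""
    shard_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(shard_len * k, b"\0")
    return [padded[i * shard_len:(i + 1) * shard_len] for i in range(k)]


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> list[bytes]:
    """Return k data shards followed by one XOR parity shard (k + 1 total)."""
    shards = split_into_shards(data, k)
    return shards + [reduce(xor_bytes, shards)]


def recover(shards: list[bytes | None], original_len: int) -> bytes:
    """Rebuild the original bytes when at most one shard is missing (None)."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("a single-parity code tolerates only one lost shard")
    shards = list(shards)
    if missing:
        # The XOR of all k + 1 shards is zero, so the lost one is the XOR of the rest
        shards[missing[0]] = reduce(xor_bytes, [s for s in shards if s is not None])
    return b"".join(shards[:-1])[:original_len]  # drop parity, strip padding


def storage_overhead(k: int) -> float:
    """Bytes stored per byte of original data: (k + 1) / k."""
    return (k + 1) / k


blob = b"model weights would go here"
shards = encode(blob, k=4)
shards[2] = None  # simulate one storage node going offline
print(recover(shards, len(blob)) == blob)  # True
print(storage_overhead(4))                 # 1.25
```

With k = 4 the overhead is only 1.25x, compared with 3x for keeping three full copies, but this toy survives just one lost shard. Production systems choose codes that survive many simultaneous failures; Walrus uses a more elaborate two-dimensional scheme, which is why its overhead lands around 4x to 5x rather than close to 1x.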

I realized the real fit for AI comes from how Walrus ensures data availability and provenance. In Web3 AI apps (think autonomous agents, decentralized training, or verifiable inference), data can't just sit somewhere off-chain with fingers crossed. Walrus anchors availability proofs and blob metadata on Sui, so smart contracts can check whether a blob is available, how long its storage is guaranteed, and whether the data still matches what was originally uploaded. Developers can store clean, verified datasets or model weights with traceable origins, which helps guard against problems like data poisoning. I started thinking differently about this when I saw mentions of partners like Talus, whose AI agents use Walrus to store, retrieve, and process data on-chain seamlessly. It's not about literally storing everything on-chain, but about making large data programmable and verifiable through Sui's object model.
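Here is a minimal sketch of the availability and integrity checks in that paragraph. It assumes a simplified, hypothetical record holding a content digest and an end epoch. Walrus's real blob IDs and Sui objects are structured differently, and in practice the check would run in a Move contract or client SDK rather than Python. SHA-256 is only a stand-in for a content-derived identifier, and `BlobRecord`, `register`, `is_available`, and `verify` are illustrative names.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class BlobRecord:
    """Hypothetical on-chain record for a stored blob (not Walrus's real schema)."""
    content_digest: str  # stand-in for a content-derived blob ID
    end_epoch: int       # first epoch in which storage is no longer guaranteed


def digest(data: bytes) -> str:
    """SHA-256 hex digest, used here as a simple content identifier."""
    return hashlib.sha256(data).hexdigest()


def register(data: bytes, end_epoch: int) -> BlobRecord:
    """Record the digest at upload time along with the paid-for storage period."""
    return BlobRecord(content_digest=digest(data), end_epoch=end_epoch)


def is_available(record: BlobRecord, current_epoch: int) -> bool:
    """True while the paid storage period has not yet ended."""
    return current_epoch < record.end_epoch


def verify(record: BlobRecord, retrieved: bytes) -> bool:
    """True if the retrieved bytes match the digest recorded at upload."""
    return digest(retrieved) == record.content_digest


weights = b"\x00\x01\x02 fake model weights"
rec = register(weights, end_epoch=53)
print(is_available(rec, current_epoch=50))  # True
print(verify(rec, weights))                 # True
print(verify(rec, weights + b"poisoned"))   # False
```

Because the identifier is derived from the bytes themselves, a poisoned or swapped dataset fails verification even if whoever serves it claims it is the original.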

Another thing that became apparent is the support for emerging AI and Web3 patterns. Projects like Hyvve build decentralized data marketplaces on Sui, curating datasets through multi-agent workflows and storing them on Walrus so they can be purchased and used for training. OpenGraph deploys AI models on-chain, using Walrus for cost-effective storage of weights and training data so inference doesn't depend on central choke points. Chainbase also integrates Walrus to store massive blockchain datasets that feed into AI pipelines. These aren't hypothetical; they're live examples of Walrus turning storage into a reliable foundation rather than a bottleneck.

From my observations, the $WAL token ties this together in practice. It is used to pay upfront for fixed-duration storage, and those funds are distributed to storage nodes over time, which creates stable incentives. This predictability is key for AI developers planning long-term workloads: no surprise gas spikes derailing a training run. The protocol's focus on low-cost, high-availability blob storage makes it feasible to build things like open data economies or trustworthy AI outputs, where provenance and access matter as much as the computation itself.
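The "pay upfront, release over time" model is easy to sketch with integer arithmetic. This assumes a hypothetical setup in which a prepaid amount (in the token's smallest unit) is released evenly per epoch, and each epoch's release is split among nodes by integer weights such as the number of shards they hold. The real Walrus distribution also involves staking and protocol parameters that I'm not modeling, and both function names are illustrative.

```python
from __future__ import annotations


def per_epoch_release(total_payment: int, epochs: int) -> list[int]:
    """Split a prepaid amount into per-epoch releases (hypothetical model).
    Any remainder from integer division goes to the earliest epochs."""
    if epochs <= 0:
        raise ValueError("epochs must be positive")
    base, remainder = divmod(total_payment, epochs)
    return [base + (1 if i < remainder else 0) for i in range(epochs)]


def split_among_nodes(amount: int, weights: dict[str, int]) -> dict[str, int]:
    """Divide one epoch's release among nodes in proportion to integer weights
    (hypothetical, e.g. shards held). Rounding leftovers go to the heaviest nodes."""
    total_weight = sum(weights.values())
    payouts = {node: amount * w // total_weight for node, w in weights.items()}
    leftover = amount - sum(payouts.values())  # always fewer units than nodes
    for node in sorted(weights, key=weights.get, reverse=True)[:leftover]:
        payouts[node] += 1
    return payouts


releases = per_epoch_release(1000, epochs=3)
print(releases)  # [334, 333, 333]
print(split_among_nodes(releases[0], {"node-a": 2, "node-b": 1, "node-c": 1}))
# {'node-a': 168, 'node-b': 83, 'node-c': 83}
```

Working in integer units and assigning remainders explicitly means nothing is lost to rounding: the releases always add up to exactly what the user paid, which is the kind of predictability the paragraph is describing.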

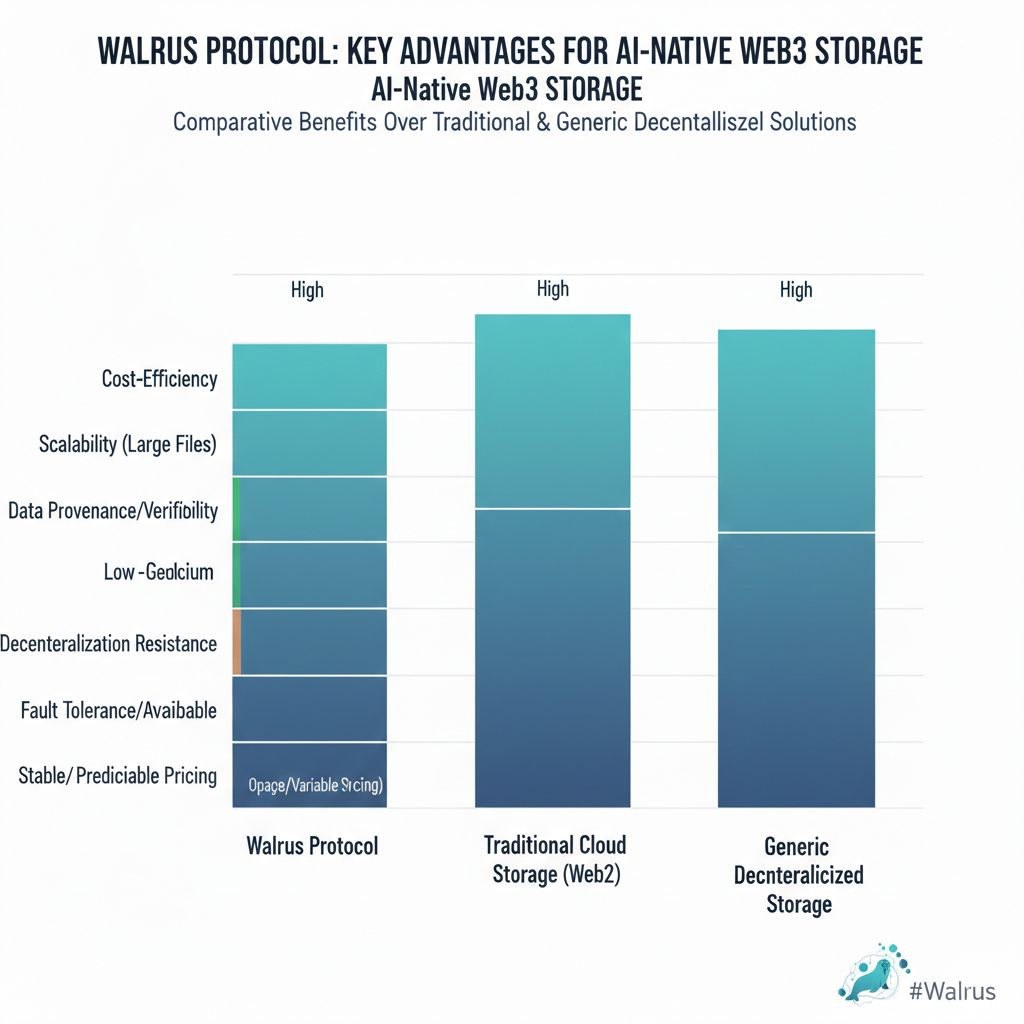

Reflecting on broader trends, traditional cloud storage dominates Web2 AI, but it introduces centralization risks: censorship, downtime, and opaque billing. Walrus addresses this by making storage decentralized yet performant, with features like Seal adding access controls for sensitive data (think proprietary models or private datasets). It's chain-agnostic at the storage layer but deeply composable with Sui, which gives it an edge in speed and programmability.
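As I understand Seal's general pattern, data is encrypted before it is stored, and decryption keys are only released when an on-chain policy approves the requester. The sketch below models just the policy gate, assuming a hypothetical allowlist with an optional expiry epoch. It is not Seal's API: real Seal policies are written in Move and enforced by key servers, and `AllowlistPolicy` and `release_key` are names I made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AllowlistPolicy:
    """Hypothetical access policy for an encrypted blob (not Seal's real API)."""
    owner: str
    allowed: set[str] = field(default_factory=set)
    expires_at_epoch: int | None = None  # None means access never expires

    def grant(self, caller: str, address: str) -> None:
        """Only the owner may add addresses to the allowlist."""
        if caller != self.owner:
            raise PermissionError("only the owner can grant access")
        self.allowed.add(address)

    def approves(self, requester: str, current_epoch: int) -> bool:
        """Deny everyone after expiry; otherwise allow the owner and allowlist."""
        if self.expires_at_epoch is not None and current_epoch >= self.expires_at_epoch:
            return False
        return requester == self.owner or requester in self.allowed


def release_key(policy: AllowlistPolicy, requester: str,
                current_epoch: int, key: bytes) -> bytes | None:
    """Stand-in for a key server: return the decryption key only if the
    policy approves. The encrypted blob itself can stay publicly retrievable."""
    return key if policy.approves(requester, current_epoch) else None


policy = AllowlistPolicy(owner="0xlab", expires_at_epoch=100)
policy.grant("0xlab", "0xpartner")
key = b"k" * 32
print(release_key(policy, "0xpartner", 42, key) is not None)  # True
print(release_key(policy, "0xstranger", 42, key))             # None
print(release_key(policy, "0xpartner", 100, key))             # None (expired)
```

The appeal of this split is that storage nodes never need to know who is allowed to read a proprietary model; they serve ciphertext to anyone, and access control lives entirely in the policy that gates the key.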

So Walrus isn't trying to be everything to everyone; it's refining decentralized storage for the data-intensive reality of AI in Web3. Through integrations with projects tackling decentralized agents, marketplaces, and verifiable compute, it's emerging as a foundational piece: reliable enough for real builders and efficient enough to scale. If you're exploring where AI and Web3 truly intersect, this protocol's design makes a compelling case.