Most distributed systems don’t fail loudly at first. They hesitate. A message arrives late. A validator goes quiet for a few seconds longer than expected. Nothing looks broken, but something feels slightly off. PlasmaBFT is built with that quiet uncertainty in mind. It does not assume clean edges or perfect behavior. It assumes the network will wobble, often in small, inconvenient ways.

That assumption changes the tone of the design. Instead of chasing ideal conditions, PlasmaBFT treats failure as part of the normal texture of the system. Not dramatic failure, but the slow, everyday kind. Machines reboot. Operators make mistakes. Latency drifts. If the protocol cannot live with those realities, it probably will not last.

What matters is not avoiding failure, but how the system responds when it shows up.

Validator Downtime Handling:

Downtime is rarely malicious. More often, it’s boring. A server update runs too long. A data center hiccups. A validator misses a few rounds and then comes back like nothing happened.

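PlasmaBFT allows for that kind of absence. Consensus does not require everyone to be present at all times. As long as more than two-thirds of the voting power stays responsive, the chain can keep moving. That threshold is not arbitrary. It is the smallest quorum size for which any two quorums must overlap in at least one honest validator, provided fewer than one-third of validators are faulty. That overlap is what lets safety hold even while a minority of just under one-third disappears for a while.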
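The threshold check itself is small enough to write down. The sketch below assumes each validator's voting power is a known integer, for example proportional to stake. The function name `has_quorum` and the dictionary layout are hypothetical illustrations, not PlasmaBFT's actual interface.

```python
def has_quorum(voting_power: dict[str, int], responsive: set[str]) -> bool:
    """Return True if responsive validators hold strictly more than 2/3 of total voting power.

    Hypothetical helper: voting_power maps validator id -> integer power.
    """
    total = sum(voting_power.values())
    online = sum(power for v, power in voting_power.items() if v in responsive)
    # Compare with integers to avoid float rounding: online / total > 2/3  <=>  3 * online > 2 * total
    return 3 * online > 2 * total


# Weighted example: losing the 10-power validator is fine, losing the 40-power one stalls progress.
power = {"a": 40, "b": 30, "c": 20, "d": 10}
print(has_quorum(power, {"a", "b", "c"}))  # 90 of 100
print(has_quorum(power, {"b", "c", "d"}))  # 60 of 100
```

The comparison is strict, so exactly two-thirds is not enough. With weighted voting power, *which* validators go offline matters as much as *how many*.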

What is interesting is what PlasmaBFT does not do. It does not panic. A missing validator is not immediately ejected or heavily punished. The protocol keeps going and works around the gap. If the absent validator is due to propose, that round times out and the next proposer takes over. Over longer periods, persistent downtime becomes a governance and incentive question rather than a purely technical one.
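The distinction between tolerating short absences and surfacing persistent ones can be illustrated with a sliding-window tracker. This is a hypothetical sketch. The class name, the window size, and the miss threshold are all illustrative assumptions. Flagging here only *reports* a validator for review and never removes or penalizes it, which mirrors the hands-off behavior described above.

```python
from collections import deque


class DowntimeTracker:
    """Hypothetical sketch: record per-round participation and flag persistent absentees.

    window and max_missed are illustrative parameters, not PlasmaBFT settings.
    Flagging only reports a validator; nothing is ejected or slashed here.
    """

    def __init__(self, window: int = 100, max_missed: int = 50) -> None:
        self.window = window
        self.max_missed = max_missed
        self.history: dict[str, deque[bool]] = {}

    def record_round(self, validators: set[str], signers: set[str]) -> None:
        # Append True if the validator signed this round, False if it was absent.
        # deque(maxlen=window) silently drops rounds older than the window.
        for v in validators:
            h = self.history.setdefault(v, deque(maxlen=self.window))
            h.append(v in signers)

    def flagged(self) -> set[str]:
        # Report only validators absent for more than max_missed of the recent rounds.
        return {v for v, h in self.history.items() if h.count(False) > self.max_missed}
```

Because old rounds fall out of the window, a validator that misses a few rounds and then returns will stop being flagged on its own. What to do with a validator that *stays* flagged is left to governance.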

There is a subtle risk here. Networks tend to reward those who can stay online no matter what. Over time, this can favor larger operators with better infrastructure. PlasmaBFT does not solve that problem on its own. It simply exposes it, leaving the community to decide how much concentration is acceptable.

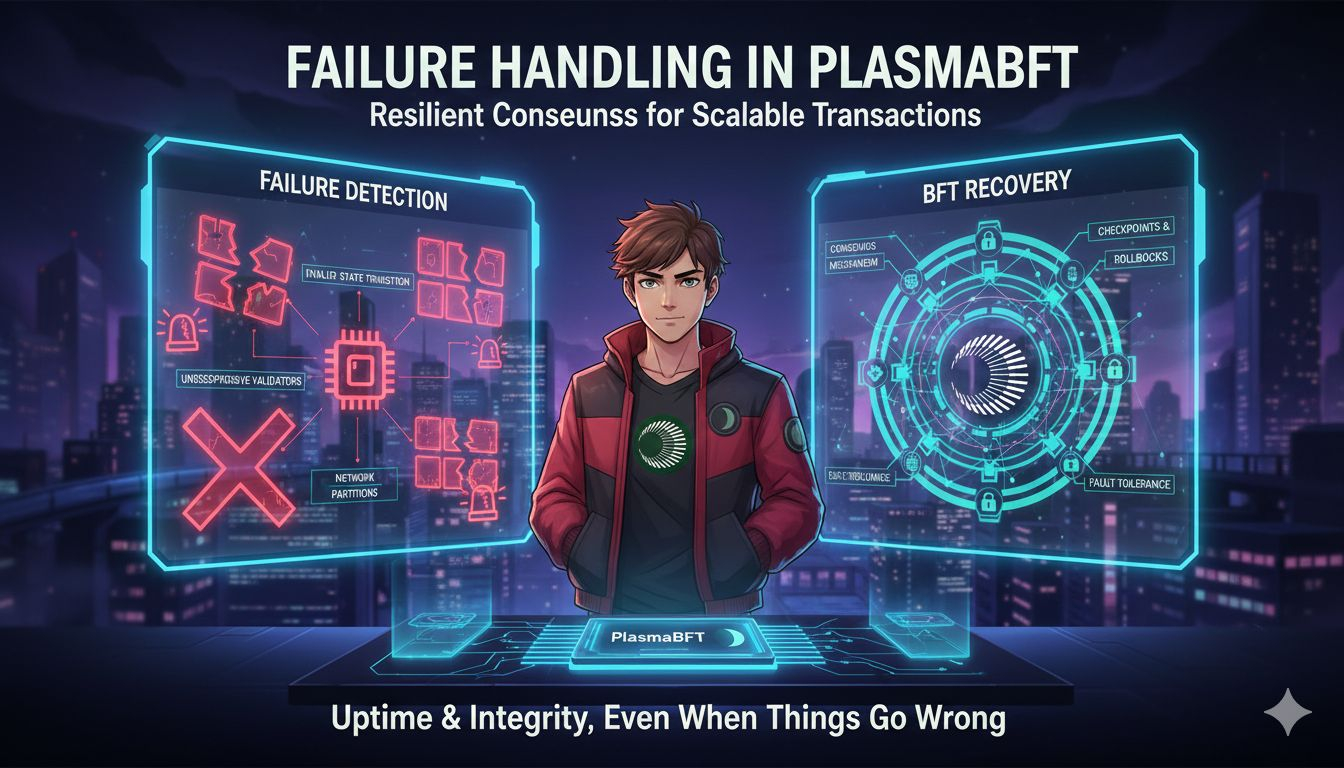

Byzantine Behavior Response:

Malicious behavior is rarer, but more dangerous. Unlike downtime, it is intentional and often strategic. A validator that signs conflicting messages or tries to confuse the network is not just unreliable. It is actively harmful.

PlasmaBFT responds to this with evidence rather than assumption. Every vote and proposal is signed, so conflicting behavior leaves cryptographic fingerprints. If a validator signs two conflicting votes or proposals in the same round (known as equivocation), the pair of signed messages proves the contradiction, and any node can verify and share that proof.
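Detecting equivocation amounts to remembering the first signed vote from each validator in each round and checking later votes against it. The sketch below is hypothetical: the `Vote` and `EquivocationEvidence` types are illustrative. It also *assumes* signatures have already been verified against each validator's public key upstream, so signature checking is omitted.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Vote:
    validator: str
    round_num: int
    block_hash: str
    signature: bytes  # assumed already verified against the validator's public key


@dataclass(frozen=True)
class EquivocationEvidence:
    first: Vote
    second: Vote


class EquivocationDetector:
    """Hypothetical sketch: keep the first vote per (validator, round) and report conflicts."""

    def __init__(self) -> None:
        self.seen: dict[tuple[str, int], Vote] = {}

    def observe(self, vote: Vote) -> Optional[EquivocationEvidence]:
        key = (vote.validator, vote.round_num)
        prior = self.seen.get(key)
        if prior is None:
            self.seen[key] = vote
            return None
        if prior.block_hash != vote.block_hash:
            # Two signed votes for different blocks in the same round: shareable proof.
            return EquivocationEvidence(prior, vote)
        return None  # a re-broadcast of the same vote is harmless
```

The evidence object carries both signed messages. That is why the contradiction can be passed to other nodes and checked independently, rather than having to be taken on trust.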

What happens next depends on the network’s rules. Slashing, removal, or loss of influence are common responses, but they are not hardcoded reactions in every case. That flexibility reflects a reality many protocols avoid admitting. Punishment is not only technical. It is political, economic, and social.

There is also an uncomfortable truth underneath. Byzantine fault tolerance works only while bad actors hold less than one-third of the voting power. If a third or more of the voting power colludes, the math no longer protects the system. PlasmaBFT does not pretend otherwise. It draws a boundary and says, beyond this point, trust breaks.
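The boundary can be made concrete with the standard quorum-intersection argument used throughout BFT consensus. This is the textbook derivation, with voting power normalized to a total of $n$. It is not a formula taken from PlasmaBFT's own specification.

```latex
\begin{aligned}
&n = \text{total voting power}, \quad f = \text{Byzantine voting power}, \quad |Q| > \tfrac{2n}{3} \text{ for every quorum } Q \\
&|Q_1 \cap Q_2| \;\ge\; |Q_1| + |Q_2| - n \;>\; \tfrac{2n}{3} + \tfrac{2n}{3} - n \;=\; \tfrac{n}{3} \\
&f < \tfrac{n}{3} \;\Rightarrow\; |Q_1 \cap Q_2| > f \;\Rightarrow\; Q_1 \cap Q_2 \text{ contains honest voting power}
\end{aligned}
```

An honest validator never votes for two conflicting blocks in the same round. So when $f < n/3$, two conflicting blocks cannot both gather a quorum. Once $f \ge n/3$, the overlap can consist entirely of Byzantine voting power, and that guarantee is gone.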

Consensus Timeout Logic:

Time is an underappreciated failure mode. Messages arrive eventually, but not always when you expect them to. PlasmaBFT uses timeouts to avoid waiting forever for a world that never quite lines up.

Each round of consensus has a clock. If a proposer does not act, the system moves on. If votes arrive too slowly, the round ends and another begins. These timeouts are not fixed in stone. They can expand when the network struggles, giving slower participants room to catch up.
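One common way to let timeouts expand under stress is exponential backoff. The timeout grows after each round that ends without a decision and resets once progress resumes. The sketch below assumes that policy. The class name, the growth factor, and the millisecond values are hypothetical, and PlasmaBFT's actual schedule may differ.

```python
class RoundTimer:
    """Hypothetical round-timeout policy: grow after failed rounds, reset on progress.

    base_ms, factor and max_ms are illustrative values, not PlasmaBFT parameters.
    """

    def __init__(self, base_ms: int = 1000, factor: float = 1.5, max_ms: int = 30_000) -> None:
        self.base_ms = base_ms
        self.factor = factor
        self.max_ms = max_ms
        self.consecutive_timeouts = 0

    def current_timeout_ms(self) -> int:
        # Cap the exponent so a long outage cannot overflow the float computation.
        exponent = min(self.consecutive_timeouts, 64)
        return int(min(self.base_ms * self.factor ** exponent, self.max_ms))

    def on_timeout(self) -> None:
        # The round ended without a decision, so the next round waits longer.
        self.consecutive_timeouts += 1

    def on_commit(self) -> None:
        # A block was finalized, so return to the fast base timeout.
        self.consecutive_timeouts = 0
```

With `base_ms=1000`, `factor=2` and `max_ms=5000`, successive failed rounds wait 1000, 2000, 4000, then 5000 ms, and a commit drops the timeout back to 1000 ms. This produces the "fast, then suddenly slow" rhythm described below. Here `base_ms` and `max_ms` are the kind of knobs whose tuning decides whether a network churns or stalls.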

From the outside, this looks like uneven performance. Blocks finalize quickly for a while, then slow down without warning. That inconsistency is not a bug. It is the cost of tolerating messy conditions instead of enforcing rigid timing.

Still, timeouts are dangerous if misjudged. Too aggressive, and the network churns endlessly. Too relaxed, and everything feels stuck. PlasmaBFT leaves this balance adjustable, which is both practical and risky. A poorly tuned network can quietly degrade without ever technically failing.

Recovery Mechanisms:

Failure handling does not end when things stop working. Recovery is where systems either regain trust or lose it for good.

PlasmaBFT relies on a clear notion of finality. Once a block is finalized, it is not revisited. Validators that fall behind can sync state from peers and deterministically reach the same conclusion. There is no ambiguity about which history matters.
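Deterministic catch-up can be sketched as replaying finalized blocks in order and checking that each one extends the previous one. The block format, the `replay` function, and the toy "append transactions" state transition are hypothetical. A real node would also verify each block's finality certificate (the quorum of signatures), which this sketch omits.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class FinalizedBlock:
    height: int
    parent_hash: str
    txs: tuple[str, ...]

    def digest(self) -> str:
        data = f"{self.height}|{self.parent_hash}|{','.join(self.txs)}".encode()
        return hashlib.sha256(data).hexdigest()


GENESIS_HASH = "0" * 64


def replay(blocks: list[FinalizedBlock]) -> tuple[str, list[str]]:
    """Apply finalized blocks in order; return the tip hash and the resulting toy state."""
    tip: str = GENESIS_HASH
    state: list[str] = []
    for expected_height, block in enumerate(blocks, start=1):
        # Each block must sit at the next height and point at the current tip.
        if block.height != expected_height or block.parent_hash != tip:
            raise ValueError(f"block at height {block.height} does not extend the finalized chain")
        state.extend(block.txs)  # toy state transition: apply transactions in order
        tip = block.digest()
    return tip, state
```

Two nodes that replay the same finalized blocks end up with the same tip and the same state. A block that does not link to the current tip is rejected rather than reconciled, which reflects the rule that finalized history is never revisited.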

In more severe situations, such as extended loss of quorum, recovery becomes less automatic. Human coordination enters the picture. Validator sets may change. Parameters may be adjusted. Sometimes, the community simply has to agree on a path forward.

This is often criticized as a weakness. In practice, it reflects honesty. Fully automated recovery in the face of social collapse is mostly a myth. PlasmaBFT acknowledges that people are part of the system, especially when things go wrong.

Safety vs Liveness Trade-offs:

Every consensus protocol chooses what it fears more. PlasmaBFT fears incorrect finality more than temporary stoppage. Safety comes first.

That choice shapes the experience during stress. When conditions worsen, the network may slow or pause instead of pushing forward at all costs. Transactions wait. From a user’s perspective, this can feel like failure, even though the core guarantees remain intact.

Liveness still matters. Timeout logic, proposer rotation, and tolerance for downtime all exist to keep progress possible. But none of them override the rule that finalized data must not conflict.
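The priority ordering can be expressed as a guard that always prefers waiting, or halting, over finalizing something inconsistent. This is a hypothetical sketch of the principle, not PlasmaBFT code. The `FinalityGuard` class and the three outcomes are illustrative names.

```python
from enum import Enum


class Outcome(Enum):
    FINALIZED = "finalized"
    WAIT = "wait"  # not enough votes yet: stall rather than guess
    HALT = "halt"  # finalizing would conflict with history: stop and escalate


class FinalityGuard:
    """Hypothetical sketch: liveness yields to safety whenever the two disagree."""

    def __init__(self) -> None:
        self.finalized: dict[int, str] = {}

    def propose_finalize(self, height: int, block_hash: str, quorum_reached: bool) -> Outcome:
        if not quorum_reached:
            return Outcome.WAIT
        existing = self.finalized.get(height)
        if existing is not None and existing != block_hash:
            # A conflicting finalization is never accepted, even if it means stopping.
            return Outcome.HALT
        self.finalized[height] = block_hash
        return Outcome.FINALIZED
```

Neither `WAIT` nor `HALT` ever changes what is already finalized. From a user's seat both look like a stalled chain, but only the first kind of outcome would ever put conflicting history on the record, and the guard never produces it.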

This balance is not static. Incentives, validator behavior, and network scale all push it in different directions over time. PlasmaBFT provides a framework, not a final answer.

Risks, Gaps, and Open Questions:

PlasmaBFT’s design is careful, but care does not eliminate risk. Centralization pressure is always present. Complex recovery paths depend on governance that may not always act cleanly or quickly.

Scaling adds another layer of uncertainty. As validator counts increase and latency spreads across regions, message overhead and timeout tuning become harder problems. Early deployments suggest the system can cope, but sustained stress over years is a different test.

There is also the human factor. Slashing rules, parameter updates, and emergency decisions rely on alignment. That alignment can drift. If it does, technical correctness may not be enough to hold things together.

PlasmaBFT does not sell certainty. It works from the assumption that systems fray at the edges and that failure is rarely dramatic when it begins. If the design holds, its strength will not be obvious in perfect conditions, but in the quiet moments when things almost fall apart and then don’t.

@Plasma $XPL #plasma