How Vanar Chain Became the Smartest "Nail Seller" in the Room

The Friday Night Realization

You know that feeling when you're almost done with something massive, and then you hit a wall?

Last Friday, I was assembling an IKEA wardrobe. Beautiful piece, thick boards, gorgeous illustrations in the manual. Everything clicked together perfectly until the final step. One screw. A single, tiny screw that probably costs five cents was missing. And because of that, a $400 wardrobe wobbled like it was drunk. Completely unusable.

I threw my screwdriver across the room. I cursed. I questioned every decision that led me to this moment.

And then it hit me.

This is exactly what's happening in AI right now.

We have Marc Andreessen from a16z talking about AI solving the population crisis. We have Fetch.ai building these beautiful multi-agent collaboration systems with ASI:One, creating orchestration layers that look like magic. We have grand visions, macro theses, and cabinets that should hold the future.

But nobody's talking about the screw.

The Clever Output Trap

Let's get one thing straight. Today's AI is amazing at clever output. ChatGPT can write you a poem that makes you cry. Midjourney can create art that belongs in museums. These models can pass the bar exam, write code, and sound more articulate than most humans on their best day.

But here's the dirty secret nobody wants to admit: Clever output is not continuous operation.

I learned this the hard way reading research from late 2025. A team studying LLM agents discovered something brutal. Even if an AI has 99% accuracy on every single step, after just 100 steps its success rate drops to 36.6%. After 200 steps? 13.4%. After 500 steps? You're basically at zero (about 0.7%).

Think about that. A 99% reliable AI becomes completely unreliable after a few hundred actions.
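Those numbers follow from simple compounding: if each step succeeds independently with probability p, a chain of n steps succeeds with probability p^n. A minimal sketch, assuming independent steps (the research itself may model failures differently):

```python
def chain_success_rate(per_step_accuracy: float, steps: int) -> float:
    """Probability that every step in a chain succeeds.

    Assumes each step fails independently of the others, so the
    per-step probabilities simply multiply.
    """
    return per_step_accuracy ** steps


for n in (100, 200, 500):
    print(f"{n} steps: {chain_success_rate(0.99, n):.1%}")
# 100 steps: 36.6%
# 200 steps: 13.4%
# 500 steps: 0.7%
```

The takeaway is that small per-step error rates are not small at all once a task runs long enough; the exponent does the damage.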

The research calls this the "reliability wall." It's why AutoGPT (remember that hype?) reliably fails beyond 100 steps. It's why GPT-4 tanks on the Tower of Hanoi puzzle after 200 moves. The problem isn't intelligence. It's context collapse.

Why Agents Forget (And Why It Matters)

The technical folks call it "memory decay." I call it the goldfish problem.

When you're chatting with an AI, it feels like it remembers everything. But that's an illusion. What's actually happening is that the model is stuffing information into a "context window," basically a temporary notepad. As the conversation gets longer, that notepad fills up. Old information gets truncated, summarized, or just dumped.
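A minimal sketch of that truncation, assuming the simplest possible policy (keep the newest messages that fit a fixed budget) and approximating tokens by word count. Real systems use subword tokenizers and often summarize rather than drop; the function name `fit_to_window` and the sample conversation are hypothetical:

```python
from collections import deque


def fit_to_window(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits the budget.

    Token counts are approximated by whitespace-separated words
    (an assumption; real models use subword tokenizers).
    """
    kept: deque[str] = deque()
    used = 0
    for msg in reversed(messages):  # walk from newest to oldest
        cost = len(msg.split())
        if used + cost > max_tokens:
            break  # this message and everything older is dropped
        kept.appendleft(msg)
        used += cost
    return list(kept)


history = [
    "User: my risk tolerance is low, never use leverage",
    "Agent: noted, low risk and no leverage",
    "User: what is the price of ETH today",
    "Agent: ETH is trading near its weekly average",
    "User: should I open a position now",
]
window = fit_to_window(history, max_tokens=25)
print(window)
```

With a 25-word budget, the two oldest messages, the ones that set the risk tolerance, are exactly the ones that fall out of the window before the agent answers the final question.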

In multi-agent systems, this becomes a disaster. One researcher described watching agents in a collaborative workspace "burn out" after minutes of work. They'd forget what other agents said. They'd lose track of decisions made earlier. They'd contradict themselves or ask questions that were already answered.

Now imagine this in a financial context. You set up an AI to manage your crypto portfolio. Day 1: It remembers your risk tolerance, your goals, your preferences. Day 2: It starts forgetting details. Day 3: It can't verify data sources and just stops working.

This is what the Web3 world is facing right now. We have agents that can chat. We don't have agents that can work—not for the long haul, not reliably, not without constant babysitting.

Enter The Nail Seller

So I'm scrolling through Crypto Twitter last month, and I see this pattern. Under every a16z thread about AI saving humanity. Under every Fetch.ai announcement about agent collaboration. Under every grand vision post, there's this quiet reply from @Vanarchain:

"AI only moves productivity when it can act, remember, and operate continuously."

No hype. No emojis. No "we're going to change the world" fluff. Just that sentence. Over and over.

And I realized: these guys are playing a different game entirely.

While everyone else is building beautiful cabinets, Vanar is selling nails. Cold, hard, unsexy nails that nobody wants to think about but everybody needs.

The Intelligence Layer Nobody Asked For (But Everyone Needs)

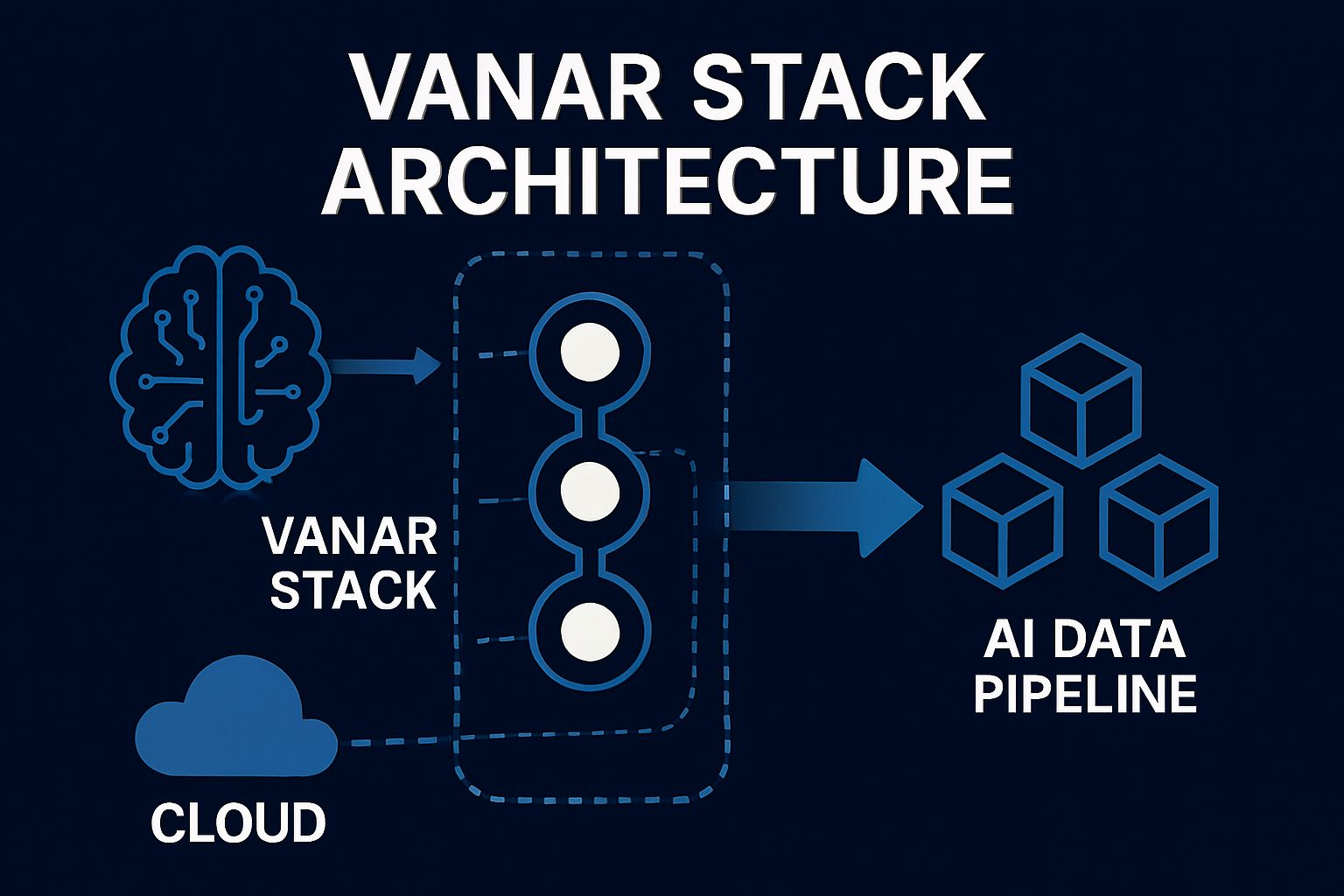

Here's where it gets interesting. Vanar isn't just talking about memory; they're building an entire intelligence layer on top of their blockchain.

They have this five-layer stack that reads like a blueprint for fixing everything that's broken with current AI agents:

Layer 1: Vanar Chain - The foundation. Fast, cheap, EVM-compatible. Nothing fancy, just solid infrastructure.

Layer 2: Neutron - This is where it gets good. Neutron takes raw data and compresses it into something called "Seeds," at a 500:1 compression ratio, and stores them directly on-chain. Not IPFS hashes. Not external links. Actual semantic memory living on the blockchain. A property deed becomes a searchable proof. A PDF becomes agent-readable memory.

Layer 3: Kayon - The reasoning engine. This thing can query that memory, validate compliance across 47+ jurisdictions, and trigger actions without needing oracles or off-chain compute. It went live in pilot mode in October 2025, letting people check balances and make transfers using natural language.

Layer 4: Axon - Intelligent automation. Workflow orchestration that doesn't lose context between steps.

Layer 5: Flows - The application layer where this all becomes PayFi, RWA tokenization, and gaming.
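The idea of orchestration that "doesn't lose context between steps" can be sketched generically as a workflow runner that checkpoints its shared context after every step. This is a hypothetical illustration, not Axon's API: `run_workflow`, the JSON checkpoint file, and the example steps are all assumptions made for the sketch.

```python
import json
import os
import tempfile
from typing import Callable

# A step reads the shared context and returns new keys to merge into it.
Step = Callable[[dict], dict]


def run_workflow(steps: list[tuple[str, Step]], checkpoint_path: str) -> dict:
    """Run named steps in order, persisting the shared context after each one.

    On restart, steps already recorded in the checkpoint are skipped and
    their outputs are reloaded from disk instead of recomputed.
    """
    context: dict = {"completed": []}
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            context = json.load(f)
    for name, step in steps:
        if name in context["completed"]:
            continue  # already done in an earlier run
        context.update(step(context))  # each step sees all prior outputs
        context["completed"].append(name)
        with open(checkpoint_path, "w") as f:
            json.dump(context, f)  # persist before moving on
    return context


calls = []  # records which steps actually executed


def load_prefs(ctx: dict) -> dict:
    calls.append("load_prefs")
    return {"risk": "low"}


def pick_asset(ctx: dict) -> dict:
    calls.append("pick_asset")
    return {"asset": "stablecoin" if ctx["risk"] == "low" else "altcoin"}


path = os.path.join(tempfile.mkdtemp(), "workflow.json")
run_workflow([("load_prefs", load_prefs)], path)  # first run stops after step 1
result = run_workflow([("load_prefs", load_prefs), ("pick_asset", pick_asset)], path)
print(result["asset"], calls)  # stablecoin ['load_prefs', 'pick_asset']
```

The second call resumes from the checkpoint: `load_prefs` is not re-run, yet `pick_asset` still sees the risk setting it produced.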

The key insight here? Memory is a first-class primitive, not an afterthought. When an agent remembers something on Vanar, it's not storing a text snippet in a database. It's creating a verifiable, on-chain, semantic Seed that other agents can query, reason over, and trust.
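To make "memory that other agents can trust" concrete, here is a generic sketch: content is compressed, keyed by the SHA-256 hash of the compressed bytes, and re-hashed on every read so tampering is detected. This is a stand-in built on assumptions, not Neutron's Seed format: `MemoryRecord`, `write_memory`, `read_memory`, and the in-memory dict standing in for on-chain storage are all hypothetical, and plain zlib is lossless general-purpose compression rather than the semantic compression described above, so it will not reach 500:1 on typical documents.

```python
import hashlib
import zlib
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryRecord:
    """Hypothetical stand-in for an on-chain memory entry (not Vanar's format)."""
    digest: str     # SHA-256 of the compressed payload, used as the lookup key
    payload: bytes  # zlib-compressed original content


def write_memory(store: dict[str, MemoryRecord], content: str) -> str:
    """Compress content, key it by its hash, and return the key."""
    payload = zlib.compress(content.encode("utf-8"), level=9)
    digest = hashlib.sha256(payload).hexdigest()
    store[digest] = MemoryRecord(digest, payload)
    return digest


def read_memory(store: dict[str, MemoryRecord], digest: str) -> str:
    """Fetch a record, verify its hash, and return the decompressed content."""
    record = store[digest]
    # Re-hash before trusting: a tampered payload no longer matches its key.
    if hashlib.sha256(record.payload).hexdigest() != digest:
        raise ValueError("memory record failed integrity check")
    return zlib.decompress(record.payload).decode("utf-8")


shared_store: dict[str, MemoryRecord] = {}
note = "Client risk tolerance: low. No leverage. Rebalance monthly. " * 50
key = write_memory(shared_store, note)          # agent A writes
assert read_memory(shared_store, key) == note   # agent B reads and verifies
ratio = len(note.encode("utf-8")) / len(shared_store[key].payload)
print(f"compression ratio ~{ratio:.0f}:1")
```

The printed ratio depends heavily on the input; this deliberately repetitive note compresses far better than a real PDF would. The part that carries over is the pattern: any agent holding the key can check that the memory it reads is the memory that was written.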

The Parasitic Narrative (And Why It's Genius)

Vanar's marketing strategy is one of the most clever things I've seen in this space.

They don't try to out-hype the a16zs of the world. They don't compete with Fetch.ai's beautiful agent collaboration demos. They simply position themselves as the prerequisite for all of it.

When a16z talks about AI solving macro problems, Vanar replies: "Cool, but can your AI remember what it decided yesterday?"

When Fetch.ai shows multi-agent orchestration, Vanar asks: "What happens when agent #47 forgets what agent #3 told it?"

They're not stealing the spotlight. They're anchoring to existing narratives with minimal words and maximum precision. It's parasitic in the best way possible, feeding off the attention of bigger players while positioning themselves as the necessary foundation.

The message is brutal and clear: Without this nail of mine, your cabinets will fall apart. It's only a matter of time.

The Price of Being Right (But Early)

Here's the uncomfortable part. This strategy has a side effect, and you can see it in the numbers.

VANRY trades around $0.007 to $0.01. The market cap sits at roughly $14-15 million. While other AI tokens pump on narrative, Vanar quietly builds. They shifted to a subscription model in November 2025. They're doing pilot integrations with natural language agents. They're planning quantum-resistant encryption for mid-2026.

But for degens looking for quick flips? This is torture. No noise. No FOMO. No "announcement of an announcement" games.

For long-term capital, though, this is a dream. A team that's actually shipping (Neutron launched in April 2025, and Kayon is rolling out through 2026) while the market ignores them because they're not sexy.

The recent AI infrastructure launch in January 2026 barely moved the price. The token is down 21% over the last month, and the technical indicators all show bearish trends across the daily, weekly, and monthly charts.