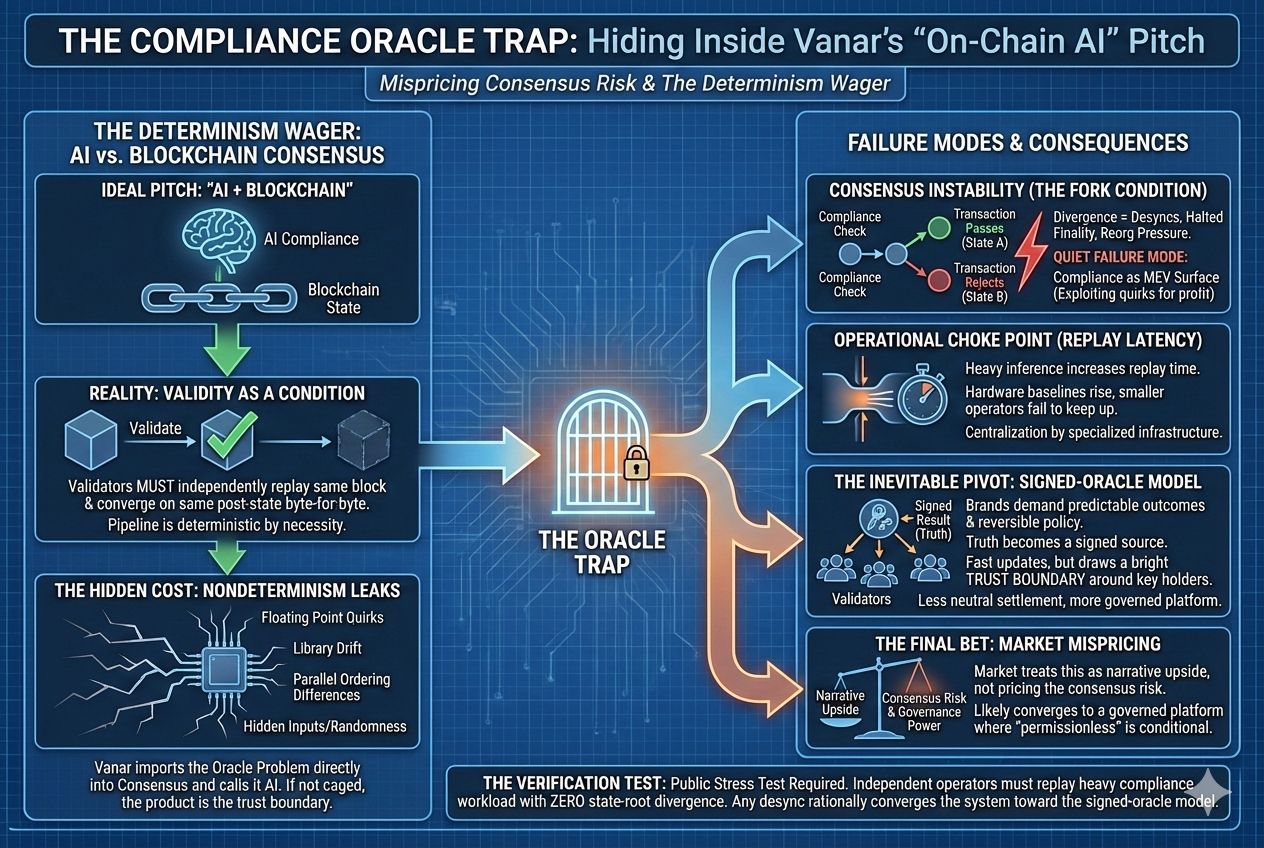

Vanar’s “on-chain AI compliance” only matters if it is actually part of the chain’s state transition, and that is exactly what I think is being mispriced. The moment compliance becomes a validity condition, the system stops being “AI plus blockchain” and becomes a determinism wager. Validators must independently replay the same block and converge on the same post-state every time, or Vanar is no longer a blockchain, it is a coordinated database with a consensus wrapper.

When I place a Kayon-style compliance call inside Vanar’s block replay, the stress point is immediate. Validity is not a vibe check, it is replay to the same state root across honest nodes. Block replay has to be deterministic by necessity. Any compliance path that depends on floating point behavior, runtime variance, or hidden inputs is a fork condition waiting for load.

This only works if every client reproduces the compliance output byte-for-byte across independent nodes. Not “close enough,” not “same label most of the time,” not “within tolerance.” The hidden cost is that real inference stacks leak nondeterminism everywhere: floating point quirks across CPU architectures, instruction set differences, library implementation drift, ordering differences under parallelism, and the occasional “harmless” randomness that slips in through the back door. If the compliance check touches anything outside explicit transaction inputs and a pinned execution environment, Vanar has imported the oracle problem directly into consensus and called it AI.
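A minimal sketch of how this bites, assuming a hypothetical compliance score that is a sum of risk weights checked against a threshold. The names `float_score`, `fixed_score`, and `THRESHOLD` are illustrative assumptions, not anything taken from Vanar or Kayon. Floating point addition is not associative, so two nodes that reduce the same inputs in a different order can land on opposite sides of the boundary, while integer fixed-point arithmetic cannot.

```python
import hashlib
import struct

# Hypothetical rule: a transaction passes if its risk score <= threshold.
THRESHOLD = 0.6
THRESHOLD_MICRO = 600_000  # the same threshold expressed in integer micro-units


def float_score(weights, order):
    """Sum the weights in the given order using IEEE 754 doubles."""
    total = 0.0
    for i in order:
        total += weights[i]
    return total


def fixed_score(weights_micro, order):
    """Sum integer micro-unit weights; integer addition is order-independent."""
    return sum(weights_micro[i] for i in order)


def digest(raw):
    """Hash the raw result bytes, standing in for a commitment in block state."""
    return hashlib.sha256(raw).hexdigest()


weights = [0.1, 0.2, 0.3]
node_a = float_score(weights, [0, 1, 2])  # (0.1 + 0.2) + 0.3
node_b = float_score(weights, [2, 1, 0])  # (0.3 + 0.2) + 0.1

# Same inputs, different reduction order: compare the verdicts and the bytes.
print("verdicts:", node_a <= THRESHOLD, node_b <= THRESHOLD)
print("same digest:",
      digest(struct.pack(">d", node_a)) == digest(struct.pack(">d", node_b)))

weights_micro = [100_000, 200_000, 300_000]
fixed_a = fixed_score(weights_micro, [0, 1, 2])
fixed_b = fixed_score(weights_micro, [2, 1, 0])
print("fixed-point agrees:", fixed_a == fixed_b, fixed_a <= THRESHOLD_MICRO)
```

The two floating point reductions disagree on the pass/fail decision for identical inputs, which is exactly the "within tolerance" gap that consensus cannot absorb. A real inference stack has many more such reductions than this three-term sum.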

Once validity depends on a compliance output, Vanar has to anchor truth somewhere. In practice, that truth is either a signed result that validators can verify cheaply, or a compliance computation constrained so hard that validators can deterministically replay it during verification. A signed source buys fast policy updates and brand-friendly control, but it draws a bright trust boundary around whoever holds the keys. A deterministic path preserves the consensus property, but it forces the AI component to behave like a rigid primitive: fixed model, fixed runtime, fixed quantization, fixed execution path, and a governance cadence slow enough to collide with product reality.
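To make the "fixed model, fixed runtime, fixed quantization" constraint concrete, here is a rough sketch of an environment pin. It assumes the chain commits to a single hash over the pinned settings and that a validator refuses to replay compliance logic unless its local environment hashes to the same value. The field names and the `env_commitment` and `can_replay` helpers are hypothetical and are not drawn from any Vanar specification.

```python
import hashlib
import json


def env_commitment(env):
    """Hash a canonical JSON encoding of the pinned environment.

    Sorting keys and fixing separators keeps the encoding byte-identical
    across nodes regardless of dictionary insertion order.
    """
    canonical = json.dumps(env, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def can_replay(local_env, chain_commitment):
    """A validator may replay only if its environment matches the pin."""
    return env_commitment(local_env) == chain_commitment


# Hypothetical pin; every value here is a placeholder for illustration.
pinned = {
    "model_sha256": "ab" * 32,
    "runtime": "example-runtime-1.0",
    "quantization": "int8",
    "seed": 0,
}
chain_commitment = env_commitment(pinned)

# Same pins in a different key order still match the commitment.
reordered = dict(reversed(list(pinned.items())))
# A minor runtime bump does not.
drifted = dict(pinned, runtime="example-runtime-1.1")

print(can_replay(reordered, chain_commitment))
print(can_replay(drifted, chain_commitment))
```

The point of the sketch is the governance cost: any model or runtime update changes the commitment, so every validator has to upgrade in lockstep, which is the slow cadence described above.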

I keep coming back to the operational surface area: if Vanar makes validators run heavy inference inside block execution, replay latency becomes the choke point. Hardware baselines rise, propagation slows, and smaller operators start missing deadlines or voting on blocks they cannot fully reproduce in time. At that point the validator set does not compress because people lose interest, it compresses because being honest requires specialized infrastructure.

If Vanar avoids that by keeping inference off-chain, then the compliance decision arrives as an input. The chain can still enforce that deterministically, but the market should stop calling it on-chain AI in the strong sense. It is on-chain enforcement of an off-chain judgment. In that world, VANRY is not pricing a chain that solved compliance, it is pricing a chain that chose who decides compliance, how disputes are handled, and how quickly the rules can be changed.

The trade-off is sharper than people want to admit. If Vanar optimizes for real-world brands, they will demand reversible policy updates, emergency intervention, and predictable outcomes under ambiguity. Those demands push you toward an upgradeable compliance oracle with privileged keys, because brands do not want edge cases to brick their economy. The more privileged that layer becomes, the less credible Vanar is as neutral settlement, and the more it behaves like a governed platform where “permissionless” is conditional.

Where this breaks is ugly and specific: consensus instability via compliance divergence. Two validator clients execute the same block and disagree on whether a transaction passes a compliance check. One applies it, one rejects it. That is not a policy disagreement, it is a fork condition. Even if the chain does not visibly split, you get desyncs, halted finality, reorg pressure, and the kind of operational instability that makes consumer apps quietly back away.
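The divergence can be shown with a toy model. This sketch assumes a state that is just a balance map, a state root that is a hash of its canonical encoding, and two hypothetical client implementations whose compliance checks differ only at the boundary (`<=` versus `<`). None of these names come from Vanar; they exist only to show how a one-character disagreement becomes two different roots.

```python
import hashlib
import json


def state_root(balances):
    """Toy state root: SHA-256 over a canonical JSON encoding of balances."""
    canonical = json.dumps(balances, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def apply_block(balances, txs, is_compliant):
    """Apply transfers that pass the compliance check; skip the rest."""
    new = dict(balances)
    for tx in txs:
        if not is_compliant(tx):
            continue  # rejected by this client's compliance path
        if new.get(tx["from"], 0) < tx["amount"]:
            continue  # insufficient funds
        new[tx["from"]] -= tx["amount"]
        new[tx["to"]] = new.get(tx["to"], 0) + tx["amount"]
    return new


# Two hypothetical clients that disagree only on the exact boundary value.
def client_a_check(tx):
    return tx["risk_micro"] <= 600_000


def client_b_check(tx):
    return tx["risk_micro"] < 600_000


genesis = {"alice": 100, "bob": 0}
block = [{"from": "alice", "to": "bob", "amount": 40, "risk_micro": 600_000}]

root_a = state_root(apply_block(genesis, block, client_a_check))
root_b = state_root(apply_block(genesis, block, client_b_check))
print("roots match:", root_a == root_b)
```

Client A applies the transfer and client B skips it, so the same block yields two post-states. Nothing about the transaction is malicious; the fork comes entirely from the compliance path.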

There is also a quieter failure mode I would watch for: compliance as MEV surface. If the compliance layer can be influenced by ordering, timing, or any mutable external signal, sophisticated actors will treat it like a lever. They will route transactions to exploit pass/fail boundaries, force enforcement outcomes that look like benign compliance, and manufacture edge cases that only a few searchers understand. The chain leaks value to whoever best models the compliance engine’s quirks, which is the opposite of adoption at scale.

I will upgrade my belief only when Vanar can run a public stress test where independent operators replay the same compliance-heavy workload and we see zero state-root divergence, zero client-specific drift, and no finality hiccups attributable to compliance execution. If we see even one meaningful desync tied to compliance execution, the system will rationally converge toward the signed-oracle model, because it is the only stable way to keep verification deterministic under pressure.
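The check I have in mind for such a test is simple to state. As a sketch, assume each operator publishes the state root it computed at every block height of the shared workload; the `first_divergence` helper and the report format below are my own illustration, not an existing Vanar tool.

```python
def first_divergence(reports):
    """Find the first height at which operators disagree on the state root.

    reports maps an operator name to its list of state roots, indexed by
    height within the test workload. Returns (height, groups), where groups
    maps each distinct root to the operators that reported it, or None if
    every operator agrees over the heights they all cover.
    """
    if not reports:
        return None
    shortest = min(len(roots) for roots in reports.values())
    for height in range(shortest):
        groups = {}
        for operator, roots in sorted(reports.items()):
            groups.setdefault(roots[height], []).append(operator)
        if len(groups) > 1:
            return height, groups
    return None


# Made-up roots for illustration only.
reports = {
    "op-1": ["r0", "r1", "r2", "r3"],
    "op-2": ["r0", "r1", "r2", "r3"],
    "op-3": ["r0", "r1", "x2", "x3"],
}
print(first_divergence(reports))
```

A passing stress test is one where this returns `None` for every client implementation and hardware profile in the set, under load, over a long enough run to be meaningful.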

My bet is the market is underestimating how quickly this converges to the oracle model once real money and real brands show up. Deterministic consensus does not negotiate with probabilistic judgment. If Vanar truly pushes AI compliance into the execution layer, decentralization becomes a function of how tightly it can cage nondeterminism. If it does not, then the product is the trust boundary, not the AI. Either way, the mispricing is treating this as narrative upside instead of pricing the consensus risk and governance power that come with it.