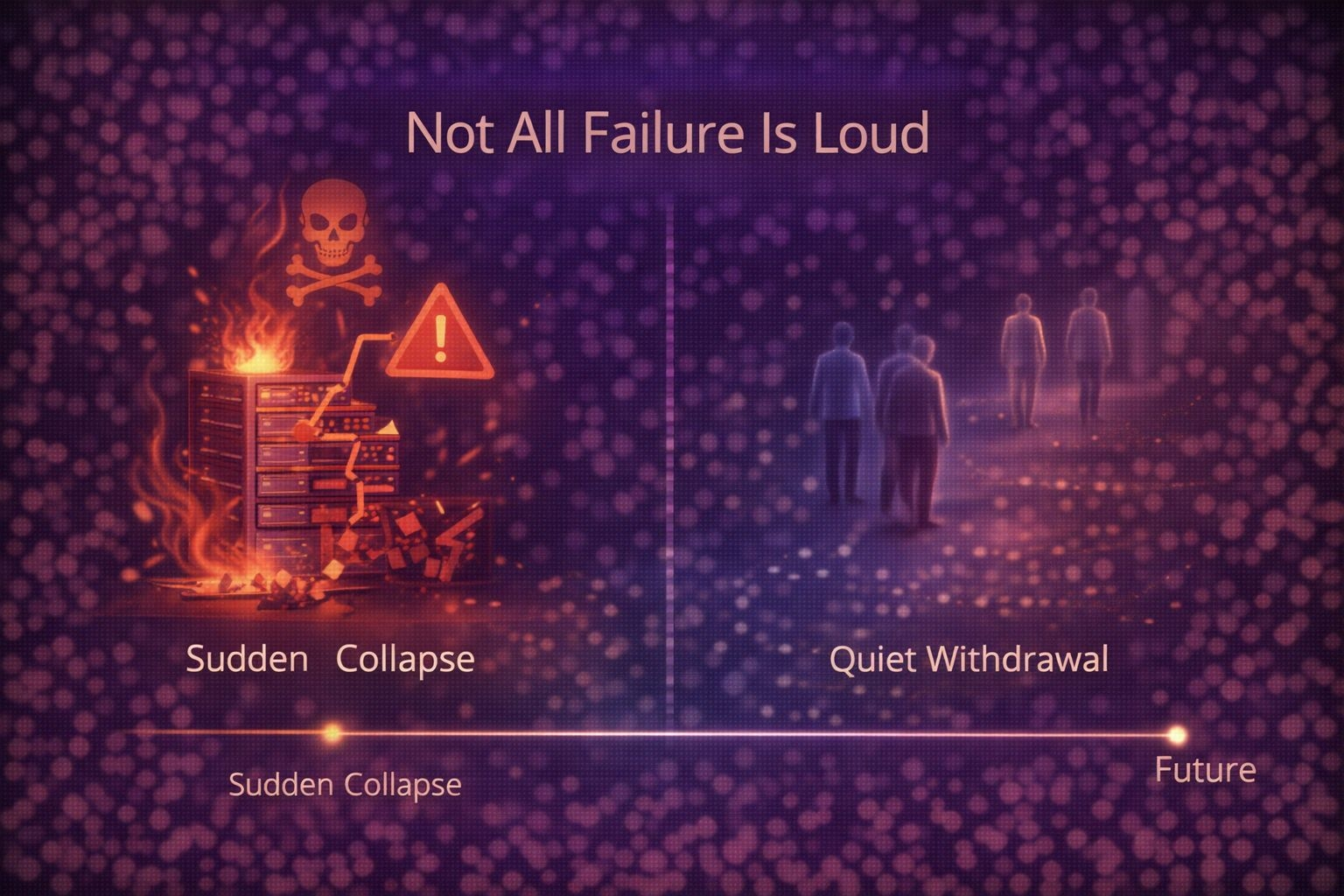

When people talk about failure in crypto, they usually mean something loud. A chain goes down. A bridge is exploited. Data disappears. Tokens crash. Failure is imagined as an event: a moment you can point to on a chart or a timeline and say, “That’s where it broke.” But when I started thinking seriously about Walrus, it became clear that the most dangerous kind of failure doesn’t look like that at all. It looks quiet. It looks like people slowly walking away.

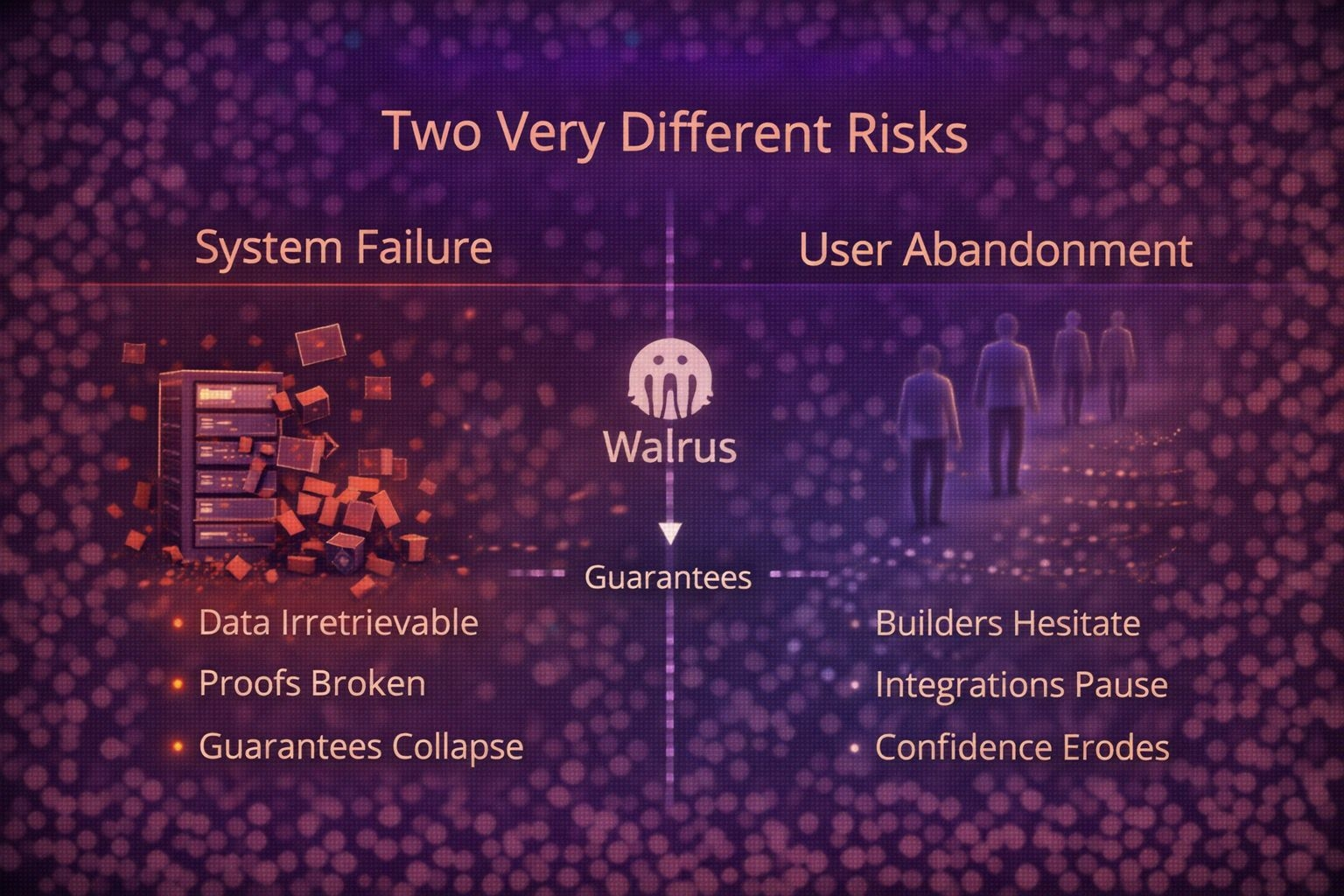

System failure and user abandonment often get lumped together, but they are not the same thing. A system can technically survive while its users lose confidence. And a system can suffer visible disruptions without actually failing at what it was designed to guarantee. Walrus sits right in the middle of that tension, and understanding the difference is crucial to understanding what success or failure really means for decentralized infrastructure.

A system failure is easy to define in theory. For Walrus, it would mean data becoming irretrievable, proofs breaking, or guarantees collapsing in a way that cannot be repaired. That kind of failure is catastrophic and unmistakable. The system would no longer be doing the one thing it exists to do. Everything else (performance dips, node churn, uneven access patterns) is secondary. Walrus is built on the assumption that chaos is normal and that the only unforgivable outcome is permanent loss of integrity.

User abandonment, on the other hand, is much harder to detect. It doesn’t come with alarms. There’s no single metric that screams “people have lost faith.” It happens gradually. Builders stop experimenting. Integrations get delayed. Conversations move elsewhere. The system might still be functioning exactly as designed, but it feels empty. And unlike a system failure, abandonment can’t be patched with code.

This distinction matters because Walrus was never designed to optimize for comfort or familiarity. It doesn’t promise smooth, predictable usage patterns. It doesn’t try to make data access feel gentle or human-friendly. It’s designed to remain correct under stress, not to reassure observers that everything looks fine. That’s a dangerous posture in an ecosystem where perception often matters more than guarantees.

From the outside, it’s easy to misinterpret this. When usage patterns look irregular or when activity doesn’t follow a clean growth curve, critics start asking whether something is wrong. Is the system unstable? Is adoption stalling? But those questions assume that healthy systems look busy and unhealthy ones look quiet. Walrus breaks that assumption. A spike in access could mean an AI system suddenly querying massive datasets. A drop in visible activity could mean nothing at all. The surface signals are unreliable by design.

This is where user abandonment becomes the real risk. Not because the system failed, but because people couldn’t tell whether it was succeeding.

Most users are conditioned to read health through responsiveness and familiarity. They expect clear dashboards, stable baselines, and narratives that explain what’s happening. Walrus doesn’t offer that kind of clarity. It offers invariants. Data is there or it isn’t. Proofs work or they don’t. Everything else lives in a gray area that requires trust in the design rather than comfort in the experience.

For builders, this creates a subtle challenge. If you’re building on Walrus, you’re not promised a calm environment. You’re promised a correct one. That’s a powerful guarantee, but it’s also an abstract one. When something feels uncomfortable, when access patterns are strange or performance looks uneven, it’s tempting to interpret that discomfort as failure, even if none of the core guarantees have been violated.

This is where abandonment can creep in. Not through dramatic exits, but through hesitation. Through projects deciding to “wait and see.” Through teams choosing environments that feel more predictable, even if they are less robust under real stress. Over time, that hesitation compounds. The system keeps working, but fewer people are around to notice.

The irony is that Walrus is likely to be most valuable precisely in the scenarios that feel the least reassuring. Machine-driven workloads don’t behave politely. AI agents, indexing systems, and autonomous processes don’t follow human rhythms. They generate bursts, gaps, and patterns that look broken if you expect smoothness. Walrus was built for that world. But most users are still thinking in human terms, judging success by how calm things appear.

This creates a mismatch between design intent and user expectation. Walrus expects unpredictability. Users expect signals. When those expectations collide, abandonment becomes possible even in the absence of failure.

What makes this particularly tricky is that abandonment doesn’t show up immediately. A system can lose momentum long before it loses function. By the time people agree that something “failed,” the damage is already done: not to the code, but to confidence. And confidence is not something decentralized systems can easily reclaim once it’s gone.

At the same time, it’s important to recognize that Walrus’s design intentionally resists pandering to comfort. Adding artificial signals of health, smoothing over irregular behavior, or optimizing for optics would undermine the very philosophy the system is built on. Walrus doesn’t want to look stable; it wants to be invariant. That choice filters out certain users while attracting others: those who care more about guarantees than appearances.

This suggests that the real question isn’t whether Walrus can avoid system failure. It’s whether it can communicate its definition of success clearly enough to prevent abandonment by misunderstanding. Not everyone needs to use Walrus. But the people who do need to understand that silence, irregularity, and lack of spectacle are not warning signs. They’re side effects of a system that refuses to assume how it will be used.

Over time, this may become Walrus’s quiet advantage. As decentralized infrastructure becomes more machine-oriented, fewer users will judge systems by how they feel and more by whether they hold under pressure. In that world, abandonment may become less likely, because the users who remain will be aligned with the system’s values from the start.

But until then, the gap between failure and abandonment remains a fragile space. Walrus can survive technical shocks. It’s built for that. The harder challenge is surviving interpretation: ensuring that quiet operation isn’t mistaken for irrelevance, and that discomfort isn’t mistaken for collapse.

In the end, system failure is a binary outcome. Either the guarantees hold or they don’t. User abandonment is softer, slower, and far more human. It happens when expectations drift away from reality. Walrus sits at that boundary, daring users to rethink what success looks like when a system refuses to perform for reassurance.

The danger isn’t that Walrus will fail loudly. It’s that it will succeed quietly, and that not everyone will know how to recognize the difference.