In crypto, forking has become almost invisible. It is so common that people rarely question it anymore. A new chain launches, the codebase looks familiar, parameters are tweaked, consensus is adjusted, and a new narrative is wrapped around it. Sometimes this approach works, especially when the goal is incremental improvement or faster experimentation. But there is a quiet cost to inheriting assumptions that were never designed for your problem in the first place.

VANAR exists because some problems cannot be solved by modifying someone else’s blueprint.

Most forked systems begin with a tradeoff that is already locked in. The original architecture was designed for a specific era, a specific use case, or a specific worldview. Even if the code is open, the mental model behind it is not easily changed. Developers end up working around constraints instead of questioning them. Over time, complexity accumulates, and systems grow brittle.

VANAR’s decision to build from the ground up reflects a different way of thinking. It starts by asking a more uncomfortable question: what if the assumptions behind most existing chains are no longer valid?

The answer becomes clearer when you look at how technology has shifted in the last few years. Execution is cheap. Compute is abundant. Models are powerful. Yet systems still feel fragile. Context disappears. Memory resets. Intelligence does not compound. Users repeat themselves. Builders rebuild the same logic across applications because infrastructure does not support continuity.

These problems are not bugs. They are symptoms of architectures that were never designed for persistent intelligence.

Forked chains inherit execution-first thinking. They optimize how fast transactions move, how cheaply state changes are recorded, and how deterministically systems behave. These are important qualities, but they assume that applications are mostly stateless and that intelligence lives outside the chain. That assumption breaks down once you expect systems to learn, adapt, and operate autonomously over time.

VANAR begins from a different premise. It assumes that intelligence is not an add-on. It assumes that memory, context, and reasoning are not application-level conveniences, but infrastructure-level requirements. Once you accept that premise, forking becomes less useful. You are no longer optimizing an existing design. You are redefining the foundation.

This is where the difference becomes structural rather than philosophical.

A fork allows you to change parameters. A ground-up design allows you to change relationships. VANAR was built to align how data, execution, and intelligence interact, not just how transactions are processed. Memory is not something bolted on through external databases. Context is not reconstructed through brittle logs. Reasoning is not simulated through repeated prompts. These elements are treated as native properties of the system.

That choice has cascading effects.

When memory is native, systems stop resetting. Agents do not lose identity between actions. Decisions can be traced back to intent rather than inferred after the fact. Builders no longer need to create their own persistence layers for every application. The infrastructure carries that burden.

Forked systems struggle here because memory was never part of their original threat model or trust model. They were built to minimize state complexity, not to preserve evolving context. Retrofitting this behavior often leads to fragmented solutions that work in isolation but fail at scale.

Context is another area where ground-up design matters. Context gives meaning to actions. Without it, systems behave mechanically. Forked architectures treat context as something external, something applications manage on their own. VANAR treats context as shared infrastructure. This allows agents and applications to operate coherently across tools and environments.

The impact of this design choice becomes obvious when you think about user experience. Most users do not care about chains. They care about whether systems remember them, whether actions feel consistent, and whether tools improve over time. UX suffers when infrastructure forgets. No amount of front-end polish can compensate for a system that resets its understanding every time.

By designing around continuity, VANAR improves UX indirectly but profoundly. Users stop re-explaining themselves. Builders stop re-implementing the same logic. Systems start to feel reliable rather than experimental.

Another reason VANAR could not simply fork is coordination.

Modern systems rarely live on one chain. They span multiple environments, tools, and execution layers. Forked chains often assume they are the center of the universe. Everything is designed to happen inside their boundaries. This makes integration harder, not easier.

VANAR assumes fragmentation as a starting condition. It does not try to replace base layers or execution environments. It positions itself as connective infrastructure that allows intelligence to persist across boundaries. This requires a different architecture than one built to maximize internal activity.

Forked designs often inherit governance and incentive models that assume homogeneity. VANAR’s design accepts diversity. Different execution environments. Different tools. Different workflows. The system is built to coordinate rather than dominate.

This coordination-first mindset also influences how value accrues.

In many forked ecosystems, value is extracted through activity. More transactions. More fees. More speculation. VANAR’s value proposition is different. Value accrues where intelligence compounds. Where systems become more useful over time. Where memory and reasoning reduce waste and error.

This is why $VANRY is not framed as a generic utility token. It coordinates participation across intelligence layers. It aligns incentives around persistence and reliability rather than raw throughput. Forked systems often struggle to reframe value because their token economics are deeply tied to execution metrics.

Building from the ground up also allowed VANAR to avoid legacy compromises.

Older chains made sense in an era when compute was expensive and AI was peripheral. Today, the opposite is true. AI is central, and compute is abundant. Systems need to be designed to manage intelligence responsibly, not just to execute instructions quickly. VANAR reflects this shift by embedding intelligence-supporting primitives into its core.

This does not mean VANAR rejects existing ecosystems. On the contrary, it is designed to work with them. But it does so without inheriting their constraints. It composes instead of copying.

The practical result is an infrastructure that feels different to use. Builders notice fewer edge cases. Agents behave more consistently. Systems scale in complexity without collapsing. These are not flashy improvements. They are the kinds of improvements that make infrastructure usable in the real world.

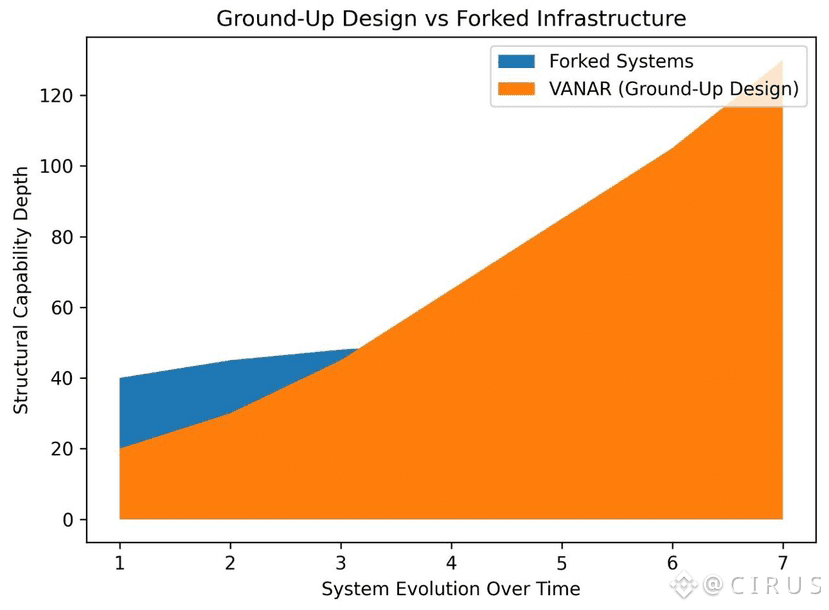

Forked systems often look impressive early on because they inherit maturity. Over time, their limitations surface. Ground-up systems start slower but age better. They are built for problems that did not exist when older architectures were conceived.

VANAR is betting on that trajectory.

It is not trying to win by being louder or faster out of the gate. It is trying to win by being structurally correct for the future it is targeting. A future where intelligence is persistent. Where agents operate autonomously. Where systems are trusted because they behave coherently over time.

My take is that the logic behind this decision will only become more obvious as AI-driven systems mature. The infrastructure that supports them cannot be an afterthought. It must be intentional from the start. VANAR's choice to build from the ground up reflects an understanding that some problems cannot be forked away. They must be designed for.

In the end, the difference is not ideological. It is practical. Forked systems optimize what already works. VANAR is optimizing for what has not worked yet, but needs to.