A few months back, I was testing a small automated trading bot that used basic AI signals to adjust positions. Nothing exotic. Just sentiment scoring and simple rule-based execution. The AI side worked fine on its own. The moment I tried wiring it into on-chain execution, things got messy. Data lived off-chain. Decisions happened somewhere else. Compliance checks meant manual intervention every few hours just to stay safe.

What bothered me wasn’t performance. It was the uncertainty. I couldn’t tell how this setup would look under real regulatory scrutiny. At some point, automated decisions start to resemble financial advice, and the line gets blurry fast. The UX reflected that tension. Extra checks. Extra gas. Extra doubt. It felt like I was one audit away from shutting the whole thing down.

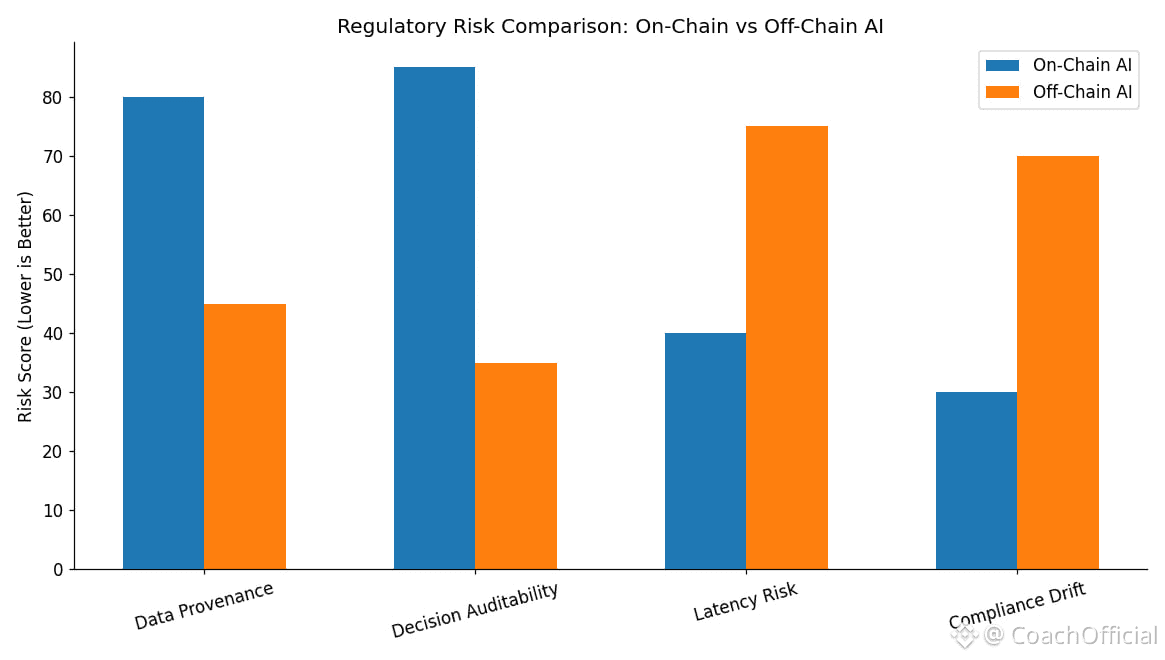

That’s the broader friction showing up as AI collides with Web3. Once AI is involved, questions around data provenance, decision traceability, and accountability stop being theoretical. Most stacks weren’t built for that. They bolt AI on top, push data off-chain, and hope no one asks hard questions. For developers, that means duplicated checks and fragile automations. For users, it means systems that work until they don’t. In finance or asset-heavy use cases, one unexplained decision can turn into a legal problem quickly.

It reminds me of retrofitting smart systems into old infrastructure. Sensors and automations look impressive, but the underlying wiring can’t handle the load. When regulators inspect, you’re forced into expensive rewrites. That’s the risk when intelligence isn’t native to the system it runs on.

#Vanar is clearly trying to avoid that trap by treating AI as a first-class primitive rather than an add-on. Instead of leaning on external oracles and off-chain logic, it embeds compression, memory, and reasoning directly into the chain. The goal isn’t raw speed. It’s predictability. That design choice became clearer after the full AI-native infrastructure launch in January 2026, which built on the earlier V23 upgrade that stabilized these components.

Two pieces matter most here. The first is Neutron, the compression layer. Raw inputs, like documents or metadata, get compressed into compact “Seeds” that can be queried on-chain without bloating storage. That matters for compliance because it preserves evidence without exposing raw data. The second is Kayon, the reasoning engine. It enforces execution constraints directly on-chain, meaning logic paths can be checked without relying on off-chain attestations. In recent tests, basic rule checks completed fast enough to be usable. More importantly, they were auditable.
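To make the idea concrete, here is a rough Python sketch of the general pattern: commit to the raw input with a hash, keep only a few queryable fields, and record every rule check as an audit trail. This is not Vanar's API. The `Seed` class, `make_seed`, `check_rules`, and the field names are all hypothetical, and real Neutron Seeds and Kayon checks may look nothing like this.

```python
import hashlib
import json
import zlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Seed:
    """Hypothetical compact record. NOT Neutron's real Seed format."""
    digest: str           # SHA-256 of the canonical raw input
    compressed_size: int  # size in bytes of the compressed payload
    summary: dict         # the only fields exposed for querying


def make_seed(document: dict, public_fields: list[str]) -> tuple[Seed, bytes]:
    """Compress a document and return (seed, compressed payload)."""
    # Canonical JSON so the same document always yields the same digest.
    canonical = json.dumps(document, sort_keys=True, separators=(",", ":")).encode()
    payload = zlib.compress(canonical)
    summary = {key: document[key] for key in public_fields}
    seed = Seed(hashlib.sha256(canonical).hexdigest(), len(payload), summary)
    return seed, payload


def check_rules(seed: Seed, rules: dict) -> list[dict]:
    """Evaluate upper-bound rules against the summary and log each step."""
    trail = []
    for name, (field, limit) in rules.items():
        value = seed.summary[field]
        trail.append({"rule": name, "field": field, "value": value,
                      "limit": limit, "passed": value <= limit})
    return trail


# Illustrative data only.
doc = {"account": "acct-123", "notional_usd": 4000, "leverage": 2, "notes": "private"}
seed, payload = make_seed(doc, ["notional_usd", "leverage"])
trail = check_rules(seed, {
    "max_notional": ("notional_usd", 10000),
    "max_leverage": ("leverage", 3),
})
for step in trail:
    print(step)
```

The point of the sketch is the shape of the evidence. The digest ties the decision to a specific input, the summary leaves out private fields like `notes`, and the trail shows which rule saw which value.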

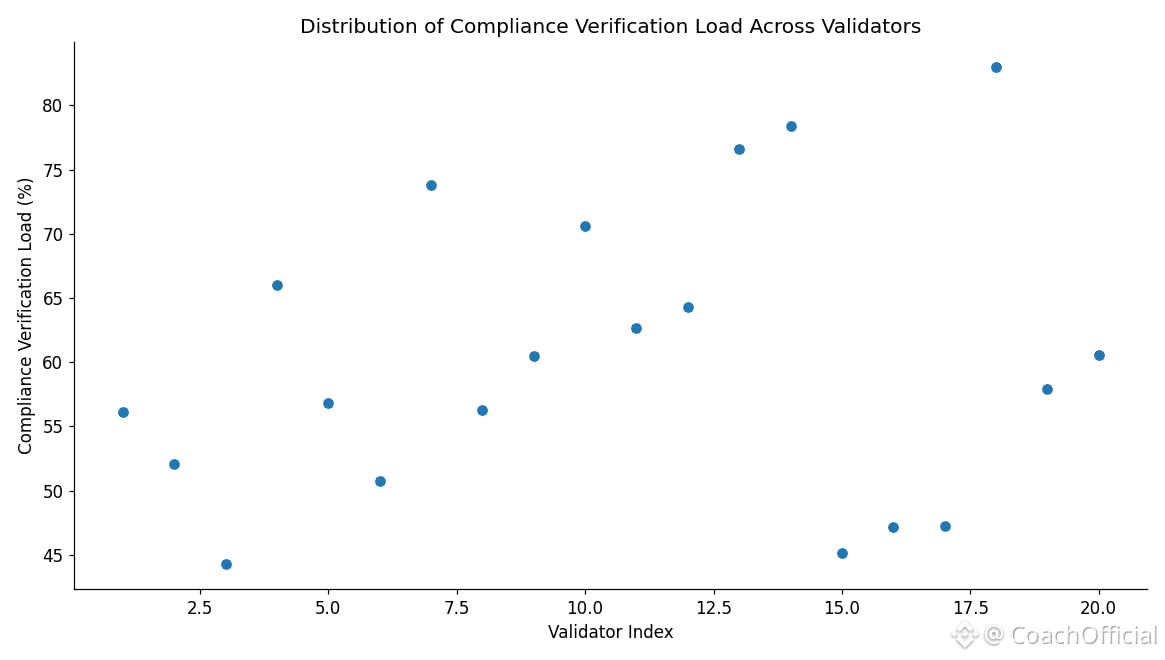

This setup helps reduce regulatory ambiguity, but it doesn’t eliminate it. $VANRY itself stays in the background, paying for execution, compression, and settlement. Burns scale with usage. Staking secures validators. Governance adjusts parameters like emissions and participation thresholds. None of that screams speculation, but regulators don’t always care about intent. They care about outcomes.

And that’s where the risk sits. AI systems that touch financial flows attract attention. Rules like the EU AI Act push toward explainability and auditability. Vanar’s architecture points in that direction, but it’s still early. If regulators decide on-chain reasoning needs formal certification or disclosures, parts of the stack may need rework. In the U.S., there’s another unresolved question: whether AI-driven execution could be framed as automated investment advice, and whether token mechanics tied to usage could be interpreted in ways the project didn’t anticipate.

There are also technical failure modes with regulatory consequences. If a Neutron compression error corrupts a Seed during high-volume asset processing, Kayon could validate the wrong state. That’s not just a bug. That’s a compliance incident. Settlements halt. Disputes follow. Trust erodes quickly in environments where “explain your decision” isn’t optional.
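One generic guard against that failure mode is to verify a payload against its recorded digest before any rule is evaluated, and to halt rather than validate on a mismatch. The Python sketch below assumes a zlib-compressed payload and a SHA-256 digest. `SeedIntegrityError` and `load_verified` are hypothetical names, and nothing here reflects how Neutron or Kayon actually handle corruption.

```python
import hashlib
import zlib


class SeedIntegrityError(Exception):
    """Raised when a stored payload no longer matches its recorded digest."""


def load_verified(payload: bytes, expected_digest: str) -> bytes:
    """Return the decompressed bytes only if they match the expected digest."""
    try:
        raw = zlib.decompress(payload)
    except zlib.error as exc:
        # A damaged stream usually fails here, before the digest check.
        raise SeedIntegrityError("payload failed to decompress") from exc
    if hashlib.sha256(raw).hexdigest() != expected_digest:
        raise SeedIntegrityError("digest mismatch: refusing to validate")
    return raw


# Illustrative data only.
raw = b'{"asset":"bond-7","amount":1000}'
payload = zlib.compress(raw)
digest = hashlib.sha256(raw).hexdigest()

# A payload that decompresses cleanly but holds different content.
tampered = zlib.compress(b'{"asset":"bond-7","amount":9000}')

print(load_verified(payload, digest) == raw)
try:
    load_verified(tampered, digest)
except SeedIntegrityError as err:
    print("halted:", err)
```

Failing closed like this turns a silent wrong-state validation into a visible halt, which is easier to explain to an auditor than a settlement that went through on bad data.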

Market-wise, $VANRY remains small. Liquidity is modest. Price moves mostly follow narrative bursts around AI launches. That’s noise. The real question is slower and less visible. Can developers build systems that prove why an AI made a decision, without bolting on extra compliance layers? And can they do it consistently enough that regulators stop seeing AI-Web3 as experimental risk?

That answer won’t come from announcements. It’ll come from usage. From second and third deployments. From audits that pass without rewrites. If Vanar’s stack holds up under that pressure, it earns credibility quietly. If it doesn’t, regulation won’t wait for the roadmap to catch up.

@Vanarchain #Vanar $VANRY