A few months back, I was archiving some older trading datasets. Nothing fancy, just a few gigs of historical price data and model outputs that I use when I’m testing strategies or rerunning old ideas. I’d been keeping everything on centralized cloud storage, which works, but I wanted to move part of it somewhere decentralized so I wouldn’t be tied to one provider forever. In theory, it sounded simple. In practice, it wasn’t. One network ended up costing way more than I expected just to upload, and it took forever to finish. Another one claimed it was “highly reliable,” but a small network issue meant my data was inaccessible for hours. No disaster, but losing that time made the friction impossible to ignore.

The bigger problem is that decentralized storage still feels like it’s built more around principles than real usage. To guarantee availability, many systems crank redundancy way up, sometimes storing the same data ten times over. That gets expensive fast. And even with all that redundancy, things still slow down when the network is busy. If you’re dealing with AI datasets or large media files, you notice it immediately. Reads become inconsistent, pricing shifts with token volatility, and access controls feel bolted on instead of native. Instead of trusting that something valuable is safely stored, you end up constantly checking whether it’s still there and whether it’ll load when you need it.

It reminds me of a public library system spread across dozens of branches. On paper, it’s resilient. No single fire wipes out the collection. But if borrowing one book means coordinating across multiple locations, the experience gets frustrating fast. Redundancy only helps if access stays simple when demand rises.

Walrus takes a more focused path. It’s built specifically for large blobs such as datasets, videos, and archives. Instead of extreme replication, it uses erasure coding, so redundancy stays closer to four or five times, which keeps costs down while still protecting availability. Certification and coordination run through Sui, so applications can verify that data exists and is properly distributed without pulling the entire file. Programmability is a big part of the design too. Features like Seal, introduced in September 2025, let developers enforce access rules directly at the storage layer, which matters for AI training data or gated media. Smaller files aren’t stored individually; they’re bundled through Quilt so the system doesn’t get bloated with per-file overhead. This isn’t theoretical either. Pudgy Penguins went from storing one terabyte of assets to six, and projects like OpenGradient are already using it for permissioned model storage.
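To put the cost difference in numbers, here is a minimal sketch of the standard overhead arithmetic for an MDS-style erasure code: a blob is split into k data pieces and extended with enough parity to survive losing f pieces, and plain replication is just the special case k = 1. The parameters below are hypothetical and chosen only for illustration. Walrus’s actual encoding (Red Stuff, a two-dimensional scheme) is more involved, so treat this as intuition, not as its real numbers.

```python
def storage_overhead(data_pieces: int, losses_tolerated: int) -> float:
    """Bytes stored per byte of original data for an MDS-style erasure code.

    The blob is split into `data_pieces` pieces and encoded into
    data_pieces + losses_tolerated pieces; any `data_pieces` of them can
    rebuild it. data_pieces=1 means every piece is a full copy, i.e.
    plain replication.
    """
    if data_pieces < 1 or losses_tolerated < 0:
        raise ValueError("need data_pieces >= 1 and losses_tolerated >= 0")
    total_pieces = data_pieces + losses_tolerated
    return total_pieces / data_pieces


if __name__ == "__main__":
    dataset_gb = 5.0  # hypothetical archive size
    losses = 7        # hypothetical: survive 7 pieces going offline
    for k in (1, 2, 4):
        label = "replication" if k == 1 else f"erasure code, k={k}"
        overhead = storage_overhead(k, losses)
        print(f"{label}: {overhead:.2f}x -> {dataset_gb * overhead:.2f} GB stored")
```

With the same tolerance for 7 lost pieces, replication stores 40 GB while the k=2 code stores 22.5 GB and the k=4 code 13.75 GB. Spreading a blob over more data pieces lowers overhead further, at the cost of contacting more nodes to read it back.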

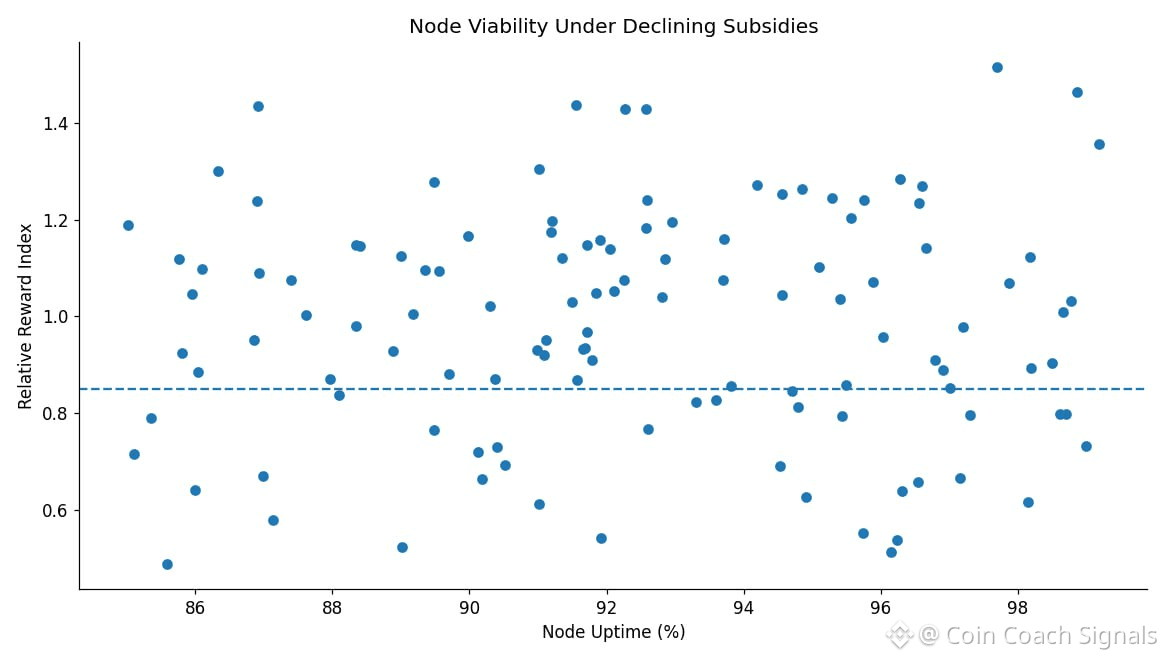

The WAL token itself is pretty straightforward. It’s used to pay for storage, with pricing designed to stay relatively stable in dollar terms rather than swinging wildly with market moves. Storage is paid for a fixed period upfront, and those fees are distributed gradually to nodes and stakers over that period instead of being paid out all at once. Staking is mostly delegated and helps decide which nodes store data, and rewards depend on performance, not just on how much WAL someone locks up. Governance works through stake-weighted voting on parameters like penalties and upgrades, and slashing was phased in after mainnet to enforce uptime. Because most of the value capture comes from usage and penalties rather than flashy burn mechanics, the token’s behavior is fairly predictable.
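The gradual, performance-weighted payout can be made concrete with a toy model. Everything here is a hypothetical simplification, not Walrus’s actual reward formula: a prepaid fee is released in equal per-epoch slices, each slice is shared across nodes by delegated stake times a performance score, and each node’s share is split between the operator’s commission and its delegators. The node names, stakes, scores, and commission rates are invented for illustration.

```python
from fractions import Fraction

# Hypothetical node table: name -> (delegated_stake, performance_pct, commission_pct)
NodeTable = dict[str, tuple[int, int, int]]


def epoch_rewards(prepaid_fee: int, epochs: int, nodes: NodeTable) -> dict[str, tuple[Fraction, Fraction]]:
    """Toy model of one epoch's payout from a prepaid storage fee.

    The fee is released in equal slices over `epochs` epochs. Each slice is
    shared across nodes in proportion to stake * performance, so a node with
    plenty of stake but poor uptime earns less. Each node's share is then
    split into (operator commission, delegator rewards).
    """
    epoch_slice = Fraction(prepaid_fee, epochs)
    weights = {name: stake * perf for name, (stake, perf, _) in nodes.items()}
    total_weight = sum(weights.values())
    if total_weight == 0:
        raise ValueError("no stake with nonzero performance")
    payouts = {}
    for name, (_, _, commission_pct) in nodes.items():
        node_share = epoch_slice * weights[name] / total_weight
        operator_cut = node_share * commission_pct / 100
        payouts[name] = (operator_cut, node_share - operator_cut)
    return payouts


if __name__ == "__main__":
    nodes = {"node-a": (600, 100, 10), "node-b": (400, 50, 5)}
    for name, (operator, delegators) in epoch_rewards(1200, 12, nodes).items():
        print(name, float(operator), float(delegators))
```

Here each epoch releases 100 of the 1,200 prepaid. node-b holds 40% of the stake but earns only 25 of those 100 because of its 50% performance score, which is the "performance, not just stake" point in miniature. Exact fractions keep the payouts summing to the epoch slice with no rounding drift.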

From a market perspective, supply matters more than narratives. WAL has a max supply of five billion tokens, and circulating supply is still well below that, rising steadily as unlocks continue. Ecosystem incentives, investor allocations, and community distributions all add supply over time. Market cap and volume show interest, but the gap between circulating and max supply also shows that much of the supply expansion is still ahead rather than already absorbed.
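One way to quantify "supply expansion still ahead" is to compare circulating supply with the five-billion max supply, and to size an individual unlock against the float it lands in. The circulating figure and unlock size below are hypothetical placeholders, not actual WAL data; substitute current numbers from a supply tracker.

```python
MAX_SUPPLY = 5_000_000_000  # WAL max supply, as stated above


def share_not_yet_circulating(circulating: int) -> float:
    """Fraction of max supply that has yet to enter circulation."""
    return (MAX_SUPPLY - circulating) / MAX_SUPPLY


def unlock_dilution(circulating: int, unlocked: int) -> float:
    """Share of the post-unlock float made up of newly unlocked tokens."""
    return unlocked / (circulating + unlocked)


if __name__ == "__main__":
    circulating = 1_500_000_000  # hypothetical placeholder
    unlock = 100_000_000         # hypothetical single unlock
    print(f"not yet circulating: {share_not_yet_circulating(circulating):.0%}")
    print(f"unlock dilution:     {unlock_dilution(circulating, unlock):.2%}")
```

With these placeholders, 70% of max supply is still to come and a single unlock makes up 6.25% of the new float, which is why unlock dates tend to move price more than the headline market cap suggests.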

Short-term price action usually reacts to unlocks and announcements. Partnerships can spark volatility, especially when they coincide with new tokens hitting the market. The community airdrop and subsidy releases made it clear how quickly sell pressure can show up when recipients decide to cash out. Watching only those moments, though, misses the bigger picture. Real value capture depends on whether storage demand becomes repeat behavior rather than one-off uploads tied to incentives.

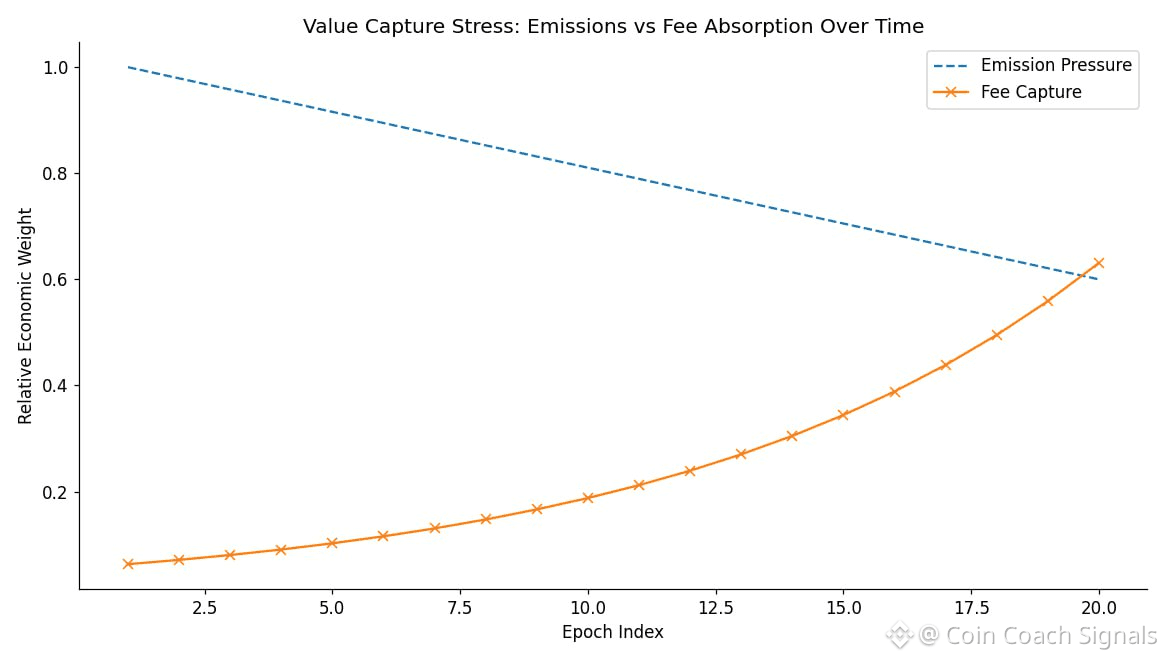

That’s where the real risk sits. If storage usage grows enough, fees and penalties can slowly offset emissions. If it doesn’t, node incentives weaken over time. Storage networks don’t usually fail overnight. They decay quietly. Retrievals take longer. Access becomes less reliable. Trust erodes before price reacts.
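The "quiet decay" scenario can be sketched as a toy simulation: a node’s per-epoch income is fee revenue plus a subsidy that tapers each epoch, and the question is whether fee growth outpaces the taper before income drops below operating cost. All figures and rates are hypothetical, and the model deliberately ignores penalties, staking dynamics, and token price.

```python
from typing import Optional


def first_shortfall_epoch(
    fee_revenue: float,
    fee_growth: float,
    subsidy: float,
    subsidy_decay: float,
    node_cost: float,
    horizon: int,
) -> Optional[int]:
    """Return the first epoch where fees + subsidy fall below node_cost,
    or None if income stays at or above cost for the whole horizon."""
    for epoch in range(horizon):
        if fee_revenue + subsidy < node_cost:
            return epoch
        fee_revenue *= 1 + fee_growth   # usage-driven fees compound
        subsidy *= 1 - subsidy_decay    # incentives taper off
    return None


if __name__ == "__main__":
    # Hypothetical node: costs 100 per epoch, earns 40 in fees plus an
    # 80 subsidy that shrinks 10% per epoch.
    flat = first_shortfall_epoch(40, 0.00, 80, 0.10, 100, horizon=50)
    growing = first_shortfall_epoch(40, 0.15, 80, 0.10, 100, horizon=50)
    print("flat usage, shortfall at epoch:", flat)
    print("15% fee growth, shortfall at epoch:", growing)
```

With flat usage the node slips below cost at epoch 3; with fees growing 15% per epoch, income never falls below cost within the horizon. Nothing breaks abruptly in either case, which is the point: the difference only shows up as the subsidy fades.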

Walrus still has a clear path. The architecture is sensible, integrations are real, and programmable data is a genuine differentiator. But tokenomics don’t care about good intentions. They respond to sustained demand. Whether WAL captures long-term value depends less on announcements and more on whether builders keep paying for storage once incentives taper off.

That outcome won’t be decided by hype cycles. It shows up quietly, in second and third transactions, when teams come back because the system works without friction. Over time, that behavior determines whether circulating supply growth stays manageable or turns into a constant drag.
