A few months back, I was playing around with an on-chain agent meant to handle some basic portfolio alerts. Nothing fancy. Pull a few signals from oracles, watch for patterns, maybe trigger a swap if conditions lined up. I’d built versions of this on Ethereum layers before, so I figured it would be familiar territory. Then I tried layering in a bit of AI to make the logic less rigid. That’s where things started to fall apart. The chain itself had no real way to hold context. The agent couldn’t remember prior decisions without leaning on off-chain storage, which immediately added cost, latency, and failure points. Results became inconsistent. Sometimes it worked, sometimes it didn’t. As someone who’s traded infrastructure tokens since early cycles and watched plenty of “next-gen” layers fade out, it stopped me for a second. Why does intelligence still feel like an afterthought in these systems? Why does adding it always feel bolted on instead of native?

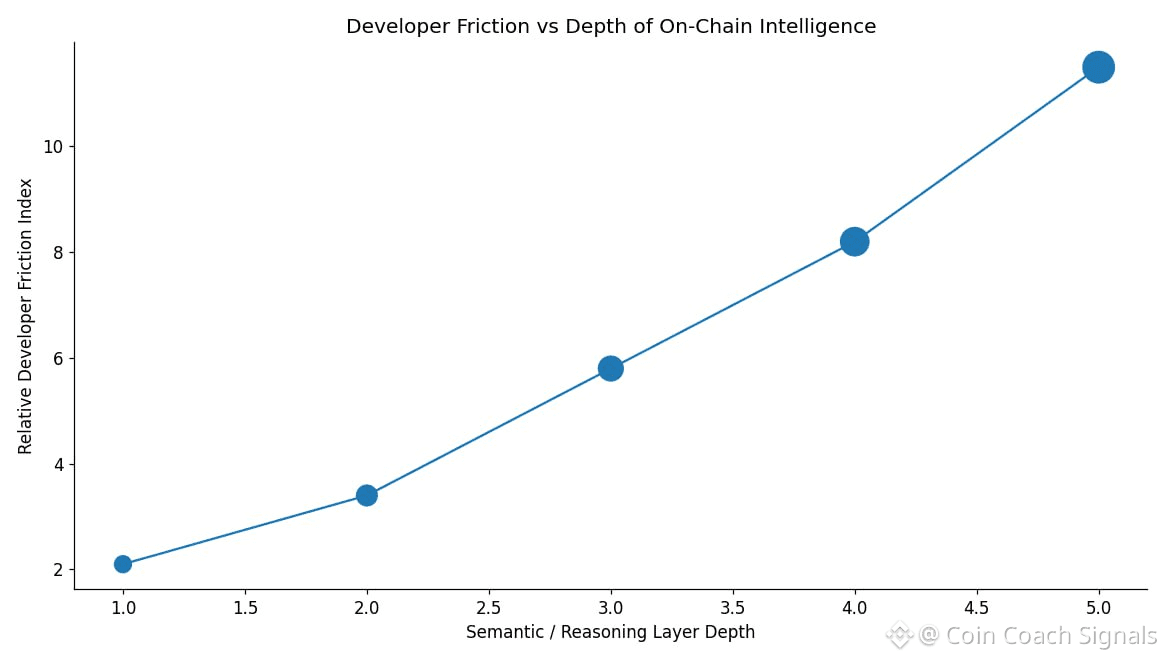

The problem isn’t just fees or throughput, even though those always get the headlines. It’s how blockchains fundamentally treat data. Most of them see it as inert. You write data. You read data. That’s it. There’s no built-in notion of context, history, or meaning that persists in a usable way. Once you want an application to reason over time, everything spills off-chain. Developers glue together APIs, external databases, inference services. Latency creeps in. Costs rise. Things break at the seams. And for users, the experience degrades fast. Apps forget what happened yesterday. You re-enter preferences. You re-authorize flows. Instead of feeling intelligent, the software feels forgetful. That friction is subtle, but it’s enough to keep most “smart” applications stuck in demo territory instead of daily use.

I keep coming back to the image of an old filing cabinet. You can shove documents into drawers all day long, but without structure, tags, or links, you’re just storing paper. Every time you want insight, you dump everything out and start over. That’s fine for archiving. It’s terrible for work that builds over time. Most blockchains still operate like that cabinet. Data goes in. Context never really comes back out.

That’s what led me to look more closely at @Vanarchain. The pitch is simple on the surface but heavy underneath. Treat AI as a first-class citizen instead of an add-on. Don’t try to be everything. Stay EVM-compatible so developers aren’t locked out, but layer intelligence into the stack itself. The goal isn’t raw throughput or flashy metrics. It’s making data usable while it lives on-chain. In theory, that means fewer external dependencies and less duct tape holding apps together. In practice, it turns the chain into more of a toolkit than a blank canvas. You still get execution, but you also get semantic compression and on-chain reasoning primitives that applications can tap into directly, which matters if decisions need to be traceable later, like in payments, compliance, or asset workflows.

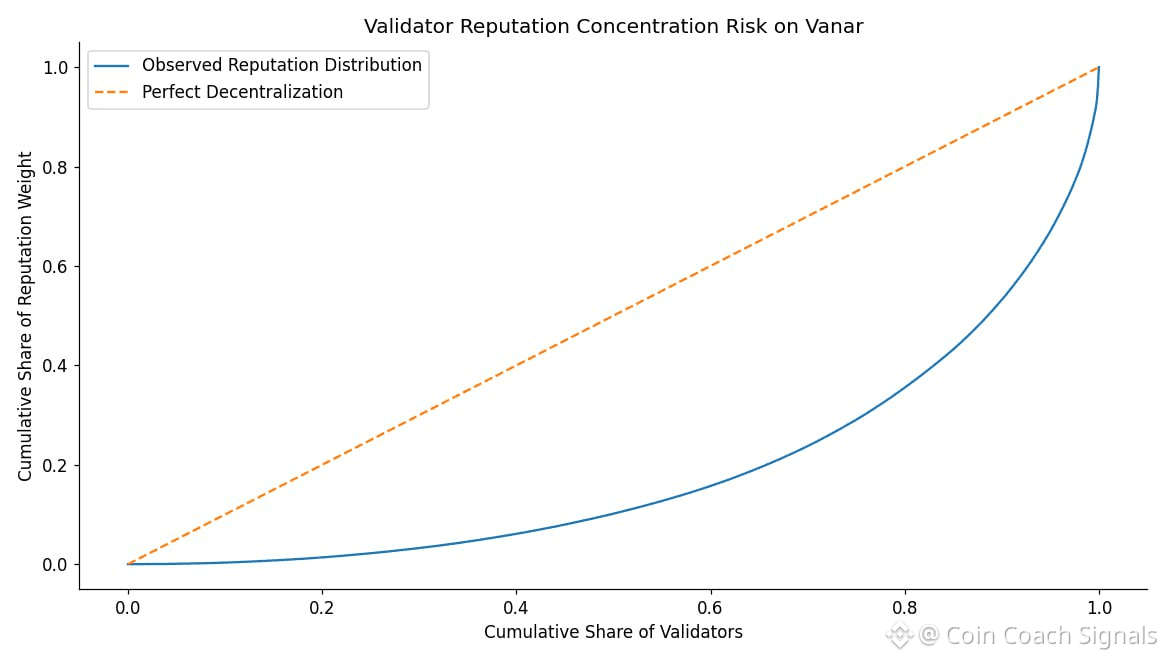

The V23 protocol upgrade in early January 2026 was one of the more tangible steps in that direction. Validator count jumped roughly 35 percent to around eighteen thousand, which helped decentralization without blowing up block times. Explorer data still shows blocks landing anywhere between three and nine seconds, which is slow compared to pure speed chains but consistent enough for stateful logic.

One important detail is how consensus works. It blends Proof of Authority with Proof of Reputation. Validators are selected based not just on stake, but on historical behavior. That sacrifices some permissionlessness, but it buys predictability, which becomes more important once you’re running logic-heavy applications. Total transactions crossing forty-four million tells you the chain is being used, even if that usage is uneven.
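To make the stake-plus-reputation idea concrete, here is a minimal sketch of how a proposer could be picked when selection depends on both. This is not Vanar's actual algorithm. The `Validator` fields, the 0 to 1 reputation scale, the `min_reputation` floor, and the stake-times-reputation weighting are all assumptions made up for illustration.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Validator:
    name: str
    stake: float       # tokens staked (hypothetical units)
    reputation: float  # 0.0 to 1.0, from historical behavior (assumed scale)


def selection_weight(v: Validator, min_reputation: float = 0.5) -> float:
    """Stake scaled by reputation; validators below the floor are excluded.

    Both the floor and the multiplicative weighting are illustrative
    assumptions, not a documented rule of any real chain.
    """
    if v.reputation < min_reputation:
        return 0.0
    return v.stake * v.reputation


def pick_proposer(validators: list[Validator], rng: random.Random) -> Validator:
    """Pick one validator at random, in proportion to its selection weight."""
    weights = [selection_weight(v) for v in validators]
    if sum(weights) == 0:
        raise ValueError("no eligible validators")
    return rng.choices(validators, weights=weights, k=1)[0]


validators = [
    Validator("a", stake=100, reputation=0.9),
    Validator("b", stake=500, reputation=0.2),  # large stake, poor history
    Validator("c", stake=100, reputation=0.6),
]
rng = random.Random(7)
picks = [pick_proposer(validators, rng).name for _ in range(200)]
```

In this toy setup validator `b` never produces a block despite holding the most stake. That is the trade-off in miniature: less permissionless, more predictable.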

Then there’s Neutron. This is where things get interesting and risky at the same time. Raw data gets compressed into what they call “Seeds” using neural techniques. Those Seeds stay queryable without decompressing the whole payload. Storage costs drop. Context stays accessible. Apps can reason without dragging massive datasets around. That’s a meaningful improvement over dumping blobs into contracts. It also means developers have to adapt their thinking. This is not plug-and-play Solidity anymore. You’re building around a modular intelligence layer, and that’s a learning curve many teams may not want to climb.

$VANRY itself stays out of the spotlight. It pays for transactions. Validators stake it to participate in block production. Reputation affects rewards. Slashing exists for bad behavior. Governance proposals flow through token holders, including recent changes to emission parameters. There’s nothing exotic here. Emissions fund growth. Security incentives try to keep validators honest. It’s plumbing, not narrative fuel.

Market-wise, the picture is muted. Circulating supply sits near 1.96 billion tokens. Market cap hovers around fourteen million dollars as of late January 2026. Daily volume is thin, usually a few million at most. Liquidity exists, but it’s shallow. Outside of announcements, price discovery is fragile.
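For a quick sanity check, the supply and market cap figures above imply a per-token price. The snippet uses only those two rounded numbers, so treat the result as a ballpark, not a quote.

```python
# Rounded figures from the paragraph above (late January 2026).
circulating_supply = 1.96e9  # tokens
market_cap_usd = 14e6        # US dollars

# Market cap = price * circulating supply, so price = cap / supply.
implied_price = market_cap_usd / circulating_supply
print(f"{implied_price:.4f}")  # 0.0071
```

That works out to well under a cent per token.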

Short-term trading mostly tracks hype cycles. AI headlines. Partnership announcements. The Worldpay agentic payments news in December 2025 briefly woke the market up. Hiring announcements did the same for a moment. Then attention drifted. That pattern is familiar. You can trade those waves if you’re quick, but they fade fast.

Long-term value, if it shows up at all, depends on whether developers actually rely on things like Neutron and Kayon, the stack’s reasoning layer, in production. If teams start building workflows that genuinely need on-chain memory and reasoning, fees and staking demand follow naturally. But that kind of habit formation is slow. It doesn’t show up in daily candles.

There are real risks sitting under the surface. Bittensor already owns mindshare in decentralized AI. Ethereum keeps absorbing new primitives through layers and tooling. Vanar’s modular approach could be too foreign for many developers. Usage metrics back that concern up. Network utilization is close to zero percent. Only about 1.68 million wallets exist despite tens of millions of transactions, suggesting activity is narrow and concentrated.

One scenario that’s hard to ignore is governance capture. If a group of high-reputation validators coordinates during a high-impact event, block production could skew. Settlements slow. Trust erodes. Hybrid systems always carry that risk.

And then there’s the biggest unknown. Will semantic memory actually matter enough to pull developers away from centralized clouds they already trust? Seeds and on-chain reasoning sound powerful, but power alone doesn’t drive adoption. Convenience does. Unless these tools save time or money, or cut risk, in a way that’s obvious, many teams will stick with what they know.

Looking at it from a distance, this feels like one of those infrastructure bets that only proves itself quietly. The second use. The third. The moment when a developer doesn’t think about alternatives because the tool already fits. #Vanar is trying to move from primitives to products. Whether that jump lands or stalls is something only time and repeated usage will answer.
