A few months back, I was trying to store some fairly large datasets for a trading bot I’d been tinkering with. Mostly historical price data and a couple of ML models. Nothing exotic, but each file was heavy, around 500MB. I was juggling centralized cloud storage and a few decentralized options, and honestly, it was more annoying than it should have been. Uploads would stall if the network got busy. Retrieval times felt random depending on the hour. And the costs were hard to pin down, especially when fees were tied to tokens swinging all over the place. After years of trading infrastructure tokens and even running nodes on some chains, I was struck by how clunky something as basic as storage still feels in crypto, an ecosystem that loves to talk about efficiency.

That experience pushed me to think about the bigger problem with data storage in blockchain systems. Most networks still treat data like a secondary concern, bundling it together with execution and state. That works fine for tiny contract variables, but falls apart fast when you deal with images, videos, or AI datasets. Developers end up jumping through hoops, offloading content to centralized servers just to keep costs sane, which quietly reintroduces the same failure points crypto is supposed to avoid. Users feel it too. Apps load slowly. Links break. Models fail when nodes drop off. None of this is dramatic on its own, but it adds friction everywhere. Latency makes people impatient, and unpredictable pricing makes planning hard, especially once a project starts to scale.

It reminds me a lot of the early internet days. You’d kick off a big download over dial-up, walk away, and come back to find it failed halfway through because someone picked up the phone. No smart retries. No redundancy. Just wasted time. Things didn’t really improve until systems were designed around failure instead of pretending it wouldn’t happen.

That’s what originally made Walrus stand out to me. It’s not trying to be another full blockchain. It sits on top of Sui and focuses narrowly on storing and serving large blobs of data. No smart contract playground. No execution layer bloat. Just verifiable storage and retrieval. That narrow focus matters. Builders don’t have to reinvent storage logic or worry about whether files will still exist when users need them. For things like AI agents, games, or media-heavy apps, persistence isn’t optional. Walrus seems built around that assumption instead of bolting it on later.

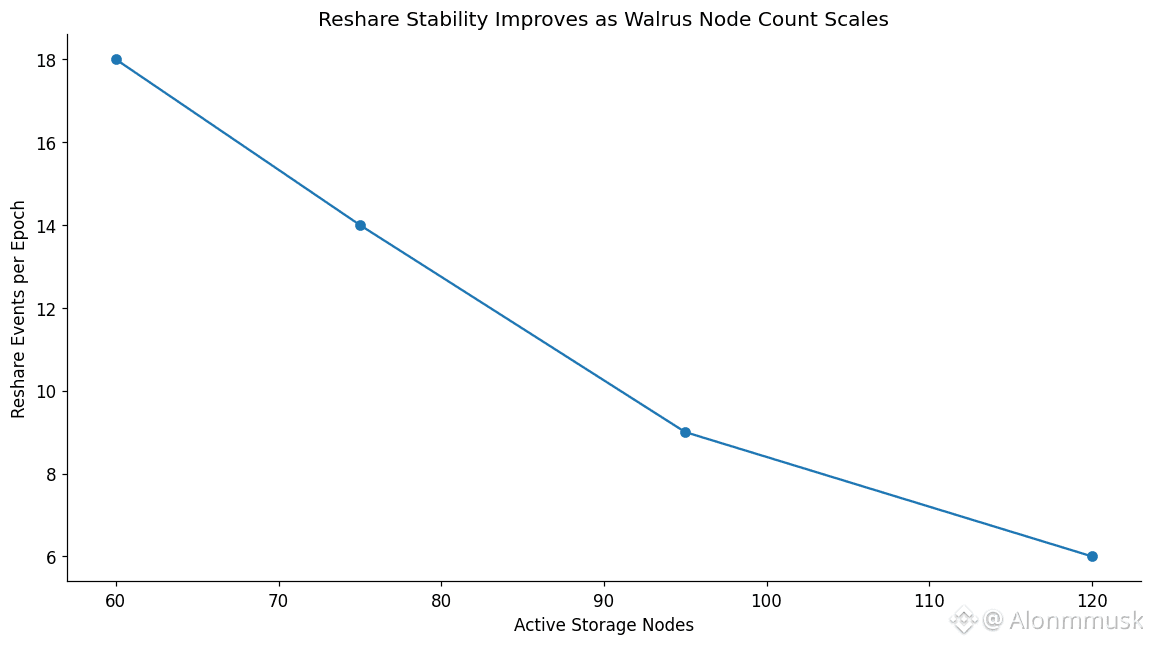

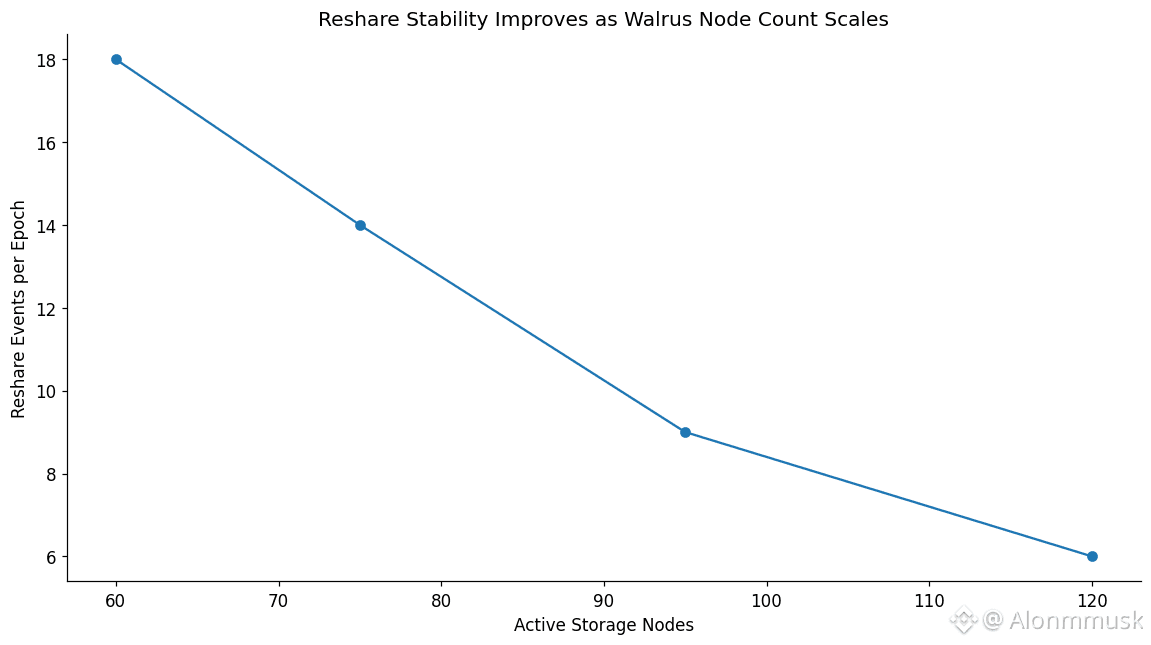

One of the more practical design choices is how it handles redundancy. Instead of brute-force replication, it uses erasure coding. A file gets broken into fragments with extra parity data, so you don’t need every piece to reconstruct it. Lose some nodes, the data still comes back. Based on their late-2025 reports, this approach slashed storage overhead compared to full replication. There’s also the reshare process, which quietly redistributes data as nodes come and go. That went live around mainnet and helps keep availability stable without users needing to babysit their uploads. The trade-off is more computation upfront, but the payoff is lower long-term costs and fewer nasty surprises. Recent metrics showing sub-two-second retrieval for 100MB blobs line up with that design choice actually working in practice.
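To make the erasure-coding idea concrete, here is a toy sketch in Python. It is not Walrus's actual encoding. It assumes the simplest possible scheme, `k` data fragments plus a single XOR parity fragment, and the names `encode`, `recover`, and `overhead` are mine, purely for illustration. Real systems use stronger codes that survive many simultaneous losses, but the principle is the same: you don't need every piece to get the file back.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal-size fragments plus one XOR parity fragment."""
    frag_len = -(-len(data) // k)              # ceiling division
    padded = data.ljust(frag_len * k, b"\0")   # pad so fragments line up
    frags = [padded[i * frag_len:(i + 1) * frag_len] for i in range(k)]
    parity = reduce(xor_bytes, frags)
    return frags + [parity]


def recover(frags: List[Optional[bytes]], original_len: int) -> bytes:
    """Rebuild the original data when at most one fragment is missing (None)."""
    missing = [i for i, f in enumerate(frags) if f is None]
    if len(missing) > 1:
        raise ValueError("a single parity fragment can only repair one loss")
    if missing:
        present = [f for f in frags if f is not None]
        frags = list(frags)
        # XOR of all surviving fragments equals the missing one.
        frags[missing[0]] = reduce(xor_bytes, present)
    return b"".join(frags[:-1])[:original_len]  # drop parity and padding


def overhead(k: int, m: int) -> float:
    """Stored bytes per original byte with k data and m parity fragments."""
    return (k + m) / k
```

In this toy setup, four data fragments plus one parity fragment cost 1.25x the original size, versus 3x for keeping three full copies. That gap is the general reason erasure coding can cut overhead compared with full replication, and the XOR work in `encode` and `recover` is a small-scale version of the extra upfront computation.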

WAL, the token, doesn’t pretend to be anything fancy. You use it to pay for storage. Fees are structured around size and duration, with an attempt to keep pricing stable in real terms instead of letting volatility wreck planning. Node operators stake WAL to participate, earning from fees and emissions in return for serving data reliably. Commitments ultimately settle through Sui’s consensus, with WAL tied into that process. Governance exists too, but it’s focused on operational knobs like capacity limits or fee tuning, not abstract signaling. When usage spiked late last year, a chunk of fees got burned, which helped link real activity to supply dynamics instead of hype.
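A rough sketch of what "size times duration, stable in real terms" could look like. Everything here is an assumption for illustration: the per-GiB-per-epoch price, the fiat-denominated quote, and the function names are hypothetical and not taken from Walrus's actual fee schedule.

```python
def storage_fee(size_bytes: int, epochs: int, price_per_gib_epoch: float) -> float:
    """Fee in a stable unit of account: size (GiB) x duration (epochs) x unit price.

    price_per_gib_epoch is a hypothetical parameter, not a real Walrus value.
    """
    gib = size_bytes / 2**30
    return gib * epochs * price_per_gib_epoch


def wal_needed(fee_stable: float, wal_price: float) -> float:
    """Tokens required to cover a fee quoted in the stable unit.

    If the quote stays fixed, a falling token price means more tokens are
    spent, while the real cost to the user stays the same.
    """
    return fee_stable / wal_price
```

With made-up numbers, storing 1 GiB for 10 epochs at 0.5 per GiB-epoch costs 5.0 in the stable unit. At a token price of 0.25 that is 20 tokens, and at 0.5 it is 10 tokens. The token amount moves with the market while the planning number does not, which is the property that makes budgeting possible.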

From a market angle, it’s not tiny, but it’s not frothy either. Around a $190-ish million cap in mid-January, with daily volume in the low teens of millions. Enough liquidity to move, not enough to ignore fundamentals entirely. On-chain metrics tell a more interesting story. Node counts are climbing. Uploads are up meaningfully since Q4. That’s the kind of growth that doesn’t show up in a single candle but matters over time.

Trading it short term feels familiar. Mainnet news, AI narratives, partnerships. You get sharp moves, then pullbacks. I’ve seen plenty of people get chopped trying to chase those. The longer-term question is whether Walrus becomes default infrastructure inside the Sui ecosystem. If apps start assuming Walrus is there, demand becomes habitual rather than speculative. The January 2026 capacity expansion helped there, increasing throughput without blowing out fees. That kind of quiet scaling matters more than headline announcements.

The risks are still very real. Filecoin has scale. Arweave owns the permanence narrative. Walrus is betting on speed, programmability, and tight integration with Sui, but cross-chain usage is still early. Regulatory questions around data storage, especially for AI workloads, haven’t gone away either. The failure scenario that worries me most isn’t some exotic attack. It’s something mundane, like a large chunk of nodes leaving during a reshare cycle after a market shock. Even temporary retrieval failures could spook builders relying on high-value data, and trust is hard to rebuild once it cracks.

Stepping back, storage layers prove themselves quietly. The second retrieval that works. The tenth upload you don’t think about. The moment data just shows up when it’s needed. With Q1 upgrades targeting major capacity gains and Q2 aiming at better interoperability, Walrus has a clear path laid out. Whether it sticks comes down to execution and time. This is the kind of infrastructure that earns relevance slowly, through repetition, not headlines.
