How STON.fi turned grants into a distributed testing framework (featuring Omniston, TON) — read the full interview on CoinEdition

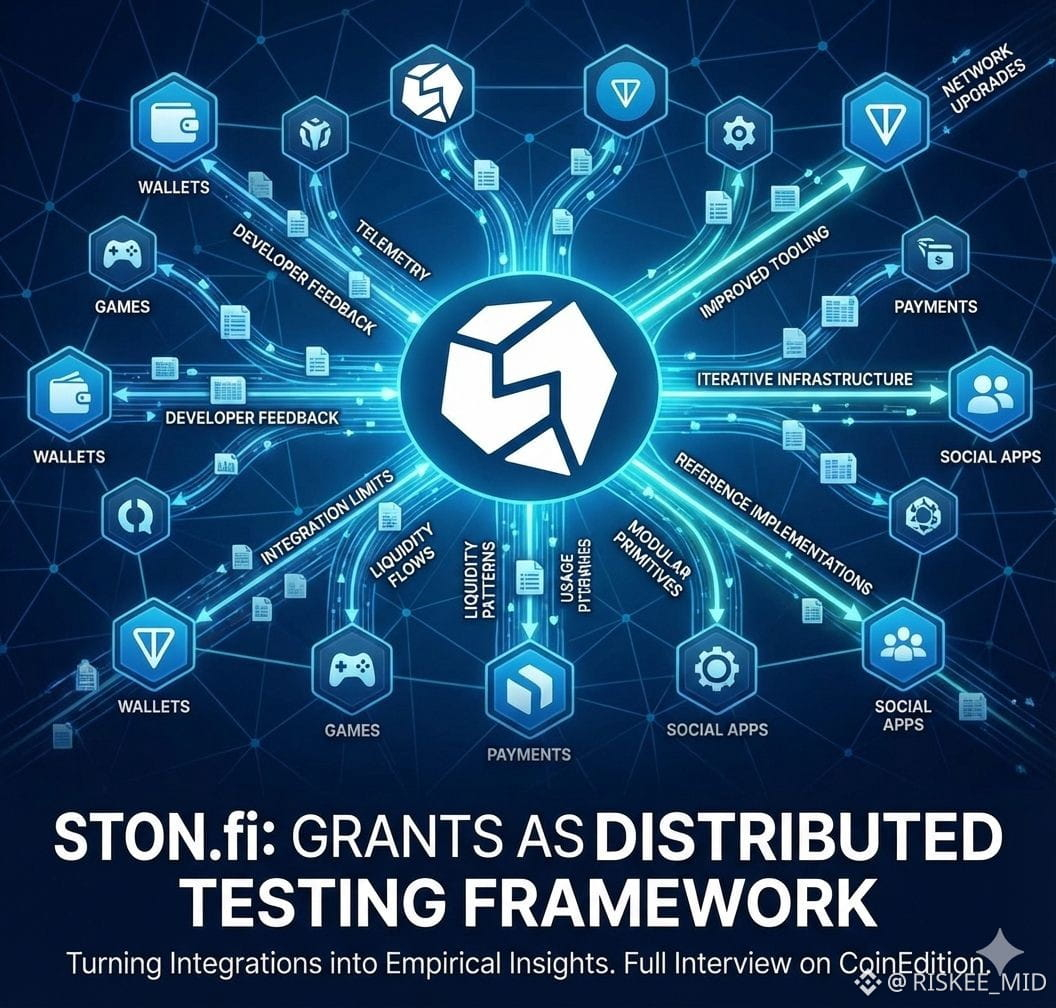

Ecosystem funding is usually pitched as a set of incentives: give teams money, expect new features, liquidity, and users. The STON.fi approach reframes that model. Rather than treating grants as one-way rewards, STON.fi uses them as an instrument for distributed, real-world testing — a deliberate, low-friction way to discover what the platform actually needs by watching how external builders use it.

Grants as distributed experiments

Instead of centralizing product roadmaps or guessing future demand, STON.fi funds scores of TON-native teams across wallets, games, payments, and social apps. Each grantee effectively becomes a live experiment. When teams integrate STON.fi’s tooling and liquidity primitives, they subject the system to a wide range of usage patterns that internal QA and lab tests cannot reproduce: bursty micro-payments from games, cross-wallet routing under realistic latency, or complex multi-party payment flows inside social apps.

Those integrations expose concrete limits — routing edge-cases, shallow liquidity in certain corridors, and missing developer ergonomics — which are far more valuable than hypothetical feature lists. The grant becomes the mechanism for discovery, and every integration yields empirical telemetry and developer feedback.

Continuous feedback, iterative infrastructure

This isn’t just one-off insight collection. The pattern creates a feedback loop: builders surface friction; infrastructure teams adapt; improved tooling lowers onboarding costs; more builders join. Over repeated cycles, the system matures organically.

Because changes respond to observed needs rather than product speculation, STON.fi avoids over-engineering. Instead of building large, risky features no one uses, the project can prioritize incremental improvements that demonstrably unblock real teams. The result is that users see value sooner and engineering effort is spent more efficiently.

Decentralizing innovation and risk

Grants also shift where experimentation happens. Rather than concentrating R&D inside a single team, STON.fi distributes bets across the ecosystem. This diversification achieves two important outcomes:

It spreads technological risk — not every experiment needs to succeed to surface useful lessons.

It creates a library of reference implementations: the simplest integrations that worked in production become templates other teams can copy or extend.

Those reference patterns speed future integrations and reduce the cost of composing new applications on top of the network.

Liquidity, composability, and network effects

As teams adopt common infrastructure standards, integration costs decline and composability improves. Routing and liquidity issues identified during early experiments can be addressed at the protocol or tooling layer, making subsequent integrations easier and more robust. Over time, these incremental gains compound: deeper liquidity attracts more users and integrators, which in turn spawns more innovation — a virtuous cycle that is emergent rather than centrally planned.

Importantly, this growth shows up in practical metrics: shorter integration times, fewer support tickets, higher transaction success rates, and deeper on-chain liquidity for commonly used token pairs.
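To make those metrics concrete, here is a minimal Python sketch of how a program team might summarize them across two cohorts of grantees. Everything in it is an assumption for illustration: the `IntegrationRecord` fields, the team names, and the numbers are hypothetical and do not come from STON.fi or the interview.

```python
from dataclasses import dataclass


@dataclass
class IntegrationRecord:
    """Hypothetical per-grantee record; field names are illustrative only."""
    team: str
    days_to_integrate: float
    support_tickets: int
    tx_attempted: int
    tx_succeeded: int


def summarize(records):
    """Aggregate a cohort of records into the metrics named in the text."""
    if not records:
        raise ValueError("need at least one record")
    attempted = sum(r.tx_attempted for r in records)
    succeeded = sum(r.tx_succeeded for r in records)
    return {
        "avg_days_to_integrate": sum(r.days_to_integrate for r in records) / len(records),
        "tickets_per_team": sum(r.support_tickets for r in records) / len(records),
        # Guard against a cohort that has not sent any transactions yet.
        "tx_success_rate": succeeded / attempted if attempted else 0.0,
    }


# Made-up sample data: an earlier and a later grant cohort.
earlier = [
    IntegrationRecord("wallet-a", 30, 12, 1000, 900),
    IntegrationRecord("game-b", 20, 8, 1000, 940),
]
later = [
    IntegrationRecord("social-c", 10, 3, 2000, 1960),
    IntegrationRecord("payments-d", 14, 5, 2000, 1940),
]

before, after = summarize(earlier), summarize(later)
for name in before:
    print(f"{name}: {before[name]:.2f} -> {after[name]:.2f}")
```

Comparing cohorts rather than individual teams is a deliberate choice in this sketch: a single team's numbers are noisy, while a cohort-level shift is closer to the kind of trend the article describes.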

Practical advantages over classic grants programs

Traditional grant programs have value, but they often suffer from two common weaknesses: they either fund noise (projects that never ship) or they attempt to direct development through top-down feature mandates. The distributed testing approach minimizes both problems by:

Favoring short, targeted grants tied to integration milestones, which encourage shipping and produce quick learnings.

Using grantee telemetry and qualitative developer feedback to inform where platform investment yields the largest ROI.

Scaling improvements horizontally — tooling and primitives that help one team will likely help dozens.
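One simple way to act on that last point is to rank reported friction by how many distinct teams hit it, so that fixes helping the most builders come first. The sketch below assumes feedback arrives as `(team, issue)` pairs; the issue labels and team names are hypothetical, and a real program would likely weigh severity and cost as well.

```python
from collections import defaultdict


def rank_friction(reports):
    """Return (issue, distinct_team_count) pairs, most widely shared first.

    `reports` is an iterable of (team, issue) pairs. Repeated reports
    from the same team count once, because breadth is what matters here.
    """
    teams_by_issue = defaultdict(set)
    for team, issue in reports:
        teams_by_issue[issue].add(team)
    counts = [(issue, len(teams)) for issue, teams in teams_by_issue.items()]
    # Sort by team count descending, then by issue name for a stable order.
    return sorted(counts, key=lambda pair: (-pair[1], pair[0]))


# Made-up feedback from four hypothetical grantees.
reports = [
    ("wallet-a", "routing-edge-case"),
    ("game-b", "routing-edge-case"),
    ("game-b", "routing-edge-case"),  # duplicate report, counted once
    ("social-c", "routing-edge-case"),
    ("game-b", "shallow-liquidity"),
    ("payments-d", "shallow-liquidity"),
    ("wallet-a", "sdk-docs-gap"),
]

ranking = rank_friction(reports)
for issue, team_count in ranking:
    print(f"{issue}: {team_count} team(s)")
```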

Risks and mitigations

No approach is risk-free. Distributed experiments can generate fragmentation if integrations diverge wildly, or create coordination overhead if many teams require bespoke solutions. STON.fi mitigates these risks by prioritizing modular primitives, publishing reference implementations, and maintaining clear developer documentation and SDKs. Grants that produce reusable components receive additional amplification, accelerating standardization.

The long game: collective learning over single upgrades

The notable insight from this strategy is that collective, iterative learning can be more valuable than any single product upgrade. By letting the ecosystem reveal its pain points, STON.fi channels scarce engineering capacity toward the problems that actually limit adoption. Over time, as more applications converge on shared infrastructure and standards, TON’s overall composability and developer velocity improve — a network-level upgrade that grows from many small, well-observed changes rather than a few large releases.

STON.fi’s grants-as-testing-framework is an elegant inversion of the usual funding playbook: funding becomes a discovery engine, and the ecosystem, not a central product roadmap, signals what matters. For a deeper, on-the-record walkthrough of this strategy, read the full interview here: coinedition.com/inside-ston-fi...