I once watched a matching engine start misbehaving in the most unsettling way, not with a crash or an exploit or the kind of clean, cinematic failure you can summarize in a post-mortem, but with a quiet loss of rhythm that made the whole system feel unreliable even while every dashboard insisted it was fine. The numbers still printed, the checks still passed, and the outputs still looked “correct,” yet the behavior had become soft at the edges, because a few milliseconds of jitter in the wrong places is enough to turn timing assumptions into wishful thinking, especially when packets arrive out of order and processes drift just far enough apart that synchronization stops feeling like a guarantee.

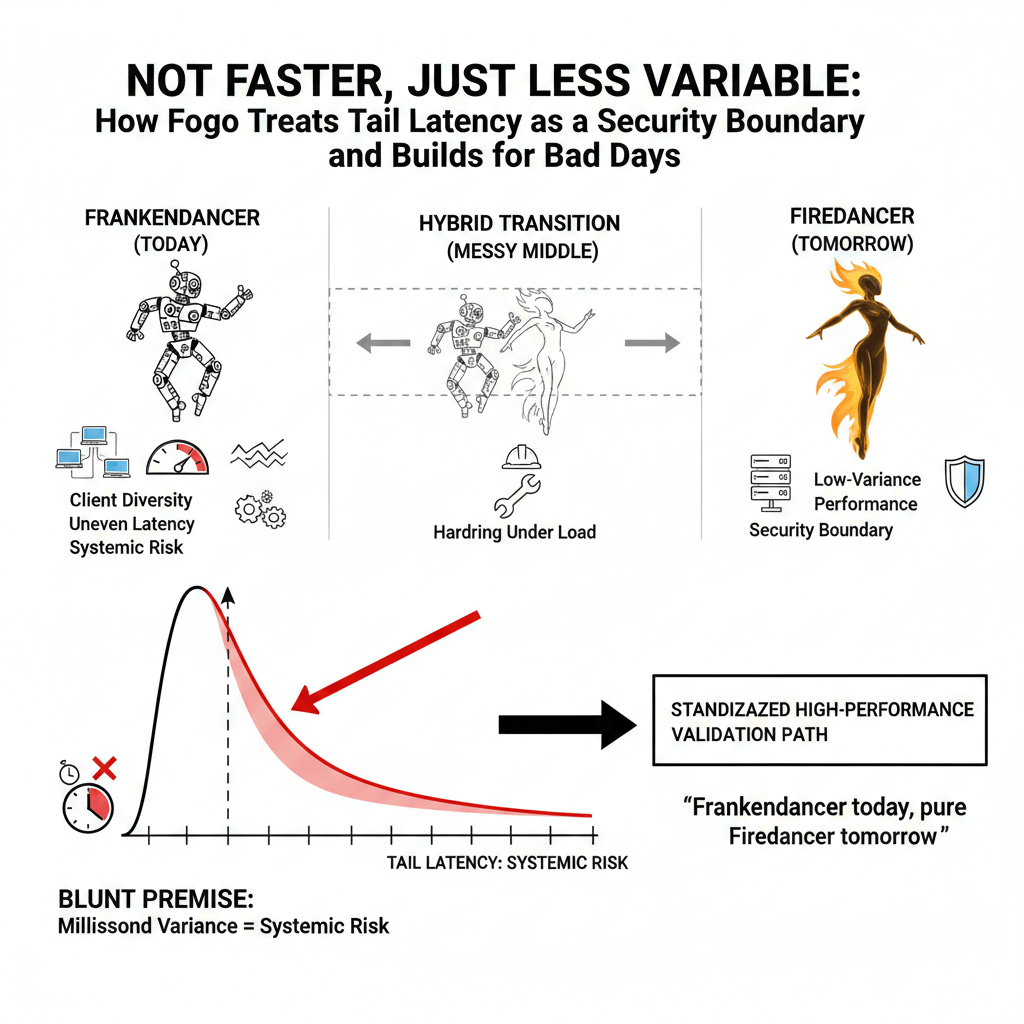

That memory is why I keep coming back to Fogo. It isn’t because it promises speed (every chain promises speed when it needs attention). It’s because it treats latency as a constraint that reshapes the entire architecture rather than a statistic you throw on a banner. And that’s why the line “Frankendancer today, pure Firedancer tomorrow” keeps sticking in my head. Frankendancer is the hybrid that pairs Firedancer’s high-performance components with the existing Agave validator, and the line doesn’t try to sell inevitability or pretend the path is clean. It admits the awkward middle exists, admits the system has to operate through that middle while real users and real value sit on top of it, and quietly signals something many projects refuse to say out loud: infrastructure doesn’t jump from theory to perfection but survives a messy transition and hardens under load until it either becomes dependable or breaks in public.

Fogo’s approach reads like it begins from one blunt premise: the moment you push block cadence into tens of milliseconds, variance stops being a minor inconvenience and starts behaving like a systemic risk, because the tail is no longer a statistical curiosity but the part of the distribution that defines what the network feels like under pressure. In that world, client diversity may still be philosophically appealing, but it also becomes a real source of friction, because heterogeneity introduces uneven performance profiles, uneven latency behavior, and uneven failure patterns, which is exactly the kind of unpredictability you end up paying for when the system is running close to its physical limits. So the “Frankendancer first, full Firedancer later” choice doesn’t land as a branding detail to me, but as a practical confession that they intend to standardize around a high-performance validation path, that they know they can’t skip the hybrid stage, and that they are willing to let the chain live through an imperfect but runnable middle phase before claiming the final form.
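To make the point about the tail concrete, here is a minimal Python sketch with made-up block intervals (nothing here is measured Fogo data). It uses the nearest-rank percentile method to show how an average can look healthy while the 99th percentile tells a very different story.

```python
import math
import statistics

def nearest_rank_percentile(samples, p):
    """p-th percentile (0 < p <= 100) by the nearest-rank method: the smallest
    sample such that at least p% of all samples are less than or equal to it."""
    if not samples or not 0 < p <= 100:
        raise ValueError("need at least one sample and 0 < p <= 100")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank into the sorted samples
    return ordered[rank - 1]

# Hypothetical block intervals in ms: 98 blocks on a 40 ms target, 2 stalls.
intervals_ms = [40] * 98 + [400] * 2

mean_ms = statistics.mean(intervals_ms)
p50_ms = nearest_rank_percentile(intervals_ms, 50)
p99_ms = nearest_rank_percentile(intervals_ms, 99)
print(f"mean={mean_ms:.1f} ms  p50={p50_ms} ms  p99={p99_ms} ms")
```

With 98 blocks on target and two stalls at 400 ms, the mean comes out around 47 ms, which looks nearly on target, while p99 sits at 400 ms. The average hides exactly the blocks that define what the network feels like under pressure.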

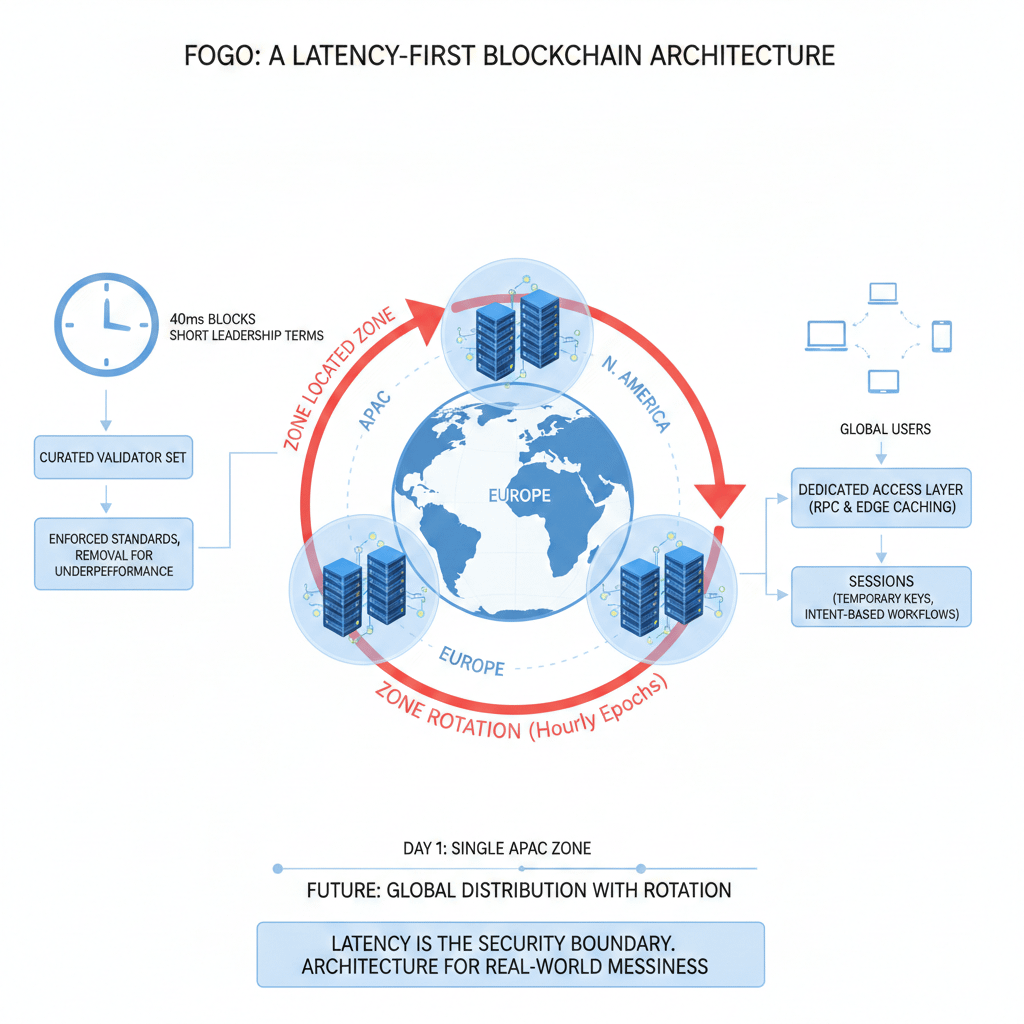

Once you accept latency as the primary constraint, it becomes harder to keep pretending geography is a background detail, because distance dominates consensus more ruthlessly than any code optimization ever will, and global distribution without a plan quietly turns into global delay. That is where the zones concept feels unusually grounded, because it takes the simplest truth in networking—signals take time to travel—and treats it as something the protocol should acknowledge rather than something operators should suffer through. Co-locating validators tightly, ideally within the same data center, is not a cute trick so much as a way to compress the time consensus messages spend traveling, so the network’s behavior becomes limited more by computation and coordination than by the speed of light and the mess of the public internet.
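The physics here is easy to check with back-of-the-envelope arithmetic. The sketch below assumes the common rule of thumb that light in optical fiber covers roughly 200 km per millisecond (about two-thirds of its vacuum speed) and uses rounded, hypothetical distances. It computes a lower bound only, ignoring routing detours, queuing, and processing.

```python
# Rule of thumb (assumption): light in fiber travels ~200,000 km/s, i.e. ~200 km per ms.
FIBER_KM_PER_MS = 200.0
BLOCK_TARGET_MS = 40.0  # the 40 ms block target from Fogo's test configuration

def one_way_delay_ms(distance_km: float) -> float:
    """Lower bound on one-way fiber propagation delay; ignores routing, queuing, processing."""
    return distance_km / FIBER_KM_PER_MS

def round_trip_ms(distance_km: float) -> float:
    """Lower bound on a request/response round trip over the same distance."""
    return 2 * one_way_delay_ms(distance_km)

# Hypothetical, rounded distances for illustration only.
for label, km in [("same data center", 0.5), ("same metro", 50.0), ("intercontinental", 10_000.0)]:
    rtt = round_trip_ms(km)
    print(f"{label:>17}: RTT >= {rtt:8.3f} ms ({rtt / BLOCK_TARGET_MS:.1%} of a 40 ms block)")
```

Even under ideal conditions, one intercontinental round trip (about 100 ms for 10,000 km) is more than twice the 40 ms block target, while a round trip inside one data center costs a few microseconds. No code optimization recovers that gap, which is the whole argument for co-location.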

But what makes the idea feel more like an architecture than a shortcut is the insistence that co-location cannot become a permanent anchor, because permanent anchors turn into permanent jurisdictions, permanent dependencies, and permanent centers of power. That is why rotation matters, because moving the active zone across regions over time is the only way to keep a low-latency design from becoming structurally tied to one legal regime, one infrastructure cluster, and one set of assumptions about who gets to sit closest to the heart of consensus. In that sense, decentralization stops being a static node count and starts looking like a time-based strategy, where the question is not only “how many validators exist,” but also “where does the system live this epoch, where does it live next epoch, and how does it prevent its fastest configuration from becoming its most capturable configuration.”

The test configuration makes that intent feel less theoretical, because it doesn’t just gesture at speed, it hard-codes an aggressive operational rhythm with a target of 40ms blocks, short leadership terms measured in seconds, and hour-long epochs that deliberately move consensus between regions such as APAC, Europe, and North America. That cadence reads like a system that wants to learn, early and repeatedly, what breaks when you combine ultra-tight timing with real-world geography and forced relocation, because if a network is going to claim it can manage jurisdictional spread while staying performance-tight, it has to demonstrate that the relocation itself doesn’t become the hidden tax that ruins everything under stress.
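That cadence is easy to turn into arithmetic. Here is a minimal sketch using the 40 ms block target and hour-long epochs from the test configuration. The genesis timestamp, the region order, and the simple round-robin rotation rule are my assumptions for illustration, not Fogo’s documented schedule, and leader terms are left out because their exact length isn’t stated.

```python
from datetime import datetime, timedelta, timezone

# From the test configuration: 40 ms blocks and hour-long epochs.
BLOCK_MS = 40
EPOCH_MS = 60 * 60 * 1000
# Assumed region order; the real rotation schedule may differ.
ZONES = ("APAC", "Europe", "North America")

BLOCKS_PER_EPOCH = EPOCH_MS // BLOCK_MS  # blocks per epoch at the target cadence

def epoch_at(ts: datetime, genesis: datetime) -> int:
    """Index of the hour-long epoch containing `ts`, counted from a hypothetical genesis."""
    elapsed_ms = int((ts - genesis).total_seconds() * 1000)
    if elapsed_ms < 0:
        raise ValueError("timestamp precedes genesis")
    return elapsed_ms // EPOCH_MS

def active_zone(epoch: int) -> str:
    """Hypothetical round-robin rule: each epoch hands consensus to the next region."""
    return ZONES[epoch % len(ZONES)]

genesis = datetime(2025, 1, 1, tzinfo=timezone.utc)  # made-up genesis time
ts = genesis + timedelta(hours=4, minutes=30)
print(BLOCKS_PER_EPOCH, epoch_at(ts, genesis), active_zone(epoch_at(ts, genesis)))
```

At the target cadence each epoch spans 90,000 blocks, so every relocation is a handoff between two long runs of tightly timed blocks, which is why the handoff itself deserves as much scrutiny as the steady state.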

Mainnet reality, though, is where the “messy middle” shows up again in a way that feels honest rather than contradictory, because the network is documented as running with a single active zone in APAC while publishing entrypoints and validator identities, which is exactly the sort of starting posture you would expect from something that is trying to behave like infrastructure rather than theater. If you’re serious about stability, you don’t introduce every moving part on day one; you stabilize a baseline, you prove it can carry load without wobbling, and then you widen the surface area of complexity only after the simplest version can survive the kind of market day that turns most chains into delayed, inconsistent, half-working machines.

Then there is the part that most people instinctively resist, yet it is the part that makes the most operational sense once you commit to a latency-first design: the curated validator set. The word “curated” immediately feels like a step backward because it sounds like a gate, but the underlying reason is brutally simple, because in an ultra-low latency system weak participants don’t only degrade their own experience, they impose externalities on everyone else by inflating the tail, widening variance, and becoming the drag coefficient that defines the network’s ceiling. If your ambition is tens of milliseconds, you cannot treat validator performance like an individual hobby or a private preference; either you enforce standards and remove persistent underperformance, or you accept that the slowest honest participants will define what the system can be, no matter how fast the best operators are.
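A small model shows why the slowest participants matter, and also when they matter. The sketch assumes a generic BFT-style rule where a round completes once votes holding at least two-thirds of total stake have arrived; Fogo’s exact voting rules aren’t described here, and the validators and latencies are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Vote:
    stake: int          # stake weight (hypothetical units)
    latency_ms: float   # when this validator's vote reaches the leader

def quorum_latency_ms(votes: list[Vote], num: int = 2, den: int = 3) -> float:
    """Time until votes holding at least num/den of total stake have arrived."""
    total = sum(v.stake for v in votes)
    gathered = 0
    for v in sorted(votes, key=lambda v: v.latency_ms):
        gathered += v.stake
        if gathered * den >= total * num:  # integer comparison avoids float rounding
            return v.latency_ms
    raise ValueError("quorum unreachable")

# Hypothetical four-validator set with equal stake.
one_slow = [Vote(25, 5.0), Vote(25, 6.0), Vote(25, 7.0), Vote(25, 30.0)]
two_slow = [Vote(25, 5.0), Vote(25, 6.0), Vote(25, 30.0), Vote(25, 35.0)]

print(quorum_latency_ms(one_slow))  # 7.0: one slow 25% validator is left out of the quorum
print(quorum_latency_ms(two_slow))  # 30.0: slow stake above 1/3 now sets the pace
```

The nuance the model surfaces: a slow minority holding less than a third of stake is simply left out of the quorum, but once slow stake crosses a third, the quorum cannot form without it and its latency becomes the network’s round time.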

What’s uncomfortable, and also quietly true, is that many proof-of-stake systems already operate with effective concentration: stake-weighted supermajorities decide outcomes, governance coalitions form, and social coordination exists whether we acknowledge it or not. So the real question is not whether governance exists, but whether it is implicit and deniable or explicit and accountable. Fogo’s model pulls performance governance into the open, including the stated ability to remove validators that consistently underperform and to sanction behavior that harms the network, such as destructive MEV extraction. That is controversial on principle, but it is coherent in a world where you are optimizing not for maximum inclusivity at all costs but for predictable behavior under tight timing constraints.
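“Consistently underperform” needs an operational definition, or removal becomes a judgment call. One possible shape, sketched below as a purely hypothetical policy (the window size, the miss threshold, and what counts as “missing a target” are all assumptions, not Fogo’s rules), is to flag a validator only when it misses a performance target in most of a recent window of epochs, so a single bad epoch is never enough.

```python
from collections import deque

class PerformanceTracker:
    """Hypothetical policy: flag a validator only if it missed its target in at
    least `max_misses` of the last `window` epochs. Not Fogo's actual rule."""

    def __init__(self, window: int = 10, max_misses: int = 6):
        if not 0 < max_misses <= window:
            raise ValueError("need 0 < max_misses <= window")
        self.max_misses = max_misses
        # Rolling record of recent epochs; True means the target was missed.
        self.history = deque(maxlen=window)

    def record_epoch(self, missed_target: bool) -> None:
        self.history.append(missed_target)

    def should_flag(self) -> bool:
        return sum(self.history) >= self.max_misses

tracker = PerformanceTracker(window=10, max_misses=6)
for missed in [True, False, False, True, False]:
    tracker.record_epoch(missed)
print(tracker.should_flag())  # False: 2 misses in the last 5 epochs
for _ in range(4):
    tracker.record_epoch(True)
print(tracker.should_flag())  # True: 6 misses in the last 9 epochs
```

Writing the window and threshold down is also what moves governance from deniable to accountable: it becomes possible to check whether a removal followed the stated rule.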

None of this reads like a bet that retail users will suddenly care about 40ms blocks, because retail users don’t wake up thinking about tail latency, and they shouldn’t have to. The bet is that more on-chain activity starts to resemble real infrastructure, where workflows integrate with systems that already have strict SLA thinking—finance, settlement, risk controls, high-frequency coordination environments—and where timing and reliability become part of correctness rather than a nice-to-have. When a chain enters that world, it stops being judged like a community and starts being judged like a system, and the question stops being “can it be fast on a good day” and becomes “does it stay well-behaved on a bad day when volatility spikes, when congestion hits, when the system is forced to operate at the edge of its assumptions.”

That is also why the access layer matters: a chain can have tight consensus inside a co-located zone and still feel chaotic to the outside world if the read path collapses, if observers experience lag and inconsistency, or if RPC behavior becomes the real bottleneck under bursty traffic. The emphasis on a dedicated RPC layer decoupled from the validators, paired with edge caching so observers far from the active zone can still read the chain quickly, reads like a practical response to a problem that low-latency chains often discover the hard way: speed at the core means nothing if the edges experience the system as unreliable.
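Edge caching trades freshness for load. The sketch below is a generic read-through cache with a time-to-live, the kind of thing an edge node might put in front of a distant RPC endpoint. `fetch` stands in for a hypothetical upstream call and the TTL is an illustrative value, not anything Fogo publishes; an injectable clock keeps it testable.

```python
import time
from typing import Callable, Dict, Hashable, Tuple

class EdgeReadCache:
    """Generic read-through TTL cache; `fetch` is a hypothetical upstream RPC call."""

    def __init__(self, fetch: Callable[[Hashable], object], ttl_s: float,
                 clock: Callable[[], float] = time.monotonic):
        self.fetch = fetch
        self.ttl_s = ttl_s
        self.clock = clock
        self._entries: Dict[Hashable, Tuple[float, object]] = {}  # key -> (fetched_at, value)
        self.upstream_calls = 0

    def get(self, key: Hashable) -> object:
        now = self.clock()
        hit = self._entries.get(key)
        if hit is not None and now - hit[0] < self.ttl_s:
            return hit[1]          # fresh enough: serve from the edge
        self.upstream_calls += 1
        value = self.fetch(key)    # miss or stale: go upstream once, then cache
        self._entries[key] = (now, value)
        return value

# Fake clock so the example is deterministic.
fake_now = [0.0]
cache = EdgeReadCache(fetch=lambda k: f"state-of-{k}", ttl_s=0.2, clock=lambda: fake_now[0])
for _ in range(100):
    cache.get("account-A")        # 100 reads inside one TTL window
fake_now[0] = 0.5
cache.get("account-A")            # entry is stale now, so this refreshes from upstream
print(cache.upstream_calls)       # 2
```

With a 200 ms TTL, a hundred reads of the same key cost one upstream call, but served data can trail the chain by about five 40 ms blocks plus the upstream fetch delay. Where to set that bound is exactly the kind of edge-behavior decision that decides whether observers experience the chain as reliable.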

And then there is the application surface, because if you want workloads that behave like systems, you cannot leave user interaction trapped in signature spam and fee friction, especially when the goal is fluid, repeated actions that cannot afford to feel like a ceremony each time. That is where sessions come in: a temporary session key with delegated permissions, a session manager recorded on-chain, and sponsored transactions flowing through a paymaster model, along with guardrails such as restricted program domains, token limits for bounded sessions, and explicit expiry and renewal. This smooths the surface so applications can be built around intent and continuity rather than constant re-authentication, while still acknowledging the trade-off: sponsorship and onboarding introduce real dependencies, especially early on, because abstraction always moves complexity somewhere else rather than erasing it.
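The guardrails are the interesting part of sessions, because they bound what a delegated key can do. Here is a minimal sketch of those checks. The `Session` class, its field names, the program identifiers, and the renewal rule are hypothetical illustrations, not Fogo’s API, and the on-chain session manager and paymaster sponsorship are deliberately left out.

```python
from dataclasses import dataclass

@dataclass
class Session:
    """Hypothetical session-key grant; field names are illustrative, not Fogo's API."""
    session_key: str
    allowed_programs: frozenset[str]  # restricted program domains
    token_limit: int                  # remaining spend budget for a bounded session
    expires_at: float                 # unix seconds

    def authorize(self, program: str, amount: int, now: float) -> bool:
        if now >= self.expires_at:
            return False              # expired: the user must renew
        if program not in self.allowed_programs:
            return False              # outside the delegated domain
        if amount < 0 or amount > self.token_limit:
            return False              # would exceed the bounded budget
        self.token_limit -= amount
        return True

    def renew(self, new_expiry: float, extra_limit: int = 0) -> None:
        # Hypothetical: a real flow would likely require the user's main key to sign this.
        self.expires_at = max(self.expires_at, new_expiry)
        self.token_limit += extra_limit

s = Session("ephemeral-key-1", frozenset({"dex.example"}), token_limit=100, expires_at=1_000.0)
print(s.authorize("dex.example", 60, now=10.0))     # True: in domain, within budget
print(s.authorize("dex.example", 60, now=20.0))     # False: only 40 left
print(s.authorize("other.example", 1, now=30.0))    # False: program not delegated
print(s.authorize("dex.example", 1, now=1_000.0))   # False: session expired
```

The design goal is that a leaked or misused session key fails closed: outside its program domain, past its budget, or after expiry, it simply stops authorizing.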

When I put all of that together, I don’t see a chain chasing applause with a faster number, and I don’t even see “a faster Solana-style network” as the main story; I see a network treating tail latency like a security boundary and then arranging its architecture, its operational model, and its governance assumptions around that decision. A canonical high-performance validation path reduces variability, zones compress distance, rotation prevents speed from turning into permanent jurisdictional capture, curation enforces operational discipline, a decoupled access layer protects observability under stress, and sessions smooth the application surface so workflows can behave like workflows instead of like rituals.

That doesn’t make it flawless, and in some ways it makes the hard questions sharper rather than softer, because once you build this way you can’t hide behind slogans, and you eventually have to prove that zone rotation remains meaningful on mainnet, that curation doesn’t drift into capture, that monoculture risk is managed rather than ignored, and that incentives in a tight-cadence environment don’t distort validator behavior over time. But those are the right questions, because those are the questions you ask when you are evaluating settlement infrastructure rather than evaluating narrative comfort.

If I’m being honest, what keeps pulling me back isn’t the 40ms target itself, and it isn’t the thrill of shaving milliseconds off a block timer, but the quiet realism in admitting the messy middle and building around it anyway. I’ve seen systems fail without “failing,” drifting into that gray zone where everything is technically correct yet nothing feels trustworthy, and once you’ve lived through that, you stop being impressed by peak performance and start caring about how a system behaves when conditions turn unfriendly.

So when I look at Fogo, I don’t feel like I’m reading a promise, and I don’t feel like I’m reading a pitch; I feel like I’m watching someone attempt to keep the edges sharp on purpose, because they understand that the real test is not the benchmark day, but the ugly day when the world is noisy, the traffic is hostile, the assumptions are stressed, and the chain has to stay well-behaved without demanding applause for it. And maybe that’s the only ending that makes sense here, because if this ever becomes real infrastructure, it won’t be because it was the loudest thing in the room, it’ll be because, on the day it mattered, it held its rhythm so cleanly that nothing around it had to think about it twice.