The last few weeks have felt like watching everyone race to ship the same promise with different fonts. Faster blocks. Shorter charts. Cleaner screenshots. I can respect performance. What I no longer trust is the idea that performance is the same thing as a calmer experience.

I didn’t rush to praise or dismiss FOGO when I first saw it summarized as fast SVM. I still can’t claim I’ve watched it through enough ugly edge conditions to call it proven. But one design question kept sticking because it decides whether a chain becomes a place people ship products, or a place people ship countermeasures.

Where does the incentive to probe your edges end up living?

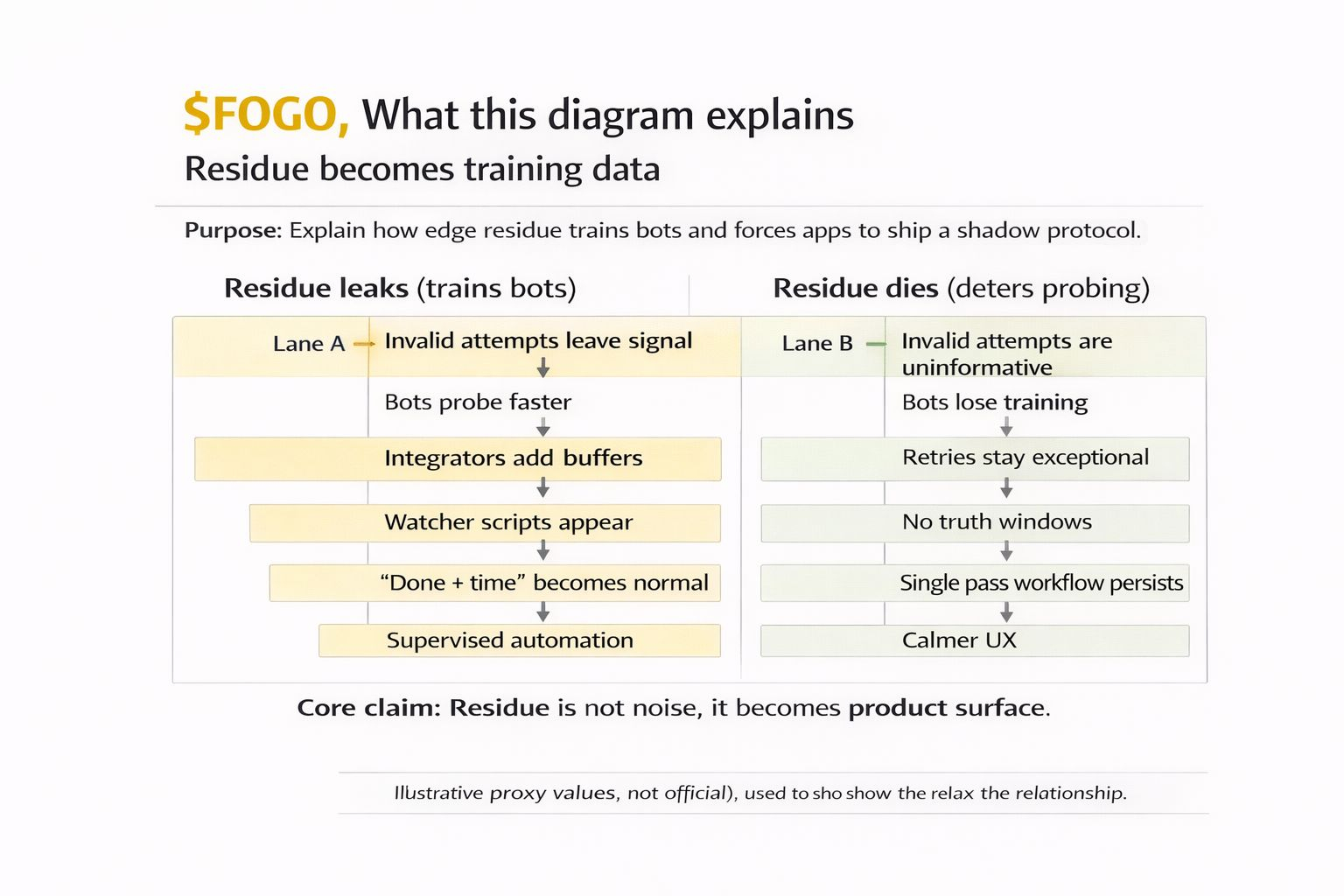

Most people talk about bots like they are an external enemy. In practice they are a mirror. Bots don’t invent ambiguity. They locate the seams where your system is undecided, then they turn those seams into routine. If a chain lets almost-valid attempts leave behind signals, bots will learn the shape of those signals. If an invalid attempt can be retried into eventual success, bots will treat persistence as strategy. And once persistence becomes strategy, builders start assuming it too, quietly, until it becomes normal UX.

That is the hidden tax. Not the presence of bots, but the behavioral layer they cause everyone else to build.

Residue is not noise. It is training data.

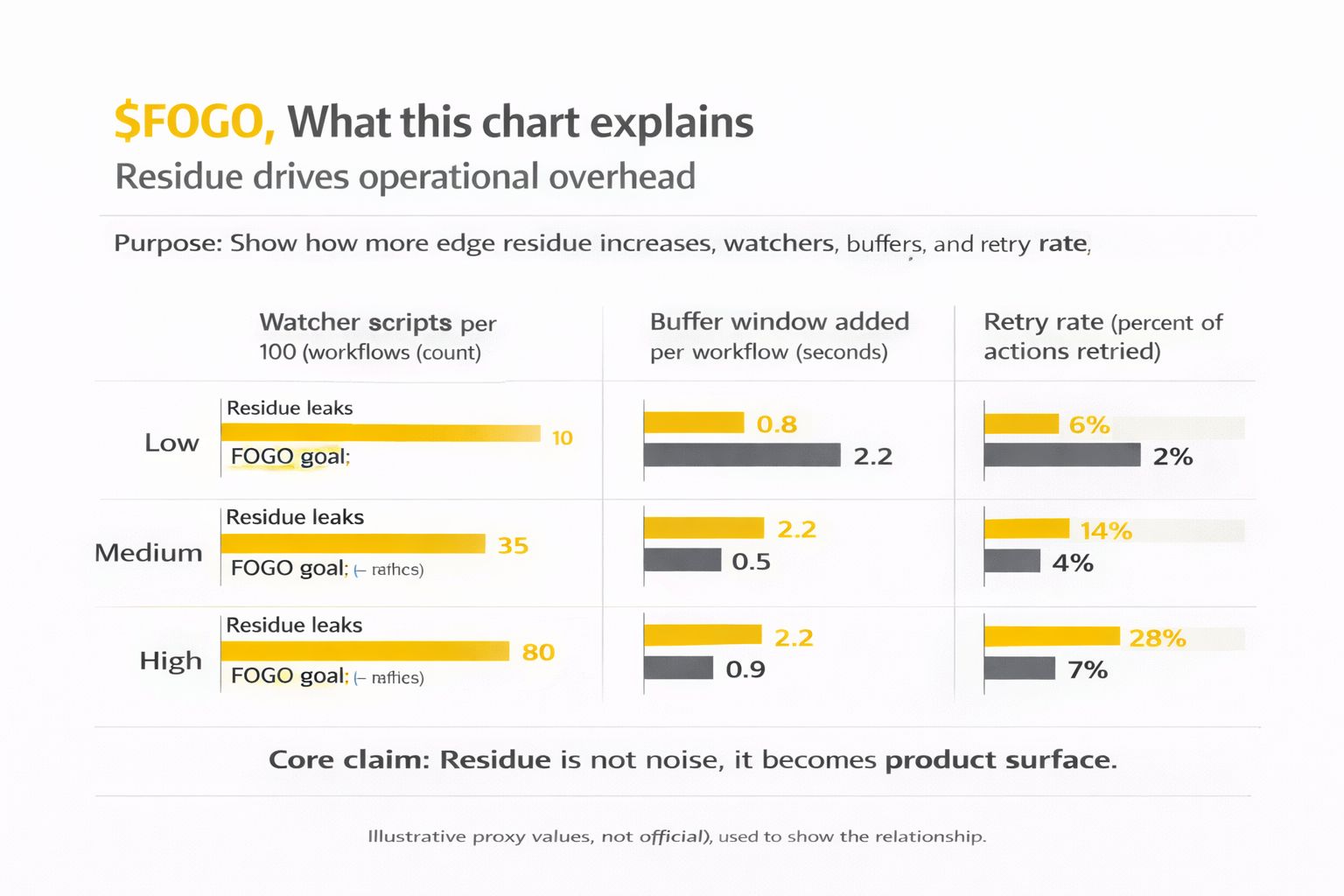

You can watch it happen in slow motion on most stacks. Nothing dramatic breaks. Blocks keep coming. Transactions still land. But the application layer grows a shadow protocol. A retry branch here. A delay window there. A watcher service that waits for enough agreement. A reconciliation job that runs after the first success event because success stopped feeling final on first sight. Each piece is rational in isolation. Together they are a confession. The system is no longer giving the ecosystem one clean definition of accepted. It is forcing everyone to negotiate their own.
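To make the shadow protocol concrete, here is a minimal sketch of the two integration styles. It is a toy, not any real SDK: `Receipt`, `single_pass_submit`, `defensive_submit`, the observer callables, and every default value are hypothetical names and numbers chosen for illustration.

```python
import time
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Receipt:
    accepted: bool


# Hypothetical client interface (an assumption, not a real API):
# submit(tx) returns a Receipt; each observer reports whether it
# currently sees tx as accepted.
Submit = Callable[[str], Receipt]
Observer = Callable[[str], bool]


def single_pass_submit(submit: Submit, tx: str) -> bool:
    """One attempt, one answer: the receipt is treated as final."""
    return submit(tx).accepted


def defensive_submit(
    submit: Submit,
    observers: Sequence[Observer],
    tx: str,
    max_retries: int = 3,
    quorum: int = 2,
    delay_s: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """The shadow protocol: retry branch, delay window, agreement watcher."""
    for _ in range(max_retries):
        if not submit(tx).accepted:
            sleep(delay_s)  # delay window before the retry branch fires
            continue
        # Watcher: "accepted" only counts once enough observers agree.
        agreeing = sum(1 for sees in observers if sees(tx))
        if agreeing >= quorum:
            return True
        sleep(delay_s)  # success did not feel final, so wait and try again
    return False
```

Every parameter on `defensive_submit` is a private answer to a question the protocol left open. Two integrators who pick different values for `quorum` or `delay_s` are running two different definitions of accepted.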

Bots love negotiated systems. Negotiation means multiple valid paths. Multiple valid paths mean advantage goes to whoever can explore them fastest.

So the axis I care about here is residue.

When an invalid attempt happens, does it vanish cleanly, or does it leave enough trace that the ecosystem can react, learn, and monetize? Residue can be on-chain as partial state. It can be in transient acceptance windows where observers disagree just long enough to matter. It can be in retry pricing and mempool dynamics. The form changes. The loop stays the same. The system emits a hint. Bots treat hints as product surface.

Once hints exist, the ecosystem settles into a predictable pattern.

Bots probe edges because probing has positive expected value. Integrators add defensive logic because one bad week taught them to stop trusting first-pass outcomes. SDKs add helpful fallbacks. Indexers tolerate gaps. Exchanges bolt on their own confirmation policies. Over time nobody shares a single truth. Everyone shares a family of heuristics. And when truth becomes heuristics, disputes become workload.
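The expected value claim can be put in rough numbers. The toy model below is an assumption of mine, not a measurement of any chain: each probe pays `payoff` with probability `p` and costs `cost`, and residue is modeled as a fixed bump `learn_rate` to `p` after every attempt. All names and figures are hypothetical.

```python
def probe_ev(p_success: float, payoff: float, cost: float) -> float:
    """Expected value of a single probe: chance of a win minus the fee paid."""
    return p_success * payoff - cost


def cumulative_ev(
    attempts: int,
    p_start: float,
    learn_rate: float,
    payoff: float,
    cost: float,
) -> float:
    """Total EV over repeated probes.

    learn_rate models residue: how much each attempt's trace raises the
    odds of the next one. learn_rate == 0 means attempts teach nothing.
    """
    total = 0.0
    p = p_start
    for _ in range(attempts):
        total += probe_ev(p, payoff, cost)
        p = min(1.0, p + learn_rate)
    return total


# Same bot, same fees, same payoff. Only the residue differs.
with_residue = cumulative_ev(4, p_start=0.0, learn_rate=0.25, payoff=100, cost=10)
without_residue = cumulative_ev(4, p_start=0.0, learn_rate=0.0, payoff=100, cost=10)
print(with_residue, without_residue)
```

With these made-up inputs, the probing campaign is profitable only when failed attempts teach something. Set `learn_rate` to zero and the same four probes are a pure cost.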

This is why high-tempo execution cultures make me more cautious, not less. Speed is not only throughput. It is propagation. In a slow system ambiguity spreads at human pace. In a fast system it spreads at machine pace. Bots react before operators can interpret. Apps trigger follow-on actions immediately. Markets price what they observe, not what you intended. If your boundary leaks, it leaks into a world that can weaponize it in milliseconds.

FOGO is interesting to me if it is trying to run SVM-shaped execution without importing a recovery-shaped culture as the default operating model. The bet reads like it wants invalid attempts to die without leaving a useful trail. Not just rejected, but uninformative. That is the difference between a chain that deters probing and a chain that trains it.

If the protocol responds by letting half-states linger, bots get feedback. The ecosystem learns the wrong lesson.

Try more. Probe harder. Build around the leak.

If the protocol responds by filtering earlier and making invalid outcomes disappear without publishing exploitable signals, that lesson becomes harder to learn.

The operator version of this is not abstract. You see it in the artifacts that appear when a chain is stressed. Do watcher scripts proliferate? Do buffer windows become default? Does “done” quietly become “done plus time”? The day “done plus time” becomes normal, bots have already won, because you’ve admitted acceptance is a range, not a point.

The systems that age best are not the ones that never say yes. They are the ones that can say no quickly, consistently, and without drama. That posture changes incentives. If invalid attempts do not teach, bots lose training. If acceptance closes cleanly, observers don’t disagree long enough to be exploited. If retries stay exceptional, you don’t teach the ecosystem that persistence is legitimacy.

None of this is free. The cost shows up where builders actually feel it.

Shrinking residue usually means giving up some permissiveness. You do not get to treat retries as normal UX. You do not get to rely on almost-works behavior as a development shortcut. You end up designing with eligibility and boundary behavior in mind earlier than you wanted to. It is less romantic, and it can slow certain styles of iteration.

Markets rarely reward that early because freedom is an easy narrative. Discipline is not.

But I’ve learned to treat the opposite as its own constraint. A system that feels flexible at the protocol layer can become brutally strict at the operational layer. It forces builders to carry uncertainty in their app. It forces integrators to build private buffers. It rewards the teams with the best routing, the best heuristics, the best infrastructure, the best babysitters.

That is not decentralization. That is decentralization of responsibility into the place least suited to carry it.

Now the token, and I mention it late on purpose because it only matters if this posture is real.

If FOGO’s goal is to keep probing from becoming a profitable culture, then enforcement has to stay coherent under adversarial conditions. Coherence costs operating capital. Fees and validator incentives are not decorative. They decide whether the system can keep rejecting cleanly without turning rejection into politics, and whether coordination behavior stays stable when demand spikes.

If $FOGO matters, it should be coupled to the flows that fund that coherence, the budgets that keep boundary behavior boring even when incentives are sharp. If it is not coupled, value leaks elsewhere anyway, into privileged infrastructure and private deals, and the token becomes a badge rather than a claim on the real work.

So I don’t want to end with certainty. I want to end with a criterion I can check without needing insider context.

The next time FOGO is pushed hard, I’ll look for what does not appear. No sudden growth of watcher scripts. No widening buffer windows baked into SDKs. No quiet acceptance ladders showing up in every serious integration. No new folklore explaining why first-pass success can’t be trusted.

If the ecosystem stays single-pass under pressure, then the edge tax is being contained at the protocol level. And that is the kind of performance that actually makes infrastructure feel calmer to humans.