I didn’t start thinking about memory as a blockchain problem. I started thinking about it because I noticed how forgetful most so-called “AI integrations” actually are. You ask a model something, it responds, and then the context evaporates unless you manually stuff it back in. It works, but it feels shallow. Like talking to someone who nods politely and forgets your name the next day.

That discomfort is what made me look at what VanarChain is doing a bit more closely. Not the marketing layer. The architecture layer. And what struck me was that they’re not treating AI like a plugin. They’re treating memory like infrastructure.

Most Layer 1 conversations still circle around TPS numbers and block times. Fair enough. If your network chokes at 20 transactions per second, nothing else matters. But when I see a chain talking about semantic memory and persistent AI state instead of just throughput, it signals a different priority. It’s almost quiet. Not flashy. Underneath, though, it’s structural.

As of February 2026, Vanar reports validator participation in the low hundreds. That’s not Ethereum-scale decentralization, obviously. But it’s not a lab experiment either. At the same time, more than 40 ecosystem deployments have moved into active status. That number tells me developers are not just theorizing about AI workflows. They’re testing them in live environments, with real users and real risk.

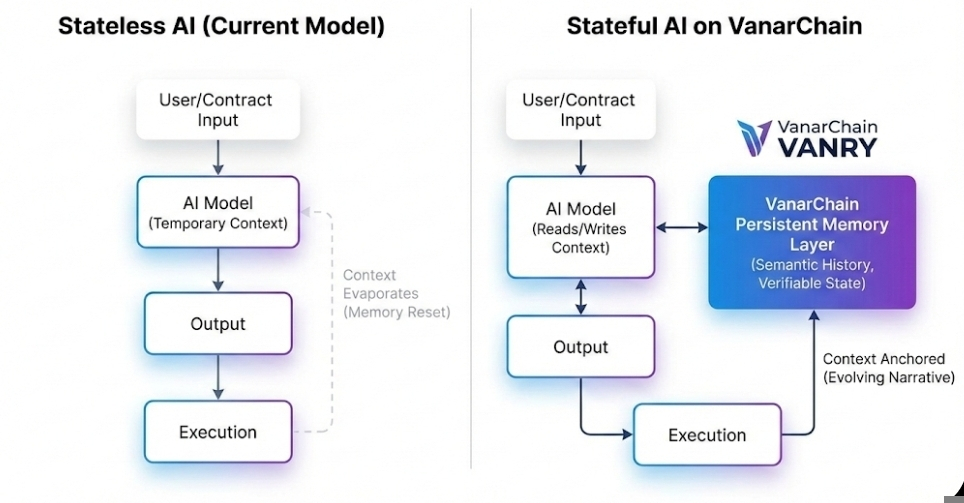

Here’s the part that changed my framing. Most AI on-chain today is stateless. A contract calls an AI model, gets an output, executes something. Done. Clean. Contained. But the system does not remember why it made that decision unless someone explicitly stores it. And even then, it’s usually raw output, not structured reasoning.

Vanar’s direction suggests memory itself should sit closer to consensus. On the surface, that means an AI agent can retain context across multiple interactions. Simple idea. Underneath, it means that context becomes verifiable. Anchored. Not just a temporary prompt window that can be rewritten quietly.
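To make "verifiable" and "anchored" concrete, here is a minimal sketch of a hash-chained memory log. It is not Vanar's API or design. `MemoryLog`, `append`, and `verify` are hypothetical names, and the sketch assumes that only the latest hash would be committed on-chain while the entries themselves could live anywhere.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, payload: dict) -> str:
    """Hash a memory entry together with the hash of the entry before it."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


@dataclass
class MemoryLog:
    """Hypothetical append-only agent memory; each entry commits to its predecessor."""
    entries: list = field(default_factory=list)

    def head(self) -> str:
        return self.entries[-1]["hash"] if self.entries else GENESIS

    def append(self, payload: dict) -> str:
        prev = self.head()
        entry_hash = _digest(prev, payload)
        self.entries.append({"prev": prev, "payload": payload, "hash": entry_hash})
        return entry_hash  # assumption: this is the value that gets anchored on-chain

    def verify(self, anchored_head: str) -> bool:
        """Recompute the whole chain and compare it with the anchored head hash."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["payload"]):
                return False
            prev = entry["hash"]
        return prev == anchored_head


log = MemoryLog()
log.append({"event": "governance_vote", "choice": "yes"})
anchored = log.append({"event": "volatility_spike", "asset": "XYZ"})
ok_before = log.verify(anchored)

# Quietly rewriting an old entry breaks verification against the anchored head.
log.entries[0]["payload"]["choice"] = "no"
ok_after = log.verify(anchored)
```

Here `ok_before` is true and `ok_after` is false. The design point is that the anchor is small (one hash) while any later rewrite of the history becomes detectable.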

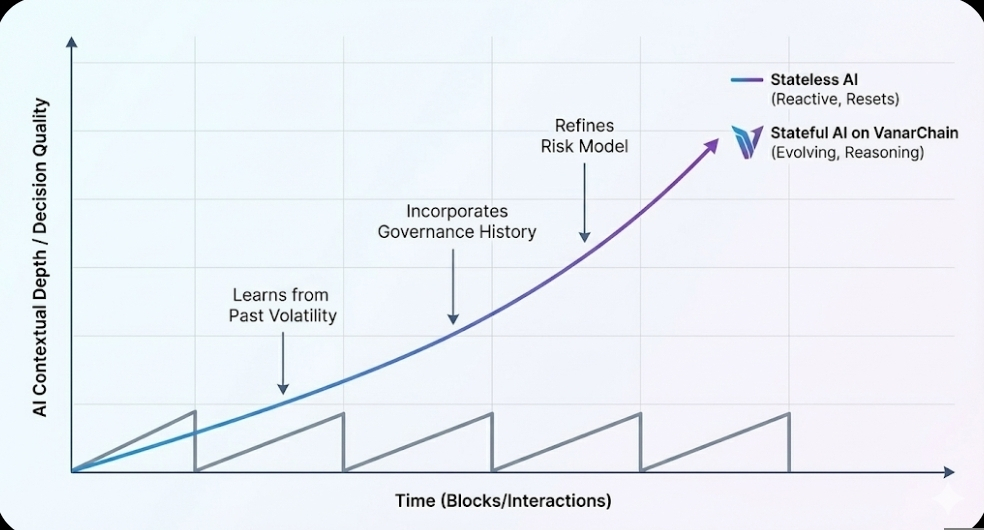

If you think about AI agents handling treasury operations, gaming economies, or machine-to-machine payments, that persistent memory starts to matter. A bot that manages liquidity should not reset its understanding every block. It should remember previous volatility events, previous governance votes, previous anomalies. Otherwise it’s just reacting, not reasoning.

There’s a deeper layer here that I don’t see many people talking about. Memory introduces time into the protocol in a new way. Blockchains already track time in terms of blocks. But AI memory tracks behavioral history. It adds texture. A transaction is no longer just a value transfer. It’s part of an evolving narrative an agent can reference later.

Now, that’s where complexity creeps in. And I’m not pretending it doesn’t. More state means more storage. More storage means heavier validators. If AI agents begin writing frequent updates to their contextual memory, block space pressure increases. With validator counts still in the low hundreds, scaling that responsibly becomes non-trivial. We’ve seen what happens when networks underestimate workload spikes.

Security becomes more delicate too. Stateless systems fail loudly. A bad transaction reverts. A stateful AI system can fail gradually. If someone poisons its memory inputs or manipulates contextual data over time, the distortion compounds. That risk is real. It demands auditing beyond typical smart contract checks.
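A toy model shows how gradual failure can slip past per-update checks. It assumes an agent that keeps a running volatility estimate and rejects any single observation that deviates too far from it. `VolatilityMemory`, the smoothing weight, the tolerance, and the attacker's nudge are all illustrative values, not anything from Vanar.

```python
from dataclasses import dataclass


@dataclass
class VolatilityMemory:
    """Hypothetical agent memory: an exponentially weighted volatility estimate."""
    value: float
    alpha: float = 0.5       # weight given to each new observation
    tolerance: float = 0.10  # max relative deviation accepted in a single update

    def update(self, observation: float) -> bool:
        # Per-update sanity check: reject anything far from what we remember.
        if abs(observation - self.value) > self.tolerance * self.value:
            return False
        self.value = (1 - self.alpha) * self.value + self.alpha * observation
        return True


def run_slow_poisoning(memory: VolatilityMemory, steps: int, nudge: float = 0.05) -> int:
    """Feed observations that always sit slightly above the current memory.

    Returns how many of the poisoned observations were accepted.
    """
    accepted = 0
    for _ in range(steps):
        if memory.update(memory.value * (1 + nudge)):
            accepted += 1
    return accepted


# A blunt attack is rejected outright and leaves memory untouched.
loud = VolatilityMemory(value=1.0)
loud_accepted = loud.update(3.0)

# A patient attack passes every check, yet the estimate drifts far from 1.0.
quiet = VolatilityMemory(value=1.0)
quiet_accepted = run_slow_poisoning(quiet, steps=50)
```

With these parameters every nudged observation is within tolerance, so each one is accepted, and each accepted update raises the estimate by a factor of 1.025. Over 50 updates that compounds to more than three times the starting value. Checking each write in isolation is not enough, which is why auditing has to look at the trajectory of memory and not only at individual updates.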

But here’s why I don’t dismiss the approach.

The market is shifting toward agents. Autonomous trading bots already arbitrage across exchanges. DeFi protocols are experimenting with AI-based risk scoring. Gaming environments generate NPC behavior dynamically. If that trajectory continues, a chain that simply executes code quickly might not be enough. The chain needs to host evolving digital actors.

And evolving actors need memory.

When I first looked at Vanar’s emphasis on components like reasoning engines and semantic layers, I thought it might be overambitious. After all, centralized AI providers already manage context. Why duplicate that on-chain? Then it clicked. It’s not duplication. It’s anchoring. A centralized AI system can rewrite its logs without anyone noticing. A blockchain-based memory layer creates a public, tamper-evident record of decisions.

That difference becomes critical when financial value is attached to AI actions.

Imagine an on-chain credit system. A stateless AI evaluates a borrower based on current wallet balance and maybe some snapshot metrics. A stateful AI remembers repayment patterns, prior disputes, governance participation. That history changes risk models. It also allows anyone to inspect why a decision was made. The “why” becomes part of the ledger.
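The contrast can be sketched in a few lines. This is an illustration only: the scoring weights, the `BorrowerHistory` fields, and both function names are made up for the example and do not describe any real credit model. The point is that the stateful version returns its reasons alongside the score, so the "why" can be stored with the decision.

```python
from dataclasses import dataclass


@dataclass
class BorrowerHistory:
    """Hypothetical remembered context for one borrower."""
    repayments_on_time: int = 0
    repayments_late: int = 0
    disputes: int = 0
    governance_votes: int = 0


def stateless_score(balance: int) -> dict:
    """Score from a snapshot only: one point per 100 units of balance, capped at 100."""
    score = min(100, balance // 100)
    return {"score": score, "reasons": [f"balance {balance} -> {score}"]}


def stateful_score(balance: int, history: BorrowerHistory) -> dict:
    """Start from the snapshot score, then adjust using remembered behavior."""
    result = stateless_score(balance)
    score, reasons = result["score"], list(result["reasons"])

    total = history.repayments_on_time + history.repayments_late
    if total:
        # Maps an on-time rate of 0%..100% to an adjustment of -20..+20.
        adj = (history.repayments_on_time * 40) // total - 20
        score += adj
        reasons.append(f"repayments {history.repayments_on_time}/{total} on time -> {adj:+d}")

    if history.disputes:
        adj = -5 * history.disputes
        score += adj
        reasons.append(f"{history.disputes} prior dispute(s) -> {adj:+d}")

    if history.governance_votes:
        adj = min(5, history.governance_votes)
        score += adj
        reasons.append(f"{history.governance_votes} governance vote(s) -> {adj:+d}")

    return {"score": max(0, min(100, score)), "reasons": reasons}


history = BorrowerHistory(repayments_on_time=9, repayments_late=1, disputes=1, governance_votes=4)
snapshot = stateless_score(5000)
remembered = stateful_score(5000, history)
```

For the same wallet balance, `snapshot["score"]` is 50 while `remembered["score"]` is 65, and `remembered["reasons"]` lists each adjustment that produced the difference. Storing that list next to the decision is what would let a third party inspect the reasoning later.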

Transaction throughput on Vanar remains competitive in the broader Layer 1 landscape, but what stands out to me is the shift in narrative. Technical updates increasingly highlight AI workflows rather than raw speed metrics. That’s not accidental. It suggests the team believes context will become as valuable as bandwidth.

Of course, adoption remains uncertain. Developers often prefer simplicity. Memory layers introduce design complexity and new mental models. Early signs suggest experimentation, not mass migration. Forty-plus deployments is a meaningful number, but it’s still an early-stage one. If this model is going to stick, it has to prove that the added weight of stateful AI produces real economic advantages, not just architectural elegance.

Still, zooming out, I see a pattern forming across the space. The first era of blockchains was about moving value. The second was about programmable logic. What we’re stepping into now feels like programmable cognition. Not artificial general intelligence fantasies. Practical, accountable agents operating within economic systems.

If memory becomes a Layer 1 primitive, we will start evaluating networks differently. Not just “how fast is it” or “how cheap is it.” We’ll ask how durable its context is. How inspectable its reasoning trails are. How resistant its memory structures are to manipulation.

That shift is subtle. It won’t trend on social feeds the way token launches do. But it changes the foundation.

What keeps me thinking about this is simple. Machines are slowly becoming participants in markets, not just tools. If they’re going to act with autonomy, they need a place to remember. And the chains that understand that early might not win the loudest headlines, but they could end up shaping how digital systems actually think over time.