I used to think every blockchain pitch sounded the same. Faster blocks. Lower fees. More validators. It all blurred together after a while. You scroll, you nod, you move on. Then I started digging into what Vanar Chain was actually building, and I realized the interesting part wasn’t speed at all. It was this quiet attempt to make the chain think a little.

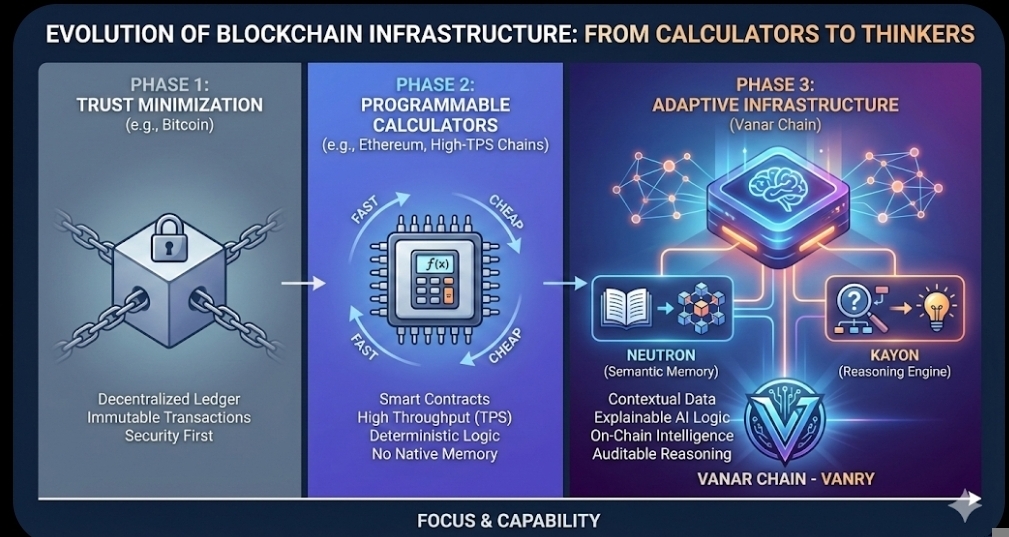

Not in a sci-fi way. Not in the “AI will run everything” kind of noise that floats around X every week. I mean something more grounded. Most blockchains today are programmable calculators. You give them inputs, they execute predefined logic, and that’s it. Clean. Deterministic. Predictable. But real-world systems aren’t that tidy.

What bothered me for a long time about smart contracts is that they don’t remember. A DeFi protocol doesn’t care what happened yesterday unless you manually code that memory into it. There’s no context unless a developer explicitly forces it in. And even then, it feels bolted on.

Vanar’s design leans into that gap. The base layer still does what a base layer should do. It secures transactions, maintains consensus, handles validators. Nothing mystical there. But alongside it, they’ve built components like Neutron, which structures on-chain data in a way that AI systems can query more naturally, and Kayon, which focuses on reasoning and explainability.

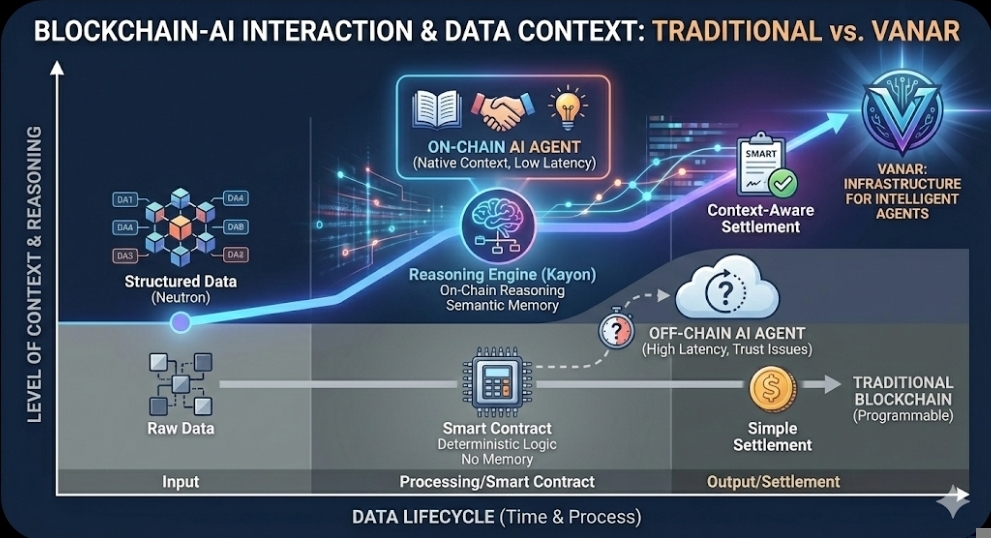

At first I rolled my eyes. “On-chain AI reasoning” sounds like marketing. But when you slow down and unpack it, the idea is less flashy and more structural. If AI agents are going to interact directly with blockchains, they need memory and context. Not just raw storage, but structured storage. There’s a difference.
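To make the raw-versus-structured difference concrete, here’s a toy sketch in Python. To be clear, this is not Neutron’s data model or API; the `Event` schema, the field names, and the `total_by_action` helper are all my own assumptions, made up purely for illustration.

```python
from dataclasses import dataclass

# Raw storage: opaque bytes. An agent has to know the encoding in advance
# and decode every record before it can ask a single question.
raw_store = {
    "0xaa": b"swap|alice|ETH|1500",
    "0xbb": b"lend|bob|USDC|20000",
}

# Structured storage: similar events with named, typed fields.
# (Hypothetical schema, not taken from any Vanar documentation.)
@dataclass(frozen=True)
class Event:
    action: str
    actor: str
    asset: str
    amount: int

structured_store = [
    Event("swap", "alice", "ETH", 1500),
    Event("lend", "bob", "USDC", 20000),
    Event("swap", "bob", "ETH", 700),
]

def total_by_action(events, action):
    """Sum the amounts for one kind of action across all events."""
    return sum(e.amount for e in events if e.action == action)

print(total_by_action(structured_store, "swap"))  # 2200
```

The raw store holds the same kind of information, but nothing about it is queryable until someone writes a decoder. The structured version lets an agent ask a question directly, which is the gap I mean.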

Think about it this way. In 2025, DeFi platforms were collectively processing tens of billions of dollars in daily volume. That’s huge. But most of those transactions follow repetitive logic. Swap. Lend. Stake. Liquidate. The system doesn’t evaluate nuance. It just follows rules.

Now imagine an AI treasury agent managing liquidity across multiple protocols. If it has to fetch context off-chain, process it somewhere else, then settle back on-chain, you introduce latency and trust assumptions. If parts of that reasoning can live natively within the infrastructure, you reduce that gap. Fewer hops. Fewer blind spots.

That’s the direction Vanar seems to be moving toward. And it explains why they’re emphasizing semantic memory instead of just throughput numbers. We’ve already seen chains brag about 50,000 TPS or sub-second finality. Those metrics matter, sure. But if the chain can’t handle context, it’s still just a fast calculator.

There’s another layer here that I find more interesting. Explainability. Kayon isn’t framed as a black-box AI bolt-on. The reasoning trails can be recorded and audited. In a space where opaque algorithms have caused real damage, that’s not trivial. Remember the oracle exploits in 2022 that drained millions from DeFi protocols because bad data flowed through unchecked systems. When you embed reasoning deeper into infrastructure, you can’t afford opacity.
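What could an auditable reasoning trail look like in practice? One common pattern is a hash-chained log, where each recorded step commits to the one before it, so editing any step after the fact breaks the chain. The sketch below is my own illustration of that general technique. I’m not claiming it’s how Kayon works; the function names and the step contents are invented.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash for the first entry's predecessor

def _digest(prev_hash, step):
    """Hash a step together with the hash of the previous entry."""
    payload = json.dumps({"prev": prev_hash, "step": step}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()

def append_step(trail, step):
    """Append a reasoning step, linked to the previous entry by hash."""
    prev_hash = trail[-1]["hash"] if trail else GENESIS
    entry = {"prev": prev_hash, "step": step, "hash": _digest(prev_hash, step)}
    trail.append(entry)
    return entry

def verify(trail):
    """Recompute every hash; return False if any entry was altered."""
    prev_hash = GENESIS
    for entry in trail:
        if entry["prev"] != prev_hash:
            return False
        if _digest(prev_hash, entry["step"]) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True

# Hypothetical reasoning steps an agent might record.
trail = []
append_step(trail, {"input": "ETH/USD price feed", "value": 3000})
append_step(trail, {"rule": "collateral ratio below 1.5", "result": True})
append_step(trail, {"decision": "liquidate position 42"})
print(verify(trail))  # True

# Quietly rewriting an earlier step is detectable.
trail[1]["step"]["result"] = False
print(verify(trail))  # False
```

A log like this doesn’t make the reasoning correct. It only makes it tamper-evident, which is the minimum you’d want before trusting an agent’s explanation of why it moved money.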

But I’m not blind to the risk. Adding intelligence to a base layer increases complexity. Complexity increases attack surface. If the reasoning layer fails, or is manipulated, the consequences could cascade. That’s the tradeoff. More capability. More fragility.

Still, the timing makes sense. AI tokens and AI-adjacent narratives have ballooned into multi-billion dollar sectors over the past year. Everyone wants exposure to “AI + crypto.” Most projects, though, are layering AI on top of existing chains. They’re not redesigning the foundation around it.

Vanar’s approach feels different because it assumes AI agents will become first-class participants on-chain. Not users. Not tools. Participants. That assumption changes design priorities. Memory becomes a primitive. Context becomes part of consensus logic. Settlement isn’t just about value transfer anymore.

And then there’s the expansion to ecosystems like Base. That move isn’t about hype liquidity. It’s about usage. AI systems don’t live in isolated silos. If your infrastructure can’t operate across networks, it limits its relevance. Cross-chain availability increases surface area. More developers. More experiments. More stress.

Which brings me to the part nobody likes to talk about. Adoption. Architecture diagrams look impressive. Whitepapers sound coherent. But networks are tested by usage spikes, congestion, weird edge cases that nobody anticipated. If by late 2026 we see sustained growth in active AI-driven applications actually running on Vanar’s infrastructure, that will say more than any technical blog post.

Because the real shift here isn’t flashy. It’s philosophical. Blockchains started as trust minimization machines. Replace intermediaries with code. Now we’re entering a phase where code itself might evaluate conditions dynamically. That’s a different mental model.

When I step back, what I see is a subtle transition from programmable infrastructure to adaptive infrastructure. It’s not about replacing deterministic logic. It’s about layering context on top of it. Quietly. Underneath.

Will it work? I don’t know. If AI agents genuinely become economic actors managing capital, executing workflows, negotiating contracts, then chains built around memory and reasoning will have an edge. If that wave stalls, then this design may feel premature.

But one thing is clear to me. Speed used to be the headline metric. Now context is creeping into the conversation. And once developers start expecting a chain to remember and reason, not just execute, it’s hard to go back to vending-machine logic.

That’s the part that sticks with me. Not the branding. Not the TPS. The idea that infrastructure is slowly becoming aware of what it’s processing.