At first glance, Walrus feels like an odd name for a piece of serious infrastructure. Heavy. Slow. Almost prehistoric. But the metaphor fits better the longer you sit with it. A walrus survives by storing energy, by enduring long seasons beneath ice that shifts, cracks, and reforms without warning. The Walrus protocol is built with the same temperament. It is not chasing speed for spectacle or growth for applause. It is designed to endure in a world where data is fragile, political, and increasingly weaponized.

The modern internet pretends that data is permanent, but anyone who has lost access to an account, seen a platform collapse, or watched content quietly disappear knows the truth. Most data today lives at the mercy of centralized systems: cloud providers, compliance teams, executives, jurisdictions. These systems are efficient, but they are also brittle. They fail suddenly, they forget selectively, and they ask users to trade control for convenience. Walrus begins from a different assumption: that memory itself should not belong to any single authority.

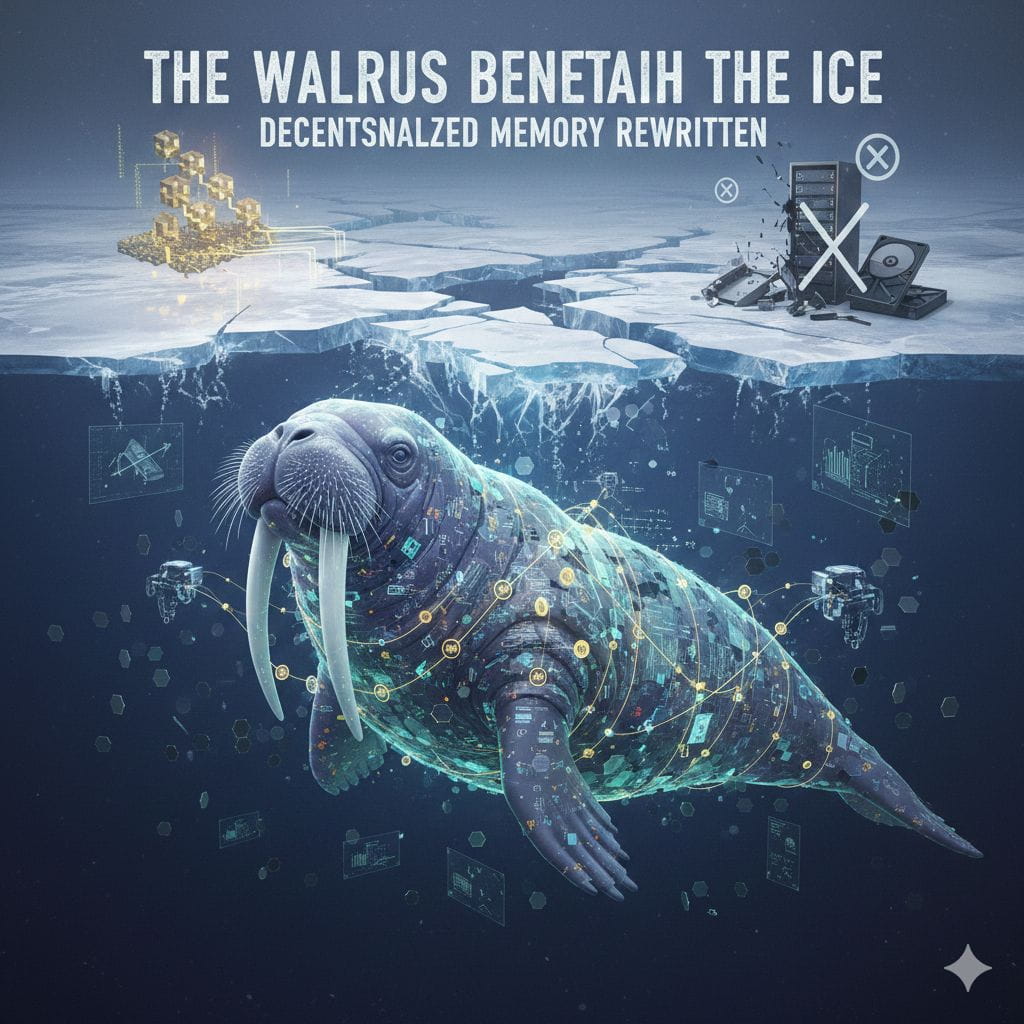

Rather than storing files whole, Walrus breaks them apart. Large datasets are sliced, encoded, and scattered across a decentralized network in fragments that are individually insufficient to rebuild the original. No single node holds a full file. No operator can censor or extract content alone. What makes this possible is erasure coding, a technique that transforms data into mathematically redundant shards. Any sufficiently large subset of shards can reconstruct the original, so a substantial fraction can disappear without consequence. Loss becomes tolerable. Failure becomes survivable. Storage stops behaving like a fragile object and starts behaving like a living system.
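To make the "any k of n shards" idea concrete, here is a minimal sketch of a toy Reed-Solomon-style code over the prime field of 257 elements. Each group of k bytes is treated as the values of a polynomial at x = 1..k, and each of n shards stores that polynomial's value at its own x. Any k surviving shards pin the polynomial down again through Lagrange interpolation. The names `encode`, `decode`, `k`, and `n` are illustrative assumptions, and this is not Walrus's actual encoding, which differs and is far more efficient.

```python
P = 257  # prime modulus; every byte value 0..255 is a field element


def _interpolate_at(points: list[tuple[int, int]], x: int) -> int:
    """Evaluate at x the unique polynomial of degree < len(points) through points, mod P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if j != i:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        # Division mod P via Fermat's little theorem: den^(P-2) is den's inverse.
        total = (total + yi * num * pow(den, P - 2, P)) % P
    return total


def encode(data: bytes, k: int, n: int) -> dict[int, list[int]]:
    """Toy erasure code: return n shards keyed by x = 1..n; any k rebuild the data."""
    if not 0 < k <= n < P:
        raise ValueError("need 0 < k <= n < 257")
    padded = data + bytes(-len(data) % k)  # zero-pad to a multiple of k
    shards: dict[int, list[int]] = {x: [] for x in range(1, n + 1)}
    for start in range(0, len(padded), k):
        # The k data bytes are the polynomial's values at x = 1..k.
        points = [(x, padded[start + x - 1]) for x in range(1, k + 1)]
        for x in shards:
            shards[x].append(_interpolate_at(points, x))
    return shards


def decode(shards: dict[int, list[int]], k: int, length: int) -> bytes:
    """Rebuild the original bytes from any k surviving shards."""
    if len(shards) < k:
        raise ValueError("not enough shards to reconstruct")
    xs = sorted(shards)[:k]
    out = bytearray()
    for row in range(len(shards[xs[0]])):
        points = [(x, shards[x][row]) for x in xs]
        # Re-evaluate the recovered polynomial at x = 1..k to get the data bytes back.
        out.extend(_interpolate_at(points, x) for x in range(1, k + 1))
    return bytes(out[:length])  # drop the zero padding
```

In use, seven shards are produced and three are deleted, yet the four that remain are enough:

```python
blob = b"memory that outlives any single node"
shards = encode(blob, k=4, n=7)
for lost in (1, 3, 6):  # three of seven shards vanish
    del shards[lost]
assert decode(shards, k=4, length=len(blob)) == blob
```

Because this toy code is systematic (shards 1 through k carry the raw bytes unchanged), it also shows that erasure coding on its own provides redundancy, not secrecy.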

This design choice is not just technical. It reflects a deeper philosophy about trust. Walrus does not ask users to trust operators, companies, or institutions. It asks them to trust mathematics, incentives, and verification. Proof replaces permission. Availability becomes something that can be checked rather than promised. In a world saturated with unverifiable claims, that shift is subtle but profound.

The protocol lives alongside the Sui blockchain, not on top of it in the traditional sense. Heavy data does not clog the chain. Instead, the blockchain acts as a coordination layer, a place where commitments, proofs, and economic agreements are recorded with precision. Smart contracts can reference stored data without ever touching the raw bytes. This separation allows Walrus to scale without sacrificing verifiability. It also allows storage to become programmable, something applications can reason about rather than merely consume.
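The split between a coordination layer and the bytes themselves can be sketched in a few lines. In this minimal model, the chain records only a small commitment (a hash, a size, and a paid-through epoch), and anyone who fetches the bytes from a storage node checks them against that record. `BlobRecord`, `register`, `verify`, and `is_available` are hypothetical names, and a plain SHA-256 digest stands in for whatever commitment Walrus actually derives for a blob.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class BlobRecord:
    """Hypothetical on-chain entry: a commitment to the data, not the data itself."""
    blob_id: str    # SHA-256 hex digest, standing in for the real commitment
    size: int       # byte length, so contracts can reason about storage cost
    end_epoch: int  # last epoch for which storage has been paid


def register(data: bytes, paid_until: int) -> BlobRecord:
    """Produce the small record a chain would store in place of the raw bytes."""
    return BlobRecord(hashlib.sha256(data).hexdigest(), len(data), paid_until)


def verify(record: BlobRecord, retrieved: bytes) -> bool:
    """Accept bytes from any storage node only if they match the commitment."""
    return (len(retrieved) == record.size
            and hashlib.sha256(retrieved).hexdigest() == record.blob_id)


def is_available(record: BlobRecord, current_epoch: int) -> bool:
    """A contract can check the paid storage term without touching any bytes."""
    return current_epoch <= record.end_epoch
```

The design point is that `is_available` and the size check operate on a record of fixed, small size, while `verify` lets a reader trust bytes from an untrusted node because they must hash to the committed value.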

WAL, the native token, flows quietly through this system. It is not there to inspire speculation or symbolism. It exists to bind incentives. Users pay in advance to store data for fixed periods. Storage nodes stake value to participate and are rewarded over time for reliability. Failures are punished. Honesty compounds. The economy is designed to feel boring in the best way possible: predictable, enforceable, resistant to drama. That restraint is intentional. Infrastructure that handles memory cannot afford to be emotional.
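The incentive loop described here (pay up front, stake to participate, earn for reliability, lose stake for failure) can be illustrated with a toy accounting model. Every name and number below (`Node`, `prepay`, `settle_epoch`, a per-epoch reward, a slash rate in basis points) is a hypothetical assumption chosen for clarity; it does not reproduce WAL's actual pricing, reward, or penalty rules.

```python
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical storage node with collateral locked as stake."""
    stake: int       # units of stake at risk (illustrative, not real WAL amounts)
    earned: int = 0  # rewards accumulated across epochs


def prepay(price_per_epoch: int, epochs: int) -> int:
    """The user pays for the whole storage term in advance."""
    return price_per_epoch * epochs


def settle_epoch(node: Node, reward: int, passed_check: bool, slash_bps: int) -> None:
    """Pay a node that proved availability; otherwise slash slash_bps/10_000 of its stake."""
    if passed_check:
        node.earned += reward
    else:
        node.stake -= node.stake * slash_bps // 10_000
```

For example, a user storing data for four epochs at 5 units per epoch pays 20 up front. A node with a stake of 1,000 that passes three checks and fails one, with a hypothetical 5% (500 basis point) penalty, ends with 15 earned and a stake of 950. Honest behavior accumulates rewards, while a single lapse costs more than an epoch's reward, which is the kind of predictable asymmetry the paragraph above describes.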

Yet beneath the calm surface, tensions accumulate. Decentralized storage introduces uncomfortable questions that centralized systems have conveniently avoided. Who is responsible for illegal or harmful data when no one holds the whole file? What happens when economic power concentrates among large storage operators with better hardware and cheaper bandwidth? How does governance remain legitimate when token ownership drifts toward the wealthy and the patient? Walrus does not resolve these tensions. It exposes them. And exposure, in systems design, is often the first step toward resilience.

The real significance of Walrus may emerge not from individual users uploading files, but from machines interacting with machines. As autonomous agents and AI systems grow more capable, they will require vast stores of data that are persistent, verifiable, and resistant to manipulation. Training data, model artifacts, simulation records, and decision logs all become liabilities if they can be altered or erased. Walrus offers a substrate where memory can be externalized without surrendering control, where agents can rely on data that outlives any single platform or organization.

There is something quietly radical in that vision. Not the loud radicalism of slogans or revolutions, but the structural kind. The kind that changes defaults. If successful, Walrus nudges the internet away from a model where forgetting is effortless and remembering is fragile, toward one where memory has weight, cost, and durability. It asks builders to think carefully before writing data, because storage is no longer abstract. It has time baked into it. Commitment baked into it.

Still, nothing about this future is guaranteed. Protocols do not win by elegance alone. They are stress-tested by market crashes, legal pressure, hostile actors, and human impatience. Walrus will have to prove that its economic design can survive volatility, that its governance can adapt without capture, and that its technical promises hold under real-world strain. Endurance is not declared. It is demonstrated, slowly.

And perhaps that is the most human aspect of the project. Walrus does not promise immortality. It promises survival through fragmentation, through cooperation, through redundancy. It accepts that parts will fail, that nodes will vanish, that conditions will worsen before they improve. What matters is that the memory, taken as a whole, persists.

Beneath the ice of a noisy, disposable internet, Walrus is building something heavy and patient. A different relationship with data. One that assumes storms, expects pressure, and prepares for long winters. Whether the world will choose to rely on such a system remains uncertain. But the attempt itself reveals something important: we are no longer just building faster networks. We are deciding what deserves to last.