Blockchains were never built to carry weight. They were built to carry truth. At their core, blockchains rely on a mechanism where every participating validator must fully replicate the same state and the same data. This design guarantees integrity and agreement, but it comes at a steep cost. Every additional byte stored multiplies itself across hundreds, sometimes thousands, of machines. This model works when workloads are computation-focused and the data footprint is small. It collapses the moment real-world data enters the picture.
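
To make the multiplication concrete, here is a back-of-the-envelope sketch. The function name and the validator counts are illustrative assumptions, not measurements from any particular chain.

```python
GIB = 1024 ** 3


def replicated_footprint(payload_bytes: int, validators: int) -> int:
    """Total bytes stored network-wide when every validator keeps a full copy."""
    if payload_bytes < 0 or validators < 1:
        raise ValueError("payload must be non-negative and validators >= 1")
    return payload_bytes * validators


if __name__ == "__main__":
    # Hypothetical validator counts, chosen only to show the scaling.
    for n in (100, 1000):
        total = replicated_footprint(1 * GIB, n)
        print(f"{n} validators: {total / GIB:.0f} GiB stored for a 1 GiB payload")
```

The cost grows linearly with the validator set, so the network pays for every new participant once per stored byte.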

Modern decentralized applications are no longer lightweight experiments. They deal with images, videos, datasets, executable code, rollup transaction data, and entire application frontends. These are not small state updates. These are heavy binary objects. Forcing full replication of such data across all validators creates an economic and technical bottleneck that no amount of optimization can truly solve.

This limitation has quietly shaped the entire decentralized ecosystem. Instead of storing data where it belongs, applications offload it. They store metadata on-chain and push the real data to centralized servers or fragile hosting layers. The result is an illusion of decentralization. The blockchain says one thing, the data source says another, and the user is left trusting whichever system fails last.

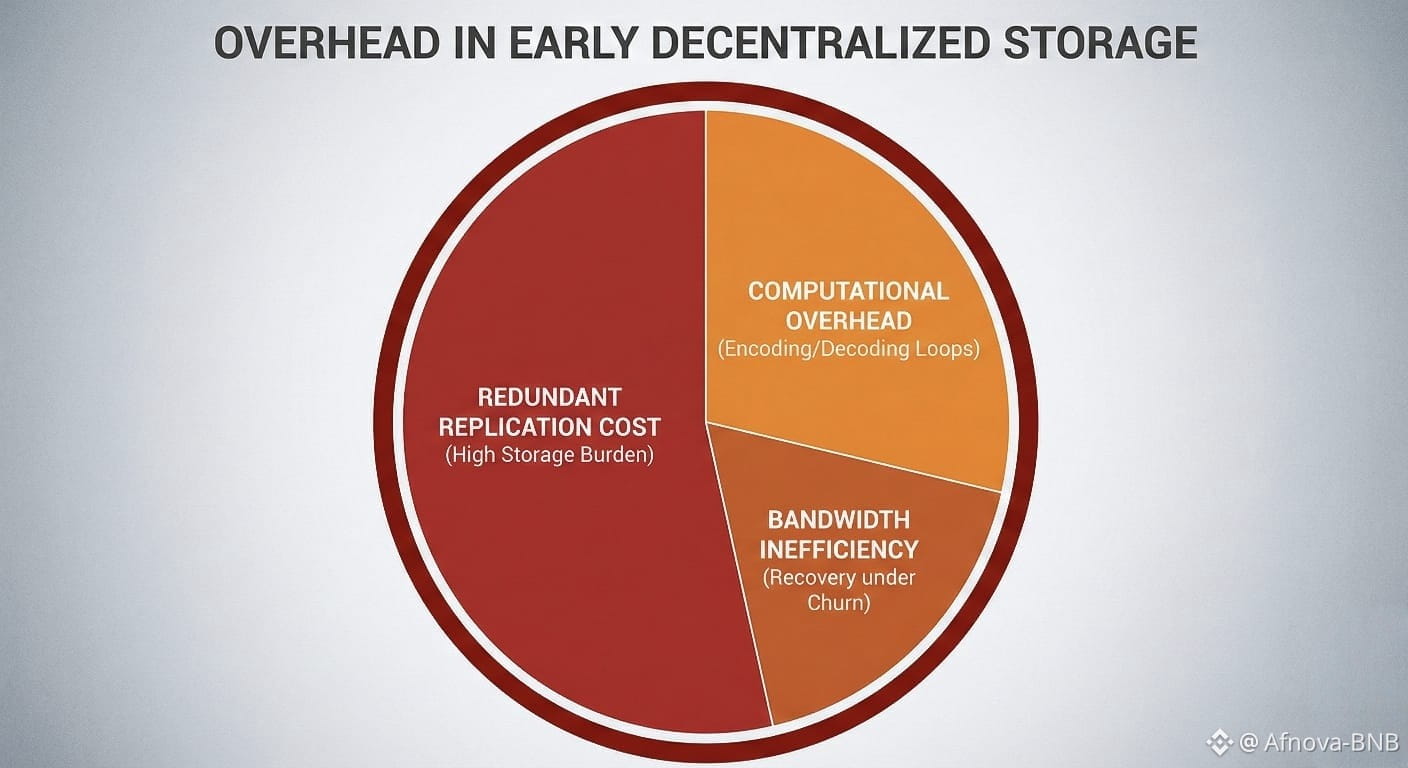

Decentralized storage networks emerged as a response to this imbalance. They promised censorship resistance, availability, and durability without relying on centralized providers. Early designs took the most obvious route: replicate data across many nodes and hope redundancy would cover failures. While effective on paper, this approach proved expensive, inefficient, and vulnerable to manipulation at scale.

A second wave attempted to reduce redundancy through erasure coding, slicing data into fragments so that only a subset is required for recovery. This reduced storage costs but introduced new problems. Encoding and decoding became computationally heavy. Recovery under churn became bandwidth-intensive: replacing a single missing fragment could force a node to download as much data as the entire file. Over time, that repair traffic ate away the savings.
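
The trade-off can be sketched with simple arithmetic. The model below assumes a generic (k, n) erasure code in which any k of n equally sized fragments rebuild the blob, and a naive repair that fetches k fragments to regenerate one. The class name and the parameters are hypothetical and are not taken from any specific network.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ErasureCode:
    k: int  # fragments needed to reconstruct the blob
    n: int  # total fragments stored across the network

    def __post_init__(self) -> None:
        if not 1 <= self.k <= self.n:
            raise ValueError("require 1 <= k <= n")

    def fragment_size(self, blob_bytes: int) -> int:
        # Integer ceiling division: each fragment holds 1/k of the blob.
        return -(-blob_bytes // self.k)

    def total_stored(self, blob_bytes: int) -> int:
        # Network-wide footprint: n fragments of size blob/k.
        return self.n * self.fragment_size(blob_bytes)

    def naive_repair_download(self, blob_bytes: int) -> int:
        # Rebuilding ONE lost fragment by fetching k others and re-encoding.
        return self.k * self.fragment_size(blob_bytes)


code = ErasureCode(k=10, n=30)  # assumed parameters, for illustration only
blob = 1_000_000
stored = code.total_stored(blob)
repair = code.naive_repair_download(blob)
```

With these assumed parameters, a 1,000,000-byte blob is stored as 3,000,000 bytes in total, versus 30,000,000 bytes for a full copy on each of the 30 nodes. Repairing one 100,000-byte fragment, however, pulls 1,000,000 bytes over the network, which is the whole blob. Under frequent churn that repair cost recurs for every departed node.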

This is the exact fault line where Walrus enters the picture.

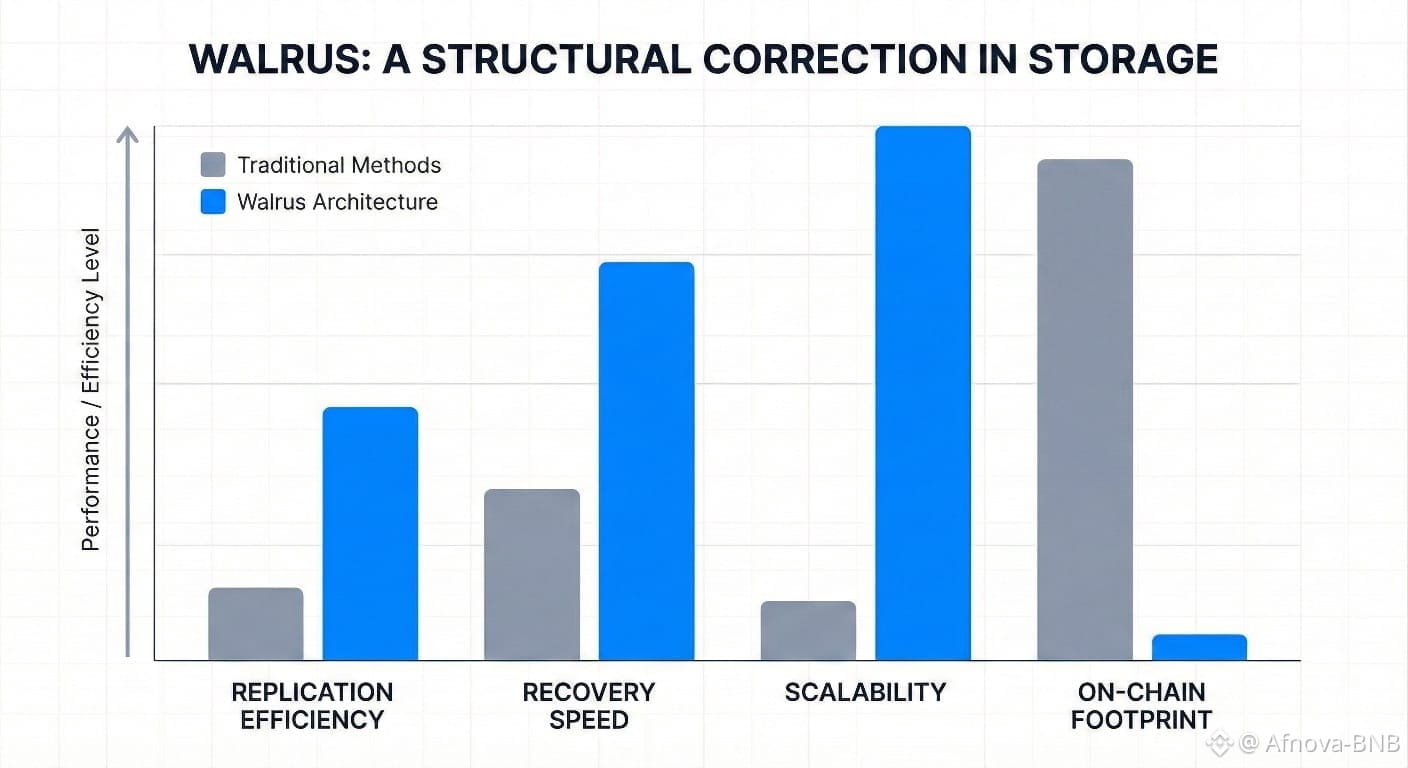

Walrus is not an optimization of existing storage ideas. It is a rethinking of how data should live in decentralized systems. It starts from a simple observation: data storage does not need full replication, but it does need full guarantees. Integrity, availability, consistency, and auditability must be preserved, but replication must be controlled, recovery must be efficient, and scale must be native rather than forced.

Instead of asking blockchains to do what they were never designed to do, Walrus separates responsibilities cleanly. Computation and coordination stay on-chain. Data lives off-chain in a system purpose-built to store it. The result is not a compromise. It is a structural correction.
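
One way to picture this split is a minimal sketch in which the chain holds only a small commitment and the storage layer holds the bytes. This is an illustrative toy, not Walrus's actual API or encoding: `OnChainRegistry`, `OffChainStore`, and `fetch_verified` are hypothetical names, and a plain SHA-256 digest stands in for whatever commitment scheme the real system uses.

```python
import hashlib


class OffChainStore:
    """Stand-in for the storage layer: holds the heavy bytes."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, blob: bytes) -> str:
        blob_id = hashlib.sha256(blob).hexdigest()
        self._blobs[blob_id] = blob
        return blob_id

    def get(self, blob_id: str) -> bytes:
        return self._blobs[blob_id]


class OnChainRegistry:
    """Stand-in for the chain: holds only small coordination records."""

    def __init__(self) -> None:
        self._records: dict[str, tuple[str, int]] = {}

    def register(self, name: str, blob_id: str, size: int) -> None:
        self._records[name] = (blob_id, size)

    def lookup(self, name: str) -> tuple[str, int]:
        return self._records[name]


def fetch_verified(registry: OnChainRegistry, store: OffChainStore, name: str) -> bytes:
    """Fetch a blob off-chain and check it against the on-chain commitment."""
    blob_id, size = registry.lookup(name)
    blob = store.get(blob_id)
    if len(blob) != size or hashlib.sha256(blob).hexdigest() != blob_id:
        raise ValueError("off-chain data does not match on-chain commitment")
    return blob


store = OffChainStore()
registry = OnChainRegistry()
payload = b"large binary object" * 1000
registry.register("frontend.bundle", store.put(payload), len(payload))
assert fetch_verified(registry, store, "frontend.bundle") == payload
```

The on-chain record stays a few dozen bytes regardless of payload size, and a reader can detect a storage layer that returns anything other than the committed data.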