I remember one afternoon last month when I sat staring at my screen, trying to push a bunch of AI training datasets onto a decentralized storage system. There was nothing dramatic about it; it was just a few gigabytes of image files for a side project to test how agents act. But the upload dragged on, and there were moments when the network couldn’t confirm availability right away. I kept refreshing, watching gas prices jump around, and wondering whether the data would still be there if I didn’t stay on top of renewals every few weeks. It was not a crisis, but one nagging doubt made me stop: will this still work when I need it next month, or will I have to chase down pieces across nodes? When you are working with real workflows instead of just talking about decentralization, these little problems add up.

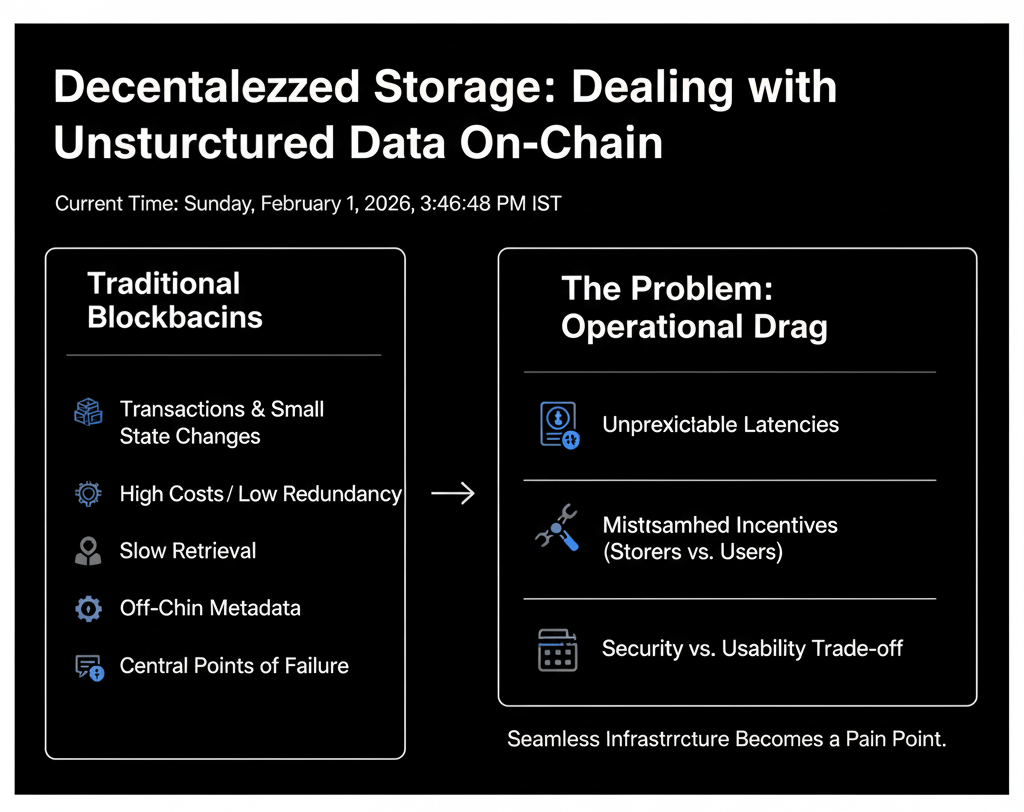

The bigger question is how we deal with big, unstructured data in blockchain settings. Most chains are made to handle transactions and small changes to the state, not big files like videos, datasets, or even tokenized assets that need to stay around for a long time. You end up with high costs for redundancy, slow retrieval because everything is copied everywhere, or worse, central points of failure that come back through off-chain metadata. Users find the interfaces clunky because storing something means paying ongoing fees without clear guarantees. Developers run into problems when they try to scale apps that rely on verifiable data access. It is not that storage options are lacking; it is the operational drag (unpredictable latencies, mismatched incentives between storers and users, and the constant trade-off between security and usability) that makes what should be seamless infrastructure a pain.

Picture a library where books are not only stored but also encoded across many branches. This way, even if one branch burns down, you can put together the pieces from other branches. That is the main idea without making it too complicated: spreading data around to make sure it can always be put back together, but without taking up space with full copies everywhere.
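To make the library picture concrete, here is a minimal sketch of the simplest possible erasure code: split a file into `k` shards and add one XOR parity shard, so any single lost shard can be rebuilt from the rest. This is only an illustration of the general idea and is not the scheme Walrus uses; the function names `encode` and `decode` are made up for this example.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> List[bytes]:
    """Split data into k equal-size shards and append one XOR parity shard."""
    shard_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(shard_len * k, b"\0")  # zero-pad the last shard
    shards = [padded[i * shard_len:(i + 1) * shard_len] for i in range(k)]
    parity = reduce(xor_bytes, shards)
    return shards + [parity]


def decode(shards: List[Optional[bytes]], original_len: int) -> bytes:
    """Rebuild the data when at most one shard (data or parity) is missing."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("a single parity shard can only repair one loss")
    repaired = list(shards)
    if missing:
        present = [s for s in shards if s is not None]
        # XOR of all surviving shards equals the missing one.
        repaired[missing[0]] = reduce(xor_bytes, present)
    # Drop the parity shard and the padding.
    return b"".join(repaired[:-1])[:original_len]


if __name__ == "__main__":
    blob = b"hello walrus blobs"
    pieces = encode(blob, k=4)
    pieces[2] = None  # one "branch" burns down
    print(decode(pieces, len(blob)) == blob)
```

With `k = 4`, the five shards take about 1.25 times the original size, compared with 5 times if each of five branches held a full copy. Production schemes tolerate many more simultaneous losses than this toy does.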

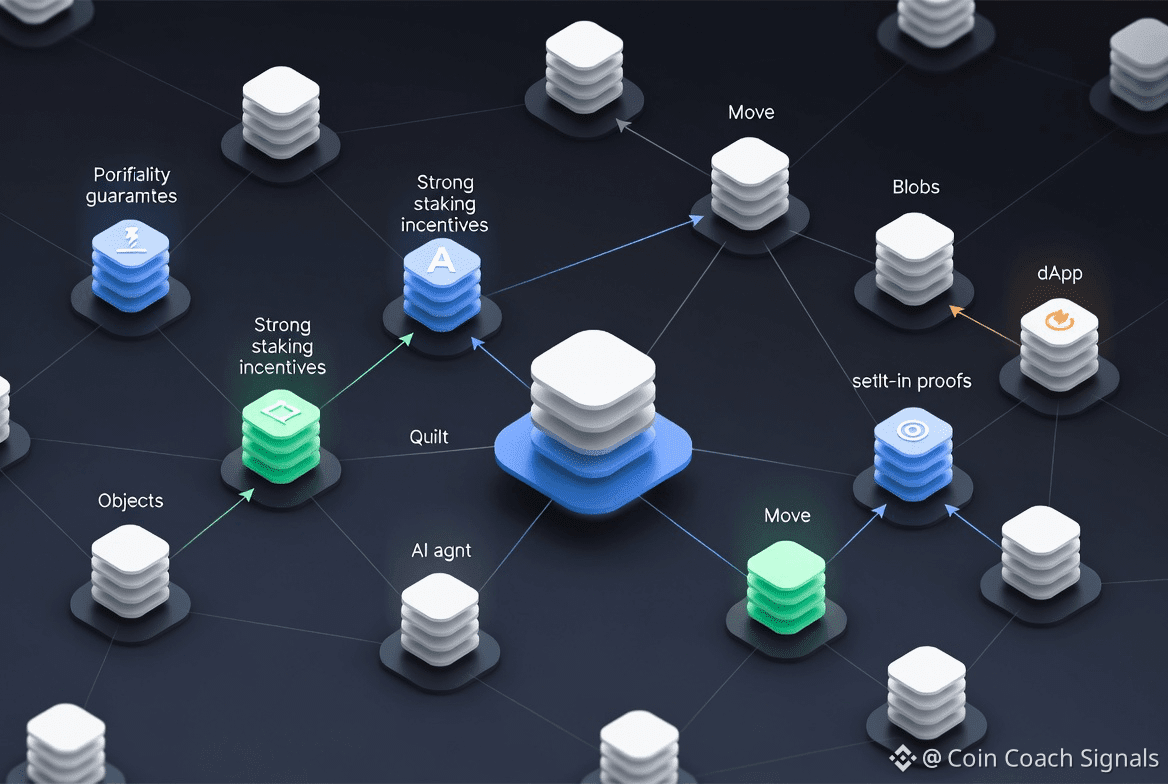

Now, when you look at Walrus Protocol, you can see that it is a layer that stores these big blobs, like images or AI models, and is directly linked to the Sui blockchain for coordination. It works by breaking data into pieces using erasure coding, specifically the Red Stuff method, which layers fountain codes for efficiency.

This means it can grow to hundreds of nodes without raising costs. Programmability is what it focuses on: blobs turn into objects on Sui, so you can use Move contracts to add logic like automatic renewals or access controls. It deliberately avoids full replication; instead, it uses probabilistic guarantees where nodes prove availability every so often. This keeps overhead low but requires strong staking incentives to stop operators from being lazy. This is important for real use because it changes storage from a passive vault to an active resource. For example, an AI agent could pull verifiable data without any middlemen, or a dApp could settle trades with built-in proofs. You won’t find flashy promises like “instant everything.” Instead, the design focuses on staying reliable over time, even under heavy use, such as when Quilt is used to batch many small files and cut gas costs by 100 times or more.
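A rough way to see why periodic spot checks can stand in for full replication: if a node has quietly dropped some fraction of what it promised to hold, each random challenge has that same chance of landing on a missing piece. The sketch below works through that arithmetic. It assumes independent, uniformly random challenges, which is a simplification for illustration and not a description of Walrus's actual proof protocol; the function names are hypothetical.

```python
import math


def detection_probability(missing_fraction: float, challenges: int) -> float:
    """Chance that at least one of `challenges` random checks hits missing data.

    Assumes each challenge independently samples a uniformly random piece.
    """
    if not 0.0 <= missing_fraction <= 1.0:
        raise ValueError("missing_fraction must be between 0 and 1")
    if challenges < 0:
        raise ValueError("challenges must be non-negative")
    return 1.0 - (1.0 - missing_fraction) ** challenges


def challenges_needed(missing_fraction: float, target: float) -> int:
    """Smallest number of challenges that reaches the target detection chance."""
    if not 0.0 < missing_fraction < 1.0 or not 0.0 < target < 1.0:
        raise ValueError("both arguments must be strictly between 0 and 1")
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - missing_fraction))


if __name__ == "__main__":
    # A node that dropped 10% of its data, checked until 99% likely to be caught.
    n = challenges_needed(0.10, 0.99)
    print(n, round(detection_probability(0.10, n), 4))
```

The point is that the number of checks depends on how much a node has dropped, not on how much it stores, which is why the overhead stays low. The catch is that a node can still gamble on not being caught, so the penalty for getting caught has to outweigh the savings from cutting corners.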

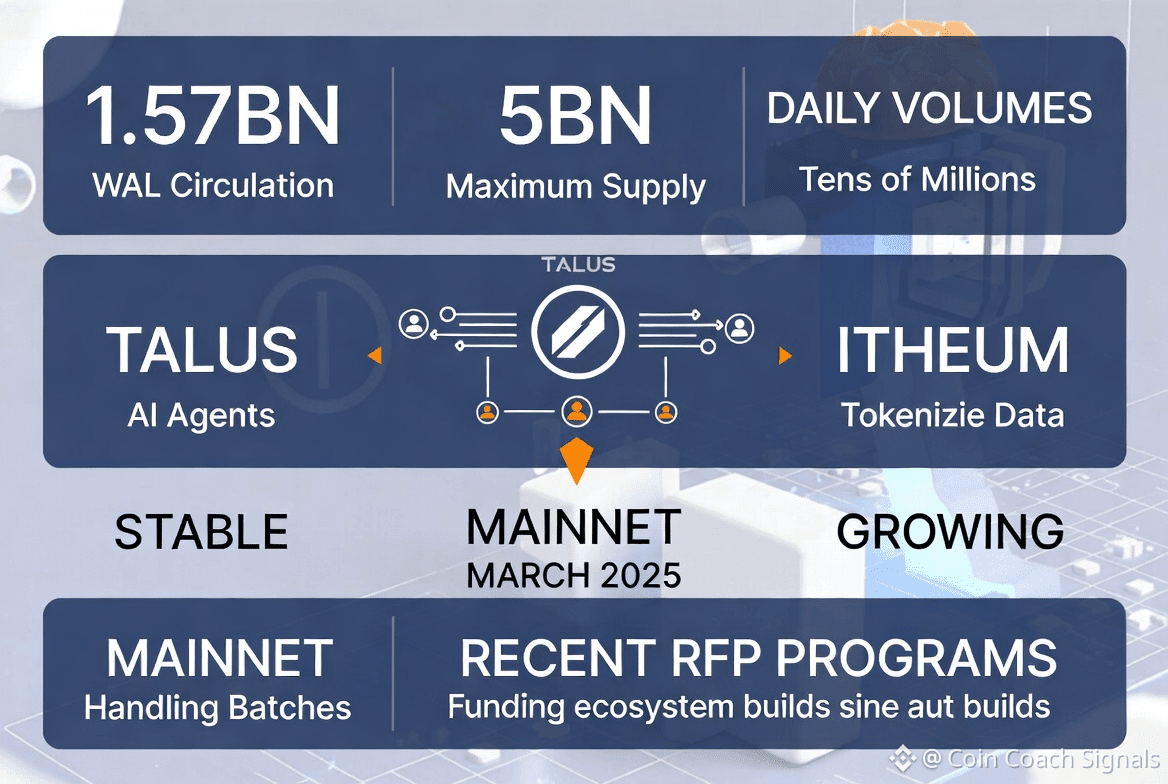

In this setup, the token WAL works in a simple way. Node operators stake through a delegated proof-of-stake model. Stakers help secure the network and earn rewards based on how the network performs each epoch. Those rewards come from inflation and storage payments and grow as more storage is used. WAL is also used for governance, allowing votes on things like pricing and node requirements, and it reinforces security through slashing when failures occur, such as data becoming unavailable. Settlement for metadata happens on Sui, and WAL connects to the economy through burns: 0.5% of each payment is burned, and short staking periods add to that. This is just a mechanism to get operators to hold on to their coins for a long time, not a promise that it will make anyone rich.
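The burn is easy to put numbers on. The sketch below splits a payment into the burned part and the rest, using the 0.5% figure quoted above. Working in integer base units and rounding the burn down are assumptions made for this example, not documented protocol behavior, and `split_payment` is a hypothetical helper.

```python
from typing import Tuple

# 0.5% expressed in basis points (figure taken from the paragraph above).
BURN_BPS = 50


def split_payment(amount: int, burn_bps: int = BURN_BPS) -> Tuple[int, int]:
    """Return (burned, remaining) for a payment in the token's smallest unit.

    Integer math avoids float rounding; the burn is rounded down (assumption).
    """
    if amount < 0:
        raise ValueError("amount must be non-negative")
    burned = amount * burn_bps // 10_000
    return burned, amount - burned


if __name__ == "__main__":
    burned, remaining = split_payment(1_000_000)
    print(burned, remaining)
```

On a payment of 1,000,000 base units, 5,000 are burned and 995,000 move on to the rest of the system. The amounts per payment are small, so any effect on supply depends on sustained storage usage.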

For context, the network has seen about 1.57 billion WAL in circulation out of a maximum supply of 5 billion. Recently, daily volumes have been in the tens of millions. This makes it possible to use integrations like Talus for AI agents or Itheum for tokenizing data. Usage metrics show steady growth, with the mainnet handling batches well since it launched in March 2025. Recent RFP programs have also helped fund ecosystem builds.

People chase short-term trades based on narratives, pumping on a listing and dumping on volatility, but this kind of infrastructure works differently. Walrus is not about taking advantage of hype cycles; it is about getting developers to use it for blob storage by default because the execution layer just works. This builds reliability over quarters, not days. You can see the difference in how prices swing around CEX integrations like Bybit or Upbit, while partnerships like Pyth for pricing or Claynosaurz for cross-chain assets grow slowly but surely.

There are still risks, though. One way things could go wrong is if load on Sui spikes and Walrus nodes have to wait for proofs. This could make a blob temporarily unavailable, disrupting AI workflows that depend on real-time data access. Competition also matters. Well-known players like Filecoin and Arweave could become a serious threat, especially if adoption slows outside of Sui. While Walrus aims to be chain-agnostic over time, it remains closely tied to Sui for now. If it takes too long to integrate with other chains, it might end up isolated. And to be honest, there is still a lot of uncertainty about long-term node participation. Will enough operators stake consistently as rewards go down, or will centralization start to creep in?

In the end, time will tell through repeated interactions: that second or third transaction where you store without thinking twice, or pull data months later without friction. That is when infrastructure fades into the background and does its job.
