I have been playing around with these blockchain setups for a while now, and the other day it hit me again how much of this stuff does not hold up when you try to use it every day. It was late at night, and instead of sleeping I was trying to push a simple transaction through on one of those "scalable" chains. It was nothing special; I was just querying some on-chain data for a small AI model I was building as a side project. But the thing dragged on for what felt like forever. Fees went up and down in ways that made no sense, and the response came back garbled because the data storage was basically a hack job that relied on off-chain links that did not always resolve. I had to refresh the explorer three times because I was not sure it would even confirm. In the end, I lost a few more dollars than I had planned and had nothing to show for it but frustration. Little things like that make you wonder whether any of this infrastructure is really made for people who are not just speculating but are actually building or using things on a regular basis.

The main problem is not a big conspiracy or a tech failure; it is something much simpler. Blockchain infrastructure tends to break down when it tries to do more than basic transfers, like storing real data, running computations that need context, or supporting AI workflows. You end up with setups where data is pushed off-chain because the base layer cannot handle the size or the cost. That means relying on oracles or external storage, both of which can fail.

In theory, transactions might be quick, but confirmation times that swing whenever the network gets busy, and costs that move with token prices, make for a constant operational headache. Users have to deal with unreliable access, where a simple query turns into a waiting game or, worse, a failed one because the data is not really on-chain and cannot be verified. It is not just about speed. It is also about the reliability gap (knowing that your interaction will not break because of some intermediate step) and the UX pain of double-checking everything, which makes it hard to build a habit of regular use. Costs add up too, not in big chunks but in small amounts that make you think twice before hitting "send" again.

You know how it is when you keep all your photos on an old external hard drive plugged into a USB port that does not always work? Most of the time it is fine, but when it is not, you are scrambling for backups or adapters, and the whole process feels clunky compared to cloud sync. That is the problem in a nutshell: infrastructure that works, but is not easy to live with over long periods of real-world use.

Now, coming back to Vanar Chain, which I have been looking into lately, it seems to take a different approach. Instead of promising a big revolution, it focuses on making the chain itself handle data and logic natively, from the start. The protocol works like a layered system, with the base chain (which is EVM-compatible, by the way) as the execution layer. On top of that it adds specialized components that handle AI and data without moving anything off-chain. For example, it puts a lot of emphasis on on-chain data compression and reasoning. Instead of linking to outside files that might disappear or require trust, it compresses raw inputs into "Seeds," which are queryable chunks that stay verifiable on the network. This means apps can store things like compliance documents or proofs directly, without the usual metadata mess. It also tries to avoid leaning too heavily on oracles for outside data, or on decentralized storage such as IPFS, both of which can add latency or centralization risk in practice.

What does that mean for real use? If you are running a gaming app or an AI workflow, you do not want to worry about data integrity breaking in the middle of a session. The chain can act as both storage and processor, which cuts down on the problems I mentioned earlier, like waiting for off-chain resolution or dealing with unpredictable fees.
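To make the "verifiable instead of linked" idea concrete, here is a minimal sketch, assuming a Seed can be modeled as a compressed payload stored together with a hash of the original bytes. This is not Vanar's Neutron method: Neutron is described as AI-based semantic compression, while this uses plain `zlib`, and the `Seed`, `make_seed`, and `verify_seed` names are hypothetical. It only shows why data that lives next to its digest can be checked by anyone, whereas an off-chain link can silently change or vanish.

```python
import hashlib
import zlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Seed:
    """Hypothetical on-chain record: compressed payload plus a digest of the raw data."""
    digest: str     # SHA-256 hex digest of the original (uncompressed) bytes
    payload: bytes  # zlib-compressed original bytes (stand-in for real Seed encoding)


def make_seed(raw: bytes) -> Seed:
    # Hash the raw input first, then compress it at the highest zlib level.
    return Seed(digest=hashlib.sha256(raw).hexdigest(), payload=zlib.compress(raw, 9))


def verify_seed(seed: Seed) -> bool:
    # Anyone holding the record can decompress it and recheck the digest;
    # no external link or oracle is needed to confirm the content is intact.
    return hashlib.sha256(zlib.decompress(seed.payload)).hexdigest() == seed.digest


if __name__ == "__main__":
    doc = b"KYC record: customer 0042, status=approved\n" * 50
    seed = make_seed(doc)
    print(len(doc), "->", len(seed.payload), "bytes; valid:", verify_seed(seed))
```

Swapping the payload for different content while keeping the old digest makes `verify_seed` return `False`, which is the property an off-chain URL cannot give you on its own.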

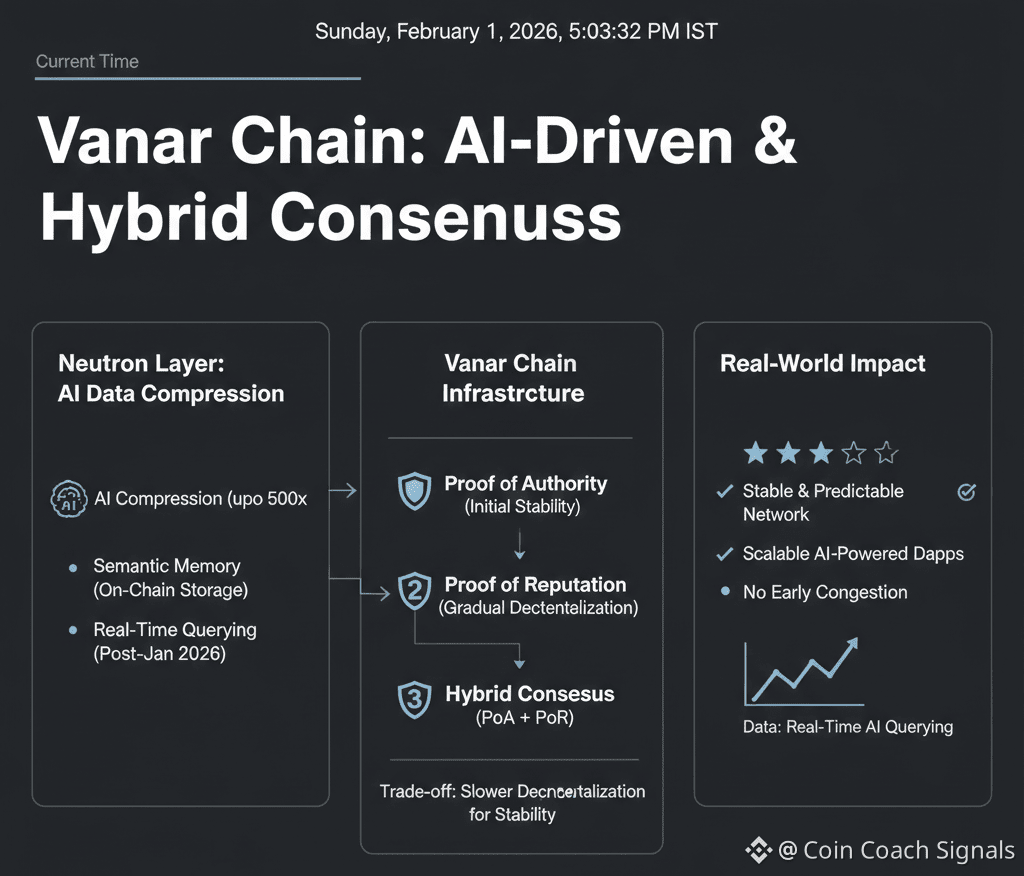

One specific piece of this is their Neutron layer. It uses AI to compress raw data by up to 500 times, turning it into semantic memory that can be stored and analyzed on-chain without bloating blocks. That ties directly into how Vanar Chain operates right now, especially since its AI integration went live in January 2026, letting users run queries in real time without any external tooling. Another part of the implementation is the hybrid consensus. It starts with Proof of Authority for stability, with selected validators producing the early blocks. Over time it layers in Proof of Reputation, which scores nodes on performance metrics so the network can decentralize gradually rather than all at once. The trade-off is that full decentralization arrives more slowly after launch, but in exchange you avoid the early congestion problems that can hit pure PoS setups when validator sets grow too large.
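The post does not spell out how Proof of Reputation actually scores nodes, so the following is only a toy sketch of the "start with authorities, admit reputable nodes gradually" shape. Every metric, weight, threshold, and the per-round admission cap below are hypothetical choices for illustration, not Vanar's formula.

```python
from dataclasses import dataclass


@dataclass
class NodeStats:
    """Hypothetical performance metrics for a candidate validator."""
    name: str
    uptime: float         # fraction of time online, 0.0 to 1.0
    blocks_proposed: int  # scheduled slots the node filled
    blocks_missed: int    # scheduled slots the node failed to fill


def reputation(n: NodeStats) -> float:
    """Toy score: equal-weight mix of uptime and block-production reliability."""
    scheduled = n.blocks_proposed + n.blocks_missed
    reliability = n.blocks_proposed / scheduled if scheduled else 0.0
    return 0.5 * n.uptime + 0.5 * reliability


def validator_set(authorities: list[str], candidates: list[NodeStats],
                  threshold: float = 0.95, max_new: int = 2) -> list[str]:
    """Keep the PoA authorities, then admit at most `max_new` of the
    highest-scoring candidates whose reputation clears `threshold`."""
    ranked = sorted(candidates, key=reputation, reverse=True)
    admitted = [n.name for n in ranked if reputation(n) >= threshold][:max_new]
    return authorities + admitted
```

Capping admissions per round (`max_new`) is what makes the decentralization gradual in this sketch: even if many nodes qualify, the set only grows a little at a time.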

The token, VANRY, plays a simple role in the ecosystem, without any complicated narrative around it. It pays for gas on transactions and smart contract calls, with fees held at around $0.0005 per standard operation, so costs stay stable in dollar terms no matter how volatile the token is. Staking secures the network: holders can delegate to validators and earn a share of block rewards, which are distributed over 20 years. Everything settles on the main chain, which has block times of about 3 seconds; there is no separate settlement layer. Governance runs on a DPoS model, where VANRY stakers vote on upgrades and parameters, and validators and the community decide on network-security matters such as emission rates or validator criteria. On the incentive side, 83% of the remaining emissions (drawn from the 1.2 billion tokens not yet released) goes directly to validators to reward reliable participation. I cannot say what that means for value, only how it works to keep the network running.
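A fee that stays near $0.0005 regardless of token price implies that the number of VANRY charged moves inversely with the price. Here is a minimal sketch of that arithmetic, assuming the protocol has some VANRY/USD price reference to convert against; the post does not say where that price comes from, and `fee_in_vanry` is a hypothetical helper. `Decimal` is used so the small amounts do not pick up float rounding noise.

```python
from decimal import Decimal

FEE_USD = Decimal("0.0005")  # per standard operation, figure quoted in the post


def fee_in_vanry(vanry_usd_price: Decimal) -> Decimal:
    """Tokens to charge so one operation costs about FEE_USD (hypothetical mechanism)."""
    if vanry_usd_price <= 0:
        raise ValueError("price must be positive")
    return FEE_USD / vanry_usd_price


if __name__ == "__main__":
    for price in (Decimal("0.01"), Decimal("0.02")):
        print(f"VANRY at ${price}: fee = {fee_in_vanry(price)} VANRY")
```

If the token price doubles from $0.01 to $0.02, the fee drops from 0.05 to 0.025 VANRY, so the user still pays the same dollar amount.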

Vanar Chain's market cap is currently around $14 million, and the mainnet has processed over 12 million transactions so far. That shows real activity without the hype getting out of hand. Each block can take up to 30 million gas, which makes sense given their focus on high-traffic apps like games.
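To get a feel for what a "30 million" per-block figure means, here is a back-of-the-envelope calculation. It reads that figure as a block gas limit (an interpretation, since gas is the unit EVM chains cap blocks by) and uses the roughly 3-second block time mentioned above. It also assumes every transaction is a plain value transfer at the standard EVM intrinsic cost of 21,000 gas; real contract calls cost more, so this is an upper bound, not a measured throughput.

```python
BLOCK_GAS_LIMIT = 30_000_000  # per-block figure from the post, read as gas
BLOCK_TIME_S = 3              # approximate block time from the post
TRANSFER_GAS = 21_000         # EVM intrinsic gas for a plain value transfer


def max_tps(gas_per_tx: int) -> float:
    """Upper-bound transactions per second if every tx uses `gas_per_tx` gas."""
    txs_per_block = BLOCK_GAS_LIMIT // gas_per_tx  # whole transactions only
    return txs_per_block / BLOCK_TIME_S


if __name__ == "__main__":
    print("plain transfers:", max_tps(TRANSFER_GAS), "tx/s")
    print("100k-gas contract calls:", max_tps(100_000), "tx/s")
```

That works out to 1,428 plain transfers per block, or about 476 per second, falling to 100 per second if each call burns 100,000 gas.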

This makes me think about the difference between betting on long-term infrastructure and chasing short-term trading vibes. In the short term, people jump on price narratives. A partnership announcement might send VANRY up 20% in a day, or some AI buzz might whip up volatility that traders ride for quick flips. But that fades quickly; it is all about timing the pump, not about whether the chain becomes a useful tool. In the long run, it comes down to habits built on reliability. Does the infrastructure make it easy to come back every day without worrying about cost or speed? Vanar Chain's push for AI-native features, like the Kayon engine expansion planned for 2026 to scale on-chain reasoning, could help with that if it works, turning one-off tests into regular workflows. It is less about moonshots and more about whether developers make a habit of deploying there because the data handling works, which is what builds real infrastructure value over time.

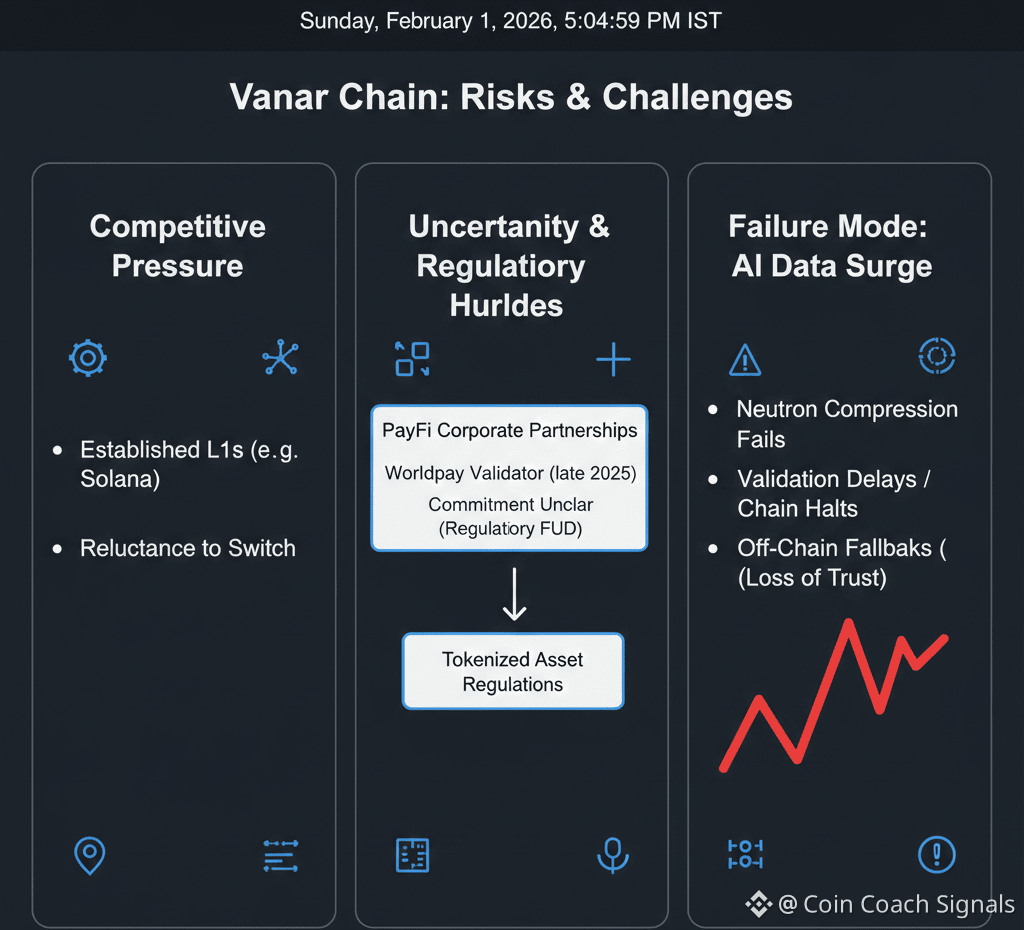

There are risks everywhere, and Vanar Chain is no different. If adoption does not pick up, it could be sidelined by established L1s like Solana, which already lead on speed for gaming. Why switch if your app works fine elsewhere? Then there is enterprise uncertainty: even though big companies have recently partnered with Vanar on PayFi, such as Worldpay becoming a validator in late 2025, it is still not clear whether they will fully commit to its solutions given the regulatory problems around tokenized assets. One real failure mode I have thought about is a sudden surge of AI queries. If Neutron compression cannot keep up with the volume of data, validation could be delayed or the chain could even halt temporarily, because semantic processing might not scale linearly. That would push users toward off-chain fallbacks and erode the trust the chain is built on.

All of this makes me think about how time tells the story with these things. It is not the first flashy transaction that matters; it is whether people stay for the second, third, or hundredth one. Does the infrastructure fade into the background so you can focus on what you are building, or does it keep reminding you of its limits? Vanar Chain's recent moves, like the Neutron rollout that compresses files by up to 500 times for permanent on-chain storage, might be what brings people back for that second transaction. We will see how things go over the next few months.

@Vanarchain #Vanar $VANRY